Why does proper sampling matter in human TTS evaluation?

In the quest to perfect Text-to-Speech (TTS) systems, proper sampling is not a matter of preference. In human evaluation, the choice of which prompts and speech samples listeners judge often decides whether a test predicts real-world success or hides a failure. Poorly chosen samples can produce TTS models that sound impressive in controlled environments but falter in real-world applications. Evaluating a model without representative sampling is like judging a book by its cover: without exploring its depth, the real story remains hidden.

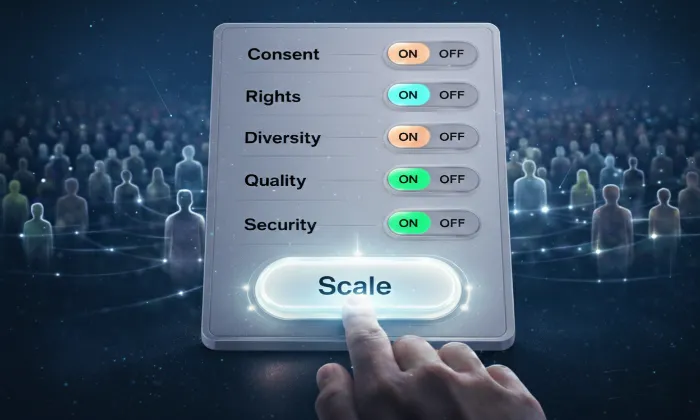

The Pillars of Effective TTS Sampling

Diversity as a Foundation: A robust TTS evaluation must reflect the diversity of human speech. Sampling should include varied demographics, dialects, accents, and emotional tones. For example, a TTS model designed for global customer support should be tested across multiple English accents and different emotional contexts such as urgency, neutrality, and reassurance. Without this diversity, the model risks appearing competent in narrow conditions while failing broader user groups.
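
One way to put this into practice is stratified sampling: group candidate utterances by the metadata you care about and draw from every combination, so that no accent or emotional context is silently missing. The Python sketch below assumes each utterance is a dict with hypothetical `accent` and `emotion` fields; the function name and its parameters are illustrative, not part of any particular toolkit.

```python
import itertools
import random
from collections import defaultdict


def stratified_sample(utterances, strata_values, per_stratum, seed=0):
    """Draw up to `per_stratum` utterances for every combination of metadata values.

    `strata_values` maps a metadata key (e.g. "accent", a hypothetical field) to the
    values that must be covered. Returns the sample plus a dict of combinations that
    had too few candidates, so coverage gaps are visible instead of silent.
    """
    rng = random.Random(seed)  # fixed seed keeps the evaluation set reproducible
    keys = list(strata_values)

    # Bucket candidates by their tuple of metadata values.
    pools = defaultdict(list)
    for utt in utterances:
        pools[tuple(utt[k] for k in keys)].append(utt)

    sample, shortfalls = [], {}
    # Walk every required combination, including ones with no candidates at all.
    for combo in itertools.product(*(strata_values[k] for k in keys)):
        pool = pools.get(combo, [])
        take = min(per_stratum, len(pool))
        sample.extend(rng.sample(pool, take))
        if take < per_stratum:
            shortfalls[combo] = per_stratum - take  # items still needed for this stratum
    return sample, shortfalls
```

Calling `stratified_sample(pool, {"accent": ["en-US", "en-GB", "en-IN"], "emotion": ["urgent", "neutral", "reassuring"]}, per_stratum=20)` would return up to 20 utterances per accent and emotion pair, and the `shortfalls` dict shows which combinations need more material before the test set is genuinely diverse.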

Contextual Relevance: Sampling should align directly with the model’s intended use case. A voice assistant benefits from conversational prompts, while an audiobook system requires narrative passages rich in expression. Contextual misalignment produces misleading results and creates gaps between evaluation performance and real-world experience.
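
To keep the evaluation set aligned with a use case, the mix of prompt types can be fixed up front and converted into per-type counts. In this sketch the use cases, prompt types, and percentage weights in `USE_CASE_MIX` are hypothetical placeholders, not recommendations; integer weights with largest-remainder rounding keep the counts summing exactly to the requested total.

```python
# Hypothetical prompt-type mixes per use case (integer percentage weights).
USE_CASE_MIX = {
    "voice_assistant": {"conversational": 70, "command": 20, "narrative": 10},
    "audiobook": {"narrative": 70, "dialogue": 20, "conversational": 10},
}


def allocate_prompts(use_case, total):
    """Split `total` evaluation prompts across prompt types for a use case.

    Uses the largest-remainder method with integer arithmetic, so the result
    always sums to `total` and avoids floating-point rounding surprises.
    """
    weights = USE_CASE_MIX[use_case]
    weight_sum = sum(weights.values())
    counts = {p: w * total // weight_sum for p, w in weights.items()}
    remainders = {p: w * total % weight_sum for p, w in weights.items()}
    leftover = total - sum(counts.values())
    # Hand the remaining slots to the types with the largest remainders.
    for p in sorted(weights, key=lambda p: remainders[p], reverse=True)[:leftover]:
        counts[p] += 1
    return counts
```

For instance, `allocate_prompts("voice_assistant", 100)` yields 70 conversational, 20 command, and 10 narrative prompts, while an audiobook evaluation of the same size would be dominated by narrative passages instead.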

Precision in Subtlety: Subtle attributes such as prosody, stress patterns, and intonation significantly influence perceived quality. A model that performs well in neutral delivery may struggle with expressive or emotionally sensitive content. Sampling should deliberately include scenarios that test empathy, urgency, and tonal variation to uncover these weaknesses.
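
Weaknesses in expressive delivery are easy to miss when all ratings are pooled, because a large volume of neutral samples pulls the average up. A small sketch, assuming listener ratings are stored as hypothetical `(category, score)` pairs on a 1 to 5 scale, reports each style category separately and flags those that trail the pooled mean; the `margin` default is an arbitrary illustration.

```python
from collections import defaultdict
from statistics import mean


def weak_categories(ratings, margin=0.3):
    """Return style categories whose mean score trails the pooled mean by more than `margin`.

    `ratings` is a list of (category, score) pairs, e.g. ("urgent", 3.5).
    The result maps each flagged category to its mean score (rounded to 2 places).
    """
    by_category = defaultdict(list)
    for category, score in ratings:
        by_category[category].append(score)

    pooled = mean(score for _, score in ratings)  # what a single headline number would show
    return {
        category: round(mean(scores), 2)
        for category, scores in by_category.items()
        if pooled - mean(scores) > margin
    }
```

For example, eight neutral ratings of 4.5 and two empathetic ratings of 3.0 give a pooled mean of 4.2, which looks healthy, yet `weak_categories` returns `{"empathetic": 3.0}`.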

Sampling Frequency and Size: Evaluation cannot rely on a single snapshot. Regular and varied sampling helps detect silent regressions and performance drift, and ongoing assessments help confirm that updates, fine-tuning, and domain expansion do not introduce hidden quality declines. Each round also needs enough samples and ratings that a change in scores reflects the model rather than listener noise.
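
Both frequency and size can be made concrete with simple statistics. The sketch below compares listener scores from two evaluation rounds using a normal-approximation confidence interval for the difference in means, and estimates how many ratings are needed for a target margin of error, using $n = \lceil (z\sigma / E)^2 \rceil$. It assumes ratings are independent, which real listening tests violate when the same listeners rate many utterances, so treat the output as a rough guide rather than a formal test; the function names are hypothetical.

```python
import math
from statistics import mean, variance


def mos_regression(old_scores, new_scores, z=1.96):
    """Flag a likely regression between two rounds of ratings on the same test set.

    Uses a normal approximation with a Welch-style standard error for the
    difference in mean scores (new minus old). z=1.96 gives a ~95% interval.
    Assumes independent ratings, which is a simplification.
    """
    diff = mean(new_scores) - mean(old_scores)
    se = math.sqrt(variance(old_scores) / len(old_scores)
                   + variance(new_scores) / len(new_scores))
    low, high = diff - z * se, diff + z * se
    # Flag a regression only if the whole interval lies below zero.
    return {"diff": diff, "ci": (low, high), "regression": high < 0}


def ratings_needed(score_sd, margin, z=1.96):
    """Number of ratings so the mean's confidence half-width is at most `margin`."""
    return math.ceil((z * score_sd / margin) ** 2)
```

With a rating standard deviation of 1.0, `ratings_needed(1.0, 0.1)` gives 385, while a looser margin of 0.2 needs only 97, which shows how quickly precision becomes expensive.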

Rigorous Quality Control: Sampling must be supported by structured quality control. Verifying metadata consistency, rotating test sets, and embedding structured feedback loops help keep samples representative and unbiased. Without these safeguards, evaluation results can give false confidence.
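
Two of these safeguards are straightforward to automate. The sketch below checks every sample against a required schema and an allowed vocabulary, and draws a different but reproducible subset of a held-out pool for each evaluation round, so that a fixed test set does not become overly familiar. The field names in `REQUIRED_FIELDS`, the vocabulary passed as `allowed`, and the string-based seeding scheme are hypothetical assumptions.

```python
import random

REQUIRED_FIELDS = {"id", "text", "accent", "emotion"}  # hypothetical schema


def validate_metadata(samples, allowed):
    """Return (id, problem) pairs for missing fields or values outside the allowed vocabulary.

    `allowed` maps a field name (which must be in REQUIRED_FIELDS) to its permitted values.
    """
    problems = []
    for s in samples:
        missing = REQUIRED_FIELDS.difference(s)  # iterating a dict yields its keys
        if missing:
            problems.append((s.get("id"), f"missing {sorted(missing)}"))
            continue
        for field, values in allowed.items():
            if s[field] not in values:
                problems.append((s["id"], f"unexpected {field}={s[field]!r}"))
    return problems


def rotating_subset(pool_ids, round_number, size, seed=1234):
    """Pick a different, reproducible subset of the held-out pool for each round."""
    rng = random.Random(f"{seed}-{round_number}")  # str seeds are deterministic
    return sorted(rng.sample(sorted(pool_ids), size))
```

Running `validate_metadata` before each round and refusing to proceed while it reports problems is one simple way to turn the check into a gate rather than an afterthought.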

Real-World Implications and Strategic Application

Proper sampling forms the foundation of reliable TTS evaluation. When diversity, contextual alignment, and structured oversight are embedded into sampling strategies, teams gain a realistic understanding of model performance. This reduces the risk of overestimating quality based on narrow or overly controlled conditions.

FutureBeeAI supports organizations in implementing structured and context-aware sampling methodologies that strengthen evaluation accuracy. By adopting disciplined sampling strategies, teams can ensure their TTS systems resonate authentically with diverse users and evolving real-world expectations.

If you are ready to refine your sampling techniques and ensure your evaluation reflects genuine user diversity, connect with our team to explore tailored solutions for your AI initiatives.
