AI Data Readiness

Scaling AI Data

8 Ethical Readiness Questions Teams Ask Before Scaling AI Data

Eight structural questions to assess AI data readiness before scaling AI data, covering consent, rights, diversity, quality, traceability, and security.

AI Data Readiness

Scaling AI Data

Eight structural questions to assess AI data readiness before scaling AI data, covering consent, rights, diversity, quality, traceability, and security.

There is a specific point in every AI program where things look deceptively stable. The pilot dataset performed well. Annotation quality is high. Early model metrics are promising. Leadership is aligned. The roadmap moves from experiment to expansion. Technically, everything seems ready.

And yet experienced teams hesitate.

Because scaling AI data is not just about increasing volume. It is about exposing every hidden weakness in the data layer. What worked smoothly for a few hundred contributors can quietly fracture when thousands enter the pipeline. What feels manageable through manual oversight becomes fragile when automation, reuse, and cross-team collaboration accelerate.

This is where AI data readiness becomes real. Not at the infrastructure layer. Not at the strategy layer. At the dataset layer itself.

Before expanding multilingual collections, retraining models, or increasing contributor pools, strong teams ask a harder question: Is our dataset ethically and operationally designed to survive scale?

Below are the eight questions that matter most when that moment arrives.

When AI systems scale, failure rarely begins with model performance. It begins with data governance.

Consent becomes vague.

Scope boundaries blur.

Contributor rights become difficult to enforce.

Diversity skews under convenience pressure.

Metadata definitions drift across teams.

Audit trails become harder to reconstruct.

Access expands quietly beyond necessity.

These fractures do not appear overnight. They accumulate slowly as datasets grow, as reuse increases, and as organizational complexity expands. At a small scale, teams compensate manually. At production scale, those compensations stop working.

Ethical AI data is not a policy statement. It is operational infrastructure. And infrastructure either survives load or it does not.

With that context, here are the eight questions experienced teams ask before scaling AI data.

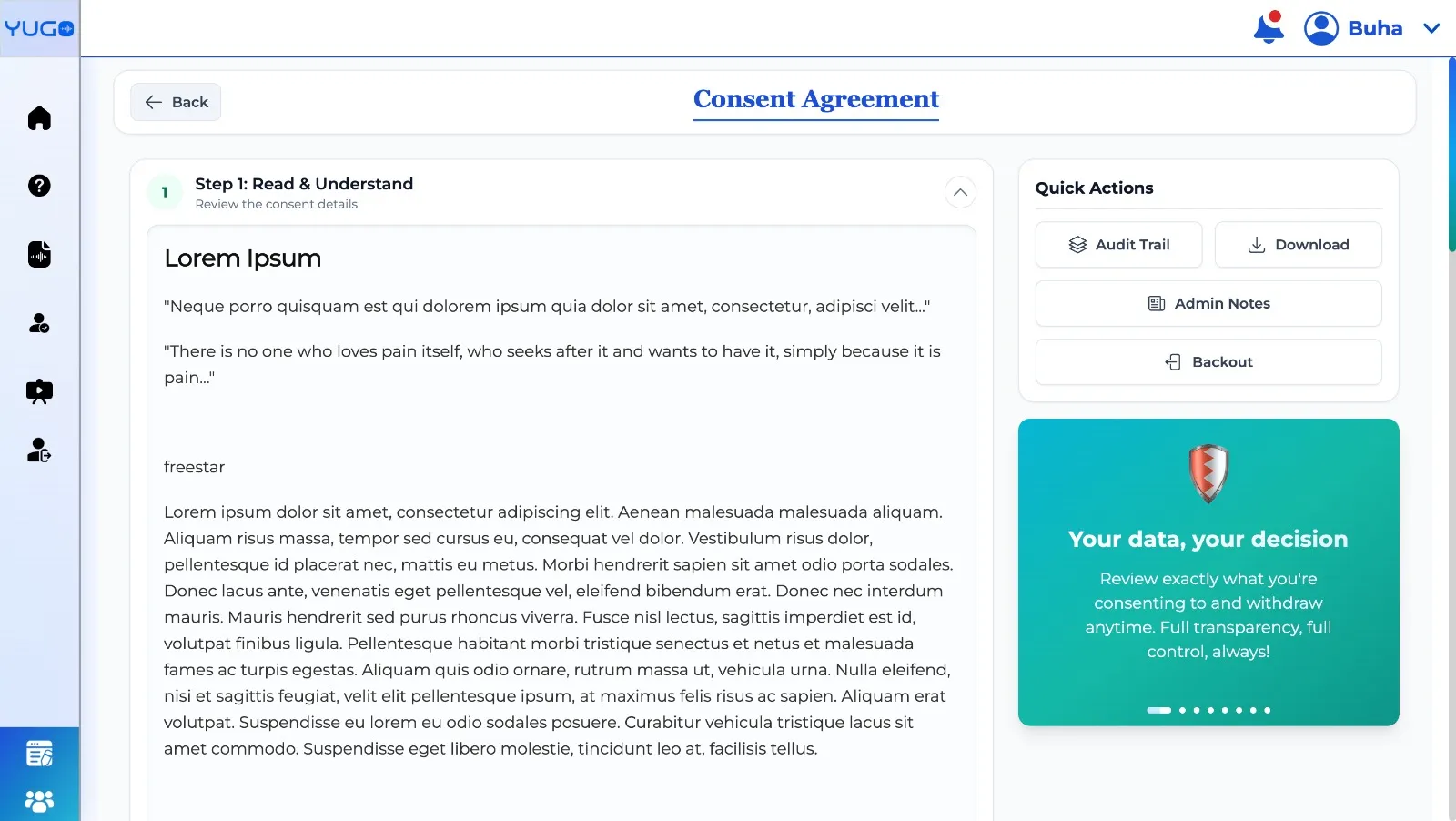

Consent feels clear when participation is limited. A small group of contributors reads a document, signs it, and submits data. Oversight feels personal. But when participation expands from hundreds to thousands, consent cannot remain manual or informal. It must become system-driven through structured consent workflows.

At scale, the first question is whether consent is captured individually or abstractly. Is each contributor logging in through assigned credentials? Is their email verified? Are they reviewing detailed project information before signing a project-specific consent? And critically, can they access the data collection interface without completing that step?

Consent that can be bypassed is not consent that survives scale.

Equally important is traceability. Is each consent record stored as a discrete artifact tied to a specific contributor account? Can it be connected directly to the dataset being built? Without that linkage, it becomes impossible to defend the integrity of downstream usage.

Understanding matters as much as signature. Legal text alone is insufficient. Contributors should encounter non-legal language explainers and accessible user rights information so that agreement is informed, not rushed. Consent management in AI must be systematic, traceable, and understandable. Otherwise it quietly degrades as throughput increases.

In sensitive domains such as healthcare speech projects, this distinction becomes critical. If contributors are collecting de-identified doctor–patient conversation recordings, generic “AI training” language is not enough. They must explicitly acknowledge that the task involves handling health-related content aligned with GDPR and HIPAA principles before participating. Scale does not reduce that responsibility. It amplifies it.

As datasets mature, reuse becomes inevitable. Models are retrained. Fine-tuned across versions. Adapted to new tasks. Embedded into new systems. What was initially a research project can evolve into commercial deployment.

If consent language remains generic, scope expands silently.

A robust consent framework must explicitly define whether data is collected for research, commercial use, retraining across model versions, or derivative model development. It must also define retention periods and licensing boundaries. Is usage perpetual or time-bound? What happens when the retention window closes?

Just as important as use cases are non-use cases. If a dataset is collected for speech recognition, it cannot quietly migrate into biometric surveillance applications. If voice cloning is not permitted, that restriction must be explicit. Clear consent lineage means that when someone opens a dataset months later, they understand not only what they can do with it but also what they cannot.

Scaling AI data without explicit scope clarity introduces long-term legal and ethical ambiguity. Clarity at collection prevents silent expansion later.

Ethics does not end at onboarding. It becomes more complex afterward.

At a small scale, handling deletion or withdrawal requests manually is manageable. At large scale, contributor rights in AI datasets must be operationally embedded.

Can contributors log into their account and withdraw from a project without friction? Can they submit a deletion request without navigating multiple layers of support? When such a request is made, is the data immediately flagged for no further use? Is deletion executed within GDPR-aligned timelines? Is confirmation provided?

Ease of exit must match ease of entry. If joining the dataset takes one click but leaving it requires escalation, rights become symbolic.

The deeper question is systemic. Once data flows across annotation teams, QA pipelines, and client delivery channels, who owns enforcement? Are contributor rights preserved as data moves downstream? Or do they depend on informal coordination?

When scaling AI data, rights enforcement must move from policy to workflow. Anything less becomes unstable under volume.

Diversity does not sustain itself under scale. It erodes under convenience.

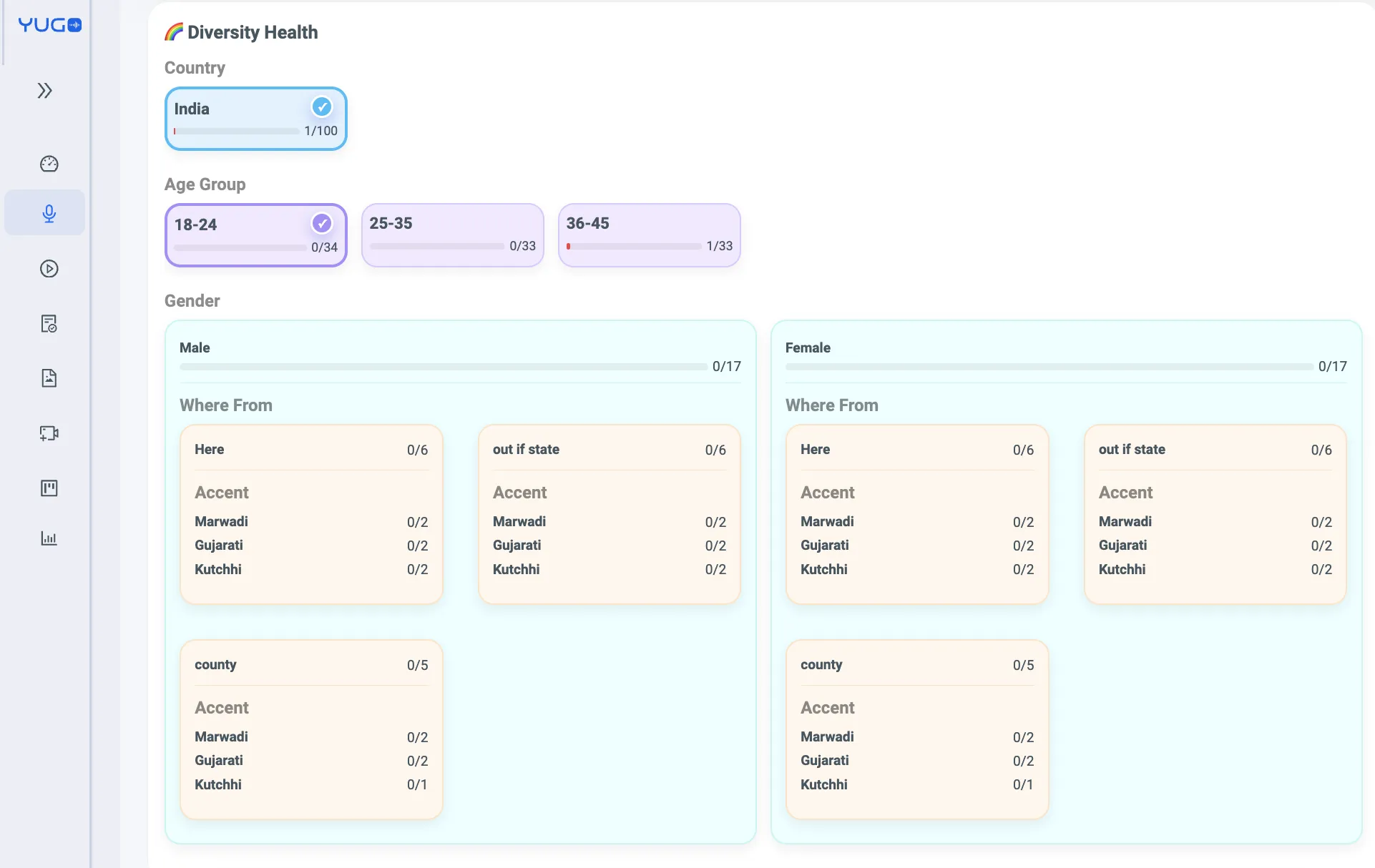

In multilingual AI datasets especially, representation must be designed before collection begins. Geography, gender distribution, age brackets, device type, accent variation, and other relevant parameters should be collaboratively defined during project planning. Diversity targets should not exist only in spreadsheets. They must be operationalized.

As contributors onboard and submit demographic information, live dashboards should surface hiring, participation, and completion rates across diversity brackets. This allows skew to be detected during collection rather than discovered after delivery.

Without active monitoring, easy-to-collect profiles accumulate. Harder-to-reach segments lag. Over time, this imbalance compounds. A dataset that looked balanced at 1,000 contributors can become skewed at 20,000 if mid-course correction is absent.

Representation is not aspirational. It is measurable. And scaling AI data without live diversity tracking risks building structural bias into the foundation of the model itself.

As contributor volume increases, so does pressure for throughput. Speed begins competing with correctness.

AI dataset quality at scale depends on clarity, customization, and feedback loops. Instructions that work in one demographic context may create friction in another. For example, localizing a data collection interface into a contributor’s native language can significantly improve comprehension and reduce annotation errors. Usability is not cosmetic. It is quality control.

Guidelines must also evolve deliberately. When initial data batches are delivered, client feedback often reveals edge cases or ambiguities. Updates to instructions should be implemented systematically across the platform, not informally communicated. Otherwise consistency breaks across contributor groups.

Ambiguous samples are another fault line. If contributors can silently skip unclear items, edge cases disappear instead of being resolved. Requiring a reason for skip creates visibility. Dedicated review teams can then analyze patterns and refine guidelines in collaboration with clients.

Clarity prevents inconsistency. Without structured quality controls, scaling AI data risks trading reliability for speed.

Metadata is the structural memory of a dataset. Without it, governance collapses.

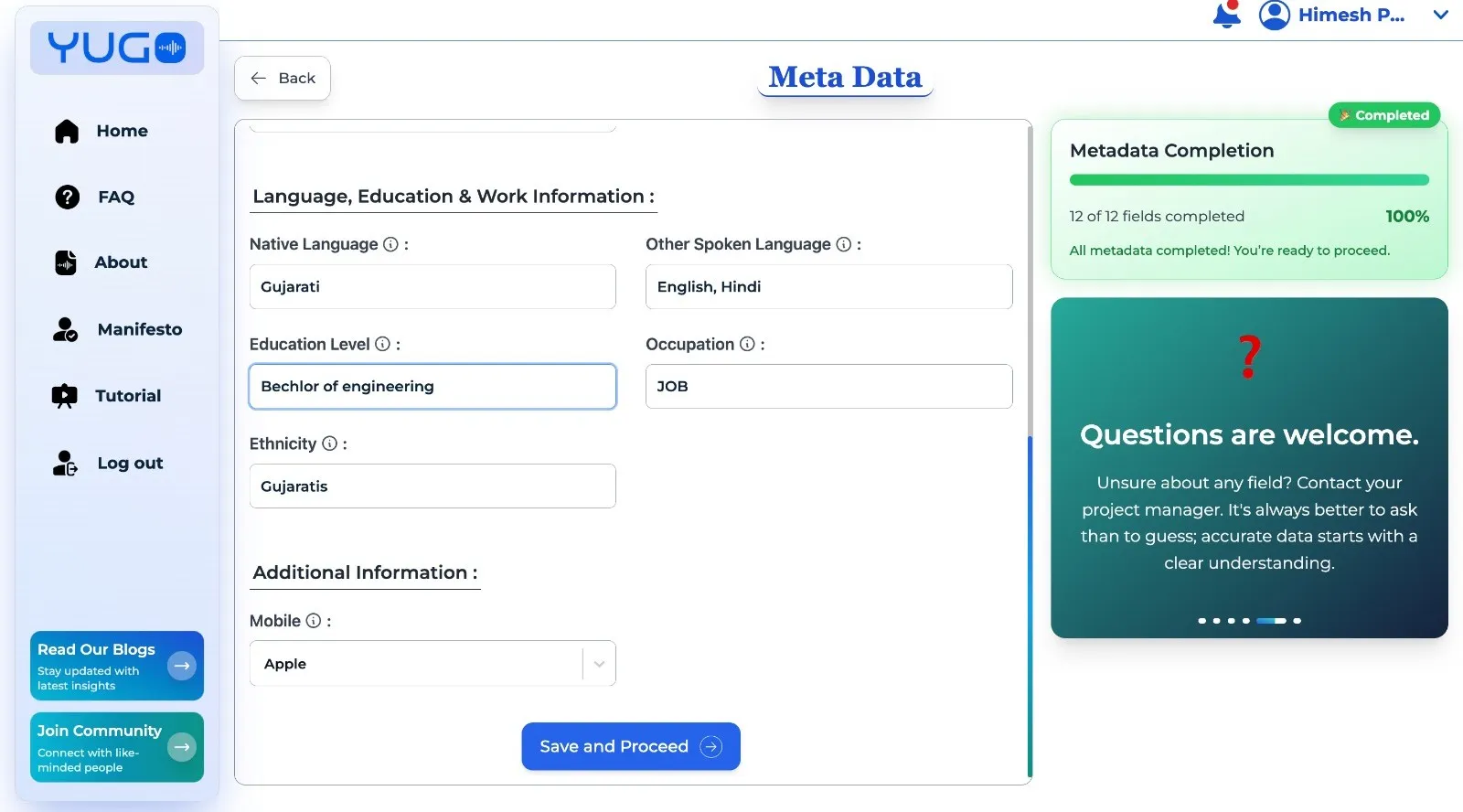

Before large-scale collection begins, metadata definitions should be finalized in collaboration with stakeholders. Two principles guide this stage: collect what is necessary, and avoid collecting data “just in case.” Ethical alignment requires restraint. Excessive metadata collection increases both compliance burden and contributor risk.

Once defined, metadata fields should be structured within the platform using controlled formats such as dropdowns, fixed values, and validation rules. Automated metadata like consent version, dataset version, collection dates, and QA timestamps should be consistently captured. This prevents spelling inconsistencies, formatting drift, and semantic confusion over time.

Contributors should also understand why metadata matters. Informational prompts that explain the purpose of specific fields encourage thoughtful input rather than mechanical completion.

As projects extend across months or years, and as new teams interact with legacy datasets, consistency becomes crucial. AI data governance depends on metadata that retains meaning across time, not just at the moment of collection.

When models evolve, traceability becomes non-negotiable.

Every data point should be linkable to a contributor identity, a specific consent version, and a dataset version. This connection creates data provenance that supports audit resilience. If questions arise months later about usage scope or authorization, reconstruction should be possible without ambiguity.

Dataset versioning should document changes over time. When new data is added, when annotations are refined, or when subsets are extracted for new use cases, those transformations should be recorded. Dataset traceability is not a theoretical compliance language. It is a practical defense against uncertainty.

Without clear lineage, scaling AI data introduces compounding opacity. With it, teams retain the ability to explain, justify, and audit decisions long after collection ends.

Security failures at scale are ethical failures.

AI data security must be embedded at every stage of the workflow. Access should be role-based and time-bound. Contributors and QA reviewers should only see the data necessary for their assigned tasks, and only for the required duration. Assigning limited batches rather than full datasets reduces exposure risk.

Processing should occur within controlled environments. Data should not be downloaded to uncontrolled personal devices. Uploads should move directly into secure server infrastructure rather than through informal channels.

Retention policies must align with what was defined in consent. If usage rights are time-bound, storage must reflect that boundary. If clients receive datasets under specific licensing constraints, those constraints must be mirrored operationally.

Security is not a document. It is an access architecture. And when scaling AI data, access must equal necessity. Nothing more.

Frameworks such as Google PAIR emphasize that responsible AI development requires aligning data practices, transparency, and user-centered safeguards from the start rather than retrofitting them after deployment.

When these eight areas are engineered deliberately, AI data readiness becomes visible.

Consent is embedded into onboarding workflows and tied to specific dataset versions. Usage scope is clearly defined, including non-use cases. Contributor rights are automated and enforceable. Diversity targets are configured and monitored in real time. Quality controls evolve through structured feedback loops. Metadata is structured, minimal, and versioned. Traceability connects every data point to its origin. Security is implemented through role-based, time-bound access inside controlled environments.

This does not eliminate complexity. It contains it.

FutureBeeAI approaches dataset design with this infrastructure mindset. We are multilingual. Multi-domain, multi-modality datasets are built through structured workflows inside our platform so that consent, diversity, metadata, and security remain connected from collection to delivery. Ethical AI data is not treated as an overlay. It is embedded into the process.

Learn more about our approach on the FutureBeeAI homepage or explore related insights on the FutureBeeAI Blog.

AI data readiness is not about slowing innovation. It is about preventing silent erosion as scale accelerates.

Acquiring high-quality AI datasets has never been easier!!!

Get in touch with our AI data expert now!