Introduction

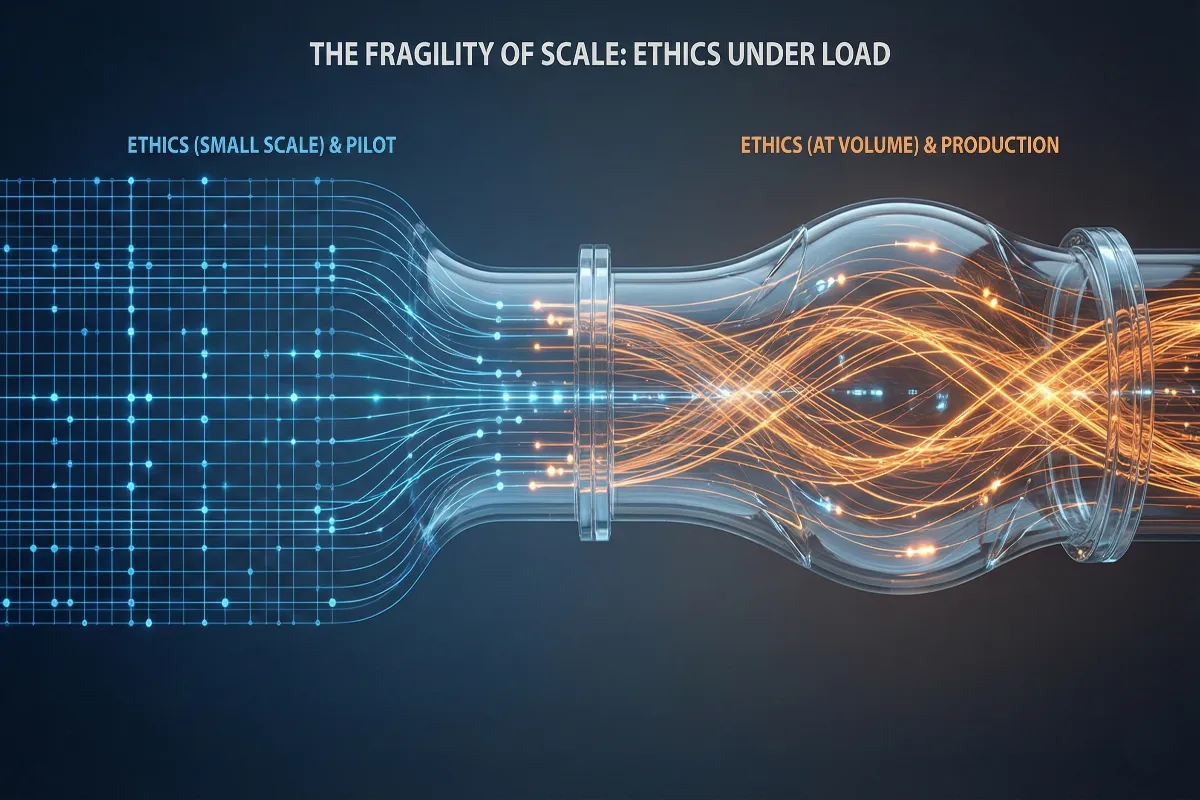

Most AI teams never notice the moment ethics stops being guaranteed.

There is no alert for it. No failed experiment. No internal escalation. Models continue to train. Benchmarks still improve. Deadlines are met. From the outside, everything appears to be working exactly as it should.

And yet, months later, someone asks a simple question and no one has a clean answer. Where exactly did this subset come from? Which consent terms applied when it was collected? Can this data still be used in the way it is being used today?

The first dataset rarely raises these concerns. It usually feels like a quiet success. A few thousand samples. Contributors verified. Consent clearly recorded. Metadata filled in with care. Every file traceable, every decision deliberate. Teams feel proud not only of the data quality, but of the process behind it.

Then pressure arrives.

Timelines compress. The dataset needs to grow tenfold. New regions are added. New languages enter the pipeline. Manual checks are replaced with automation. Review shifts from every sample to statistical coverage. Nothing breaks. Nothing looks unethical. Nothing “goes wrong” all at once.

But ethical guarantees begin to thin.

This is how ethical AI fails in production. Not through scandal or negligence, but through quiet erosion. Intent remains intact. Systems struggle to carry it forward. This is not a moral failure. It is a systems failure, revealed by scale.

Ethics feels natural when everything is small

Ethical AI often feels effortless at the beginning, and that feeling is not misleading. It is situational.

In small teams, humans still touch almost everything. The people designing the data collection process are often the same ones reviewing samples, speaking with contributors, and understanding context directly. Oversight is personal rather than procedural. Judgment is immediate. Memory fills the gaps where documentation is still light.

In this phase, ethics is enforced through proximity. Responsibility lives in people’s heads. If something feels wrong, someone notices. Ethical behavior is reinforced by familiarity and emotional ownership rather than by formal systems.

This works surprisingly well, for a while.

But it depends on conditions that do not survive growth. As datasets expand, teams grow, and operations spread across regions and time zones, human memory becomes unreliable. Judgment fragments. Responsibility diffuses. Process replaces proximity, often before those processes are mature enough to preserve ethical intent.

What felt intuitive at a small scale becomes fragile at volume. Early ethics is human-powered. Sustained ethics requires something more durable.

The first cracks no one intends to create

Ethical erosion rarely starts with a bad decision. It starts with reasonable ones.

An onboarding step is simplified to meet volume targets. A validation rule is relaxed because it slows throughput. A metadata field is made optional to avoid blocking progress. An assumption from an earlier batch is reused without being revisited.

Each choice makes sense on its own. Together, they compound.

The driver here is not indifference, but pressure. No one decides to act unethically. They decide to move faster, unblock a pipeline, and fix edge cases later. Ethics is not dismissed. It is deferred.

This is the inflection point most teams miss.

Ethical responsibility quietly shifts from something enforced by judgment to something enforced by systems. And once shortcuts are embedded into workflows, they scale automatically. Intent does not.

When scale redefines what responsible means

As AI systems grow, responsibility itself changes shape.

At small volumes, ethical behavior is guided largely by intention. Teams care, and that care shows up in day-to-day decisions.

As datasets move into the hundreds of thousands, ethics becomes a question of consistency. Processes must behave the same way every time, regardless of who is running them or which region the data comes from.

At a large scale, ethical AI becomes a systems discipline.

Manual review turns into sampling. Individual judgment turns into rules. Trust turns into auditability. Human memory turns into documentation. Responsibility moves away from what teams mean to do and toward what their systems can guarantee under pressure.

This shift is not philosophical. It is operational. Ethical AI at scale is defined less by intent and more by the reliability of the structures carrying that intent forward, a challenge closely tied to AI traceability and governance in real production systems.

When ethical guarantees begin to weaken, metadata is usually the earliest signal.

The data itself may still look healthy. Audio remains clear. Images appear usable. Text reads as expected. Model performance may even improve. From the outside, nothing appears broken.

Underneath, the structure starts to erode.

Consent timestamps become inconsistent. Contributor attributes lose precision. Labels drift across batches. Lineage becomes harder to reconstruct. The dataset still functions, but it no longer fully knows where it came from.

This is where ethical guarantees break down.

Without lineage, consent cannot be verified with confidence. Without traceability, deletion and revocation requests become risky. Without metadata integrity, audits turn into reconstruction exercises. Loss of lineage is loss of ethics, and metadata decay is often the first measurable sign that systems are no longer preserving responsibility at scale. The pattern is especially visible in regulated industries, where data transparency is non-negotiable.
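One way to make metadata decay measurable is to track, per batch, the share of records that still carry every field needed to verify consent and lineage. The sketch below is illustrative only: the field names, the `batch_id` key, and the 0.99 threshold are assumptions made for this example, not a description of any particular pipeline.

```python
# Hypothetical required fields; a real schema would be project-specific.
REQUIRED_FIELDS = ("contributor_id", "consent_version", "consent_timestamp", "source")


def completeness_by_batch(records):
    """Return {batch_id: fraction of records with every required field filled}."""
    totals = {}
    complete = {}
    for rec in records:
        batch = rec.get("batch_id", "unknown")
        totals[batch] = totals.get(batch, 0) + 1
        # A field counts as present only if it is neither missing nor empty.
        if all(rec.get(name) not in (None, "") for name in REQUIRED_FIELDS):
            complete[batch] = complete.get(batch, 0) + 1
    return {batch: complete.get(batch, 0) / totals[batch] for batch in totals}


def decaying_batches(records, threshold=0.99):
    """List batches whose completeness has dropped below the threshold."""
    rates = completeness_by_batch(records)
    return sorted(batch for batch, rate in rates.items() if rate < threshold)


records = [
    {"batch_id": "b1", "contributor_id": "c1", "consent_version": "v1",
     "consent_timestamp": "2024-01-05T09:00:00", "source": "pilot"},
    {"batch_id": "b2", "contributor_id": "c2", "consent_version": "v1",
     "consent_timestamp": "2024-06-01T12:00:00", "source": "vendor"},
    {"batch_id": "b2", "contributor_id": "c3", "consent_version": "v1",
     "consent_timestamp": "", "source": "vendor"},
]
flagged = decaying_batches(records)
```

Here `flagged` contains only `"b2"`, the batch where one record has lost its consent timestamp. Watching this rate over time turns a quiet erosion into a number that can be alerted on.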

The quiet human cost of ethical erosion

When metadata and lineage weaken, the impact is not abstract.

Contributors lose clarity over where their data is used and under what terms. Revocation requests become difficult to honor with certainty. Consent, once explicit, turns into an assumption carried forward by default.

This is not dramatic harm, but it is real. Ethical erosion reduces accountability precisely where it matters most: at the boundary between systems and the people who supply the data that makes those systems possible.

The longer this ambiguity persists, the harder it becomes to restore trust, internally or externally. Ethical failures at scale often show up first as uncertainty, long before they show up as violations, particularly when informed consent in AI data collection is not treated as a living, traceable process.

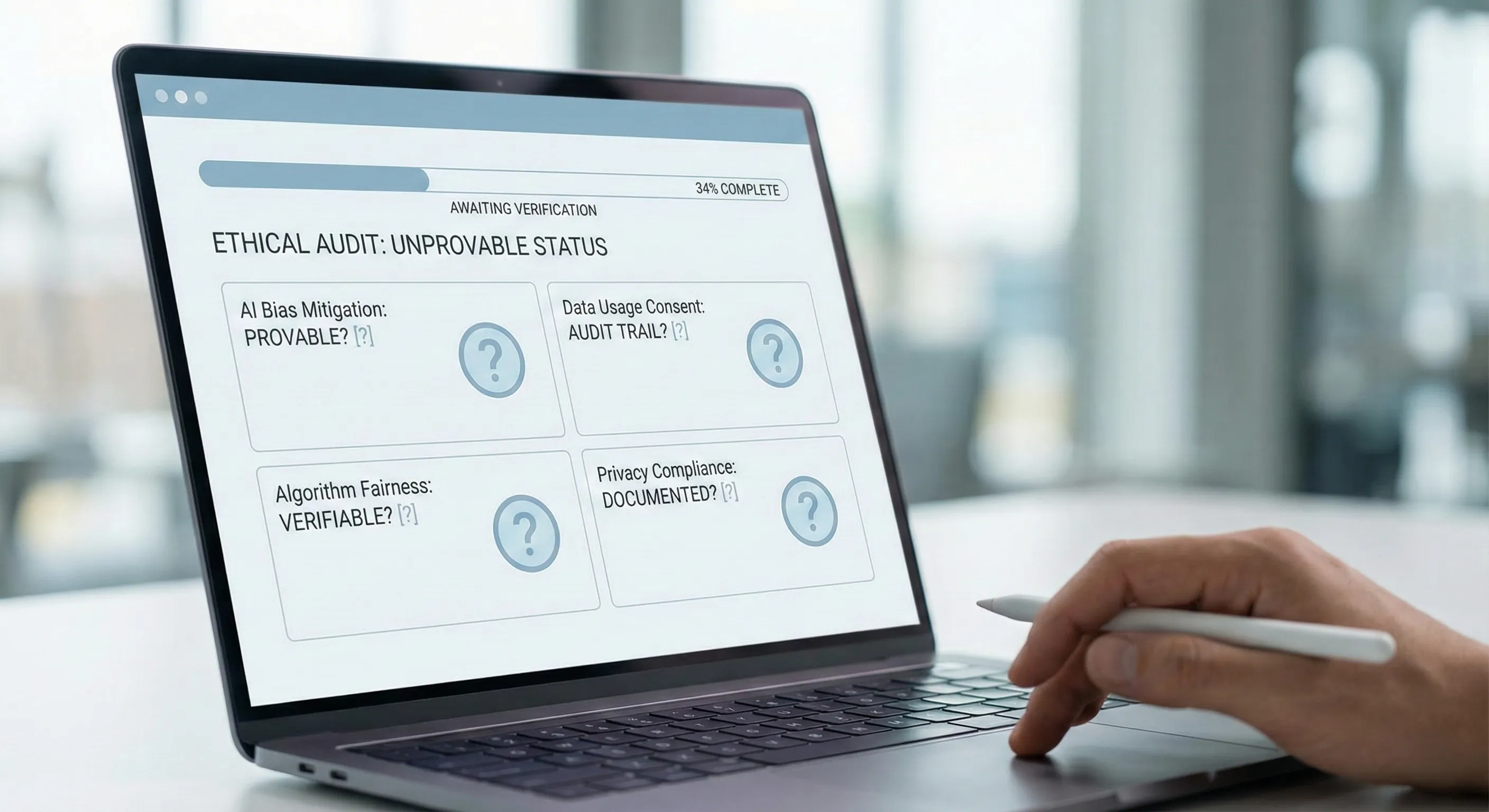

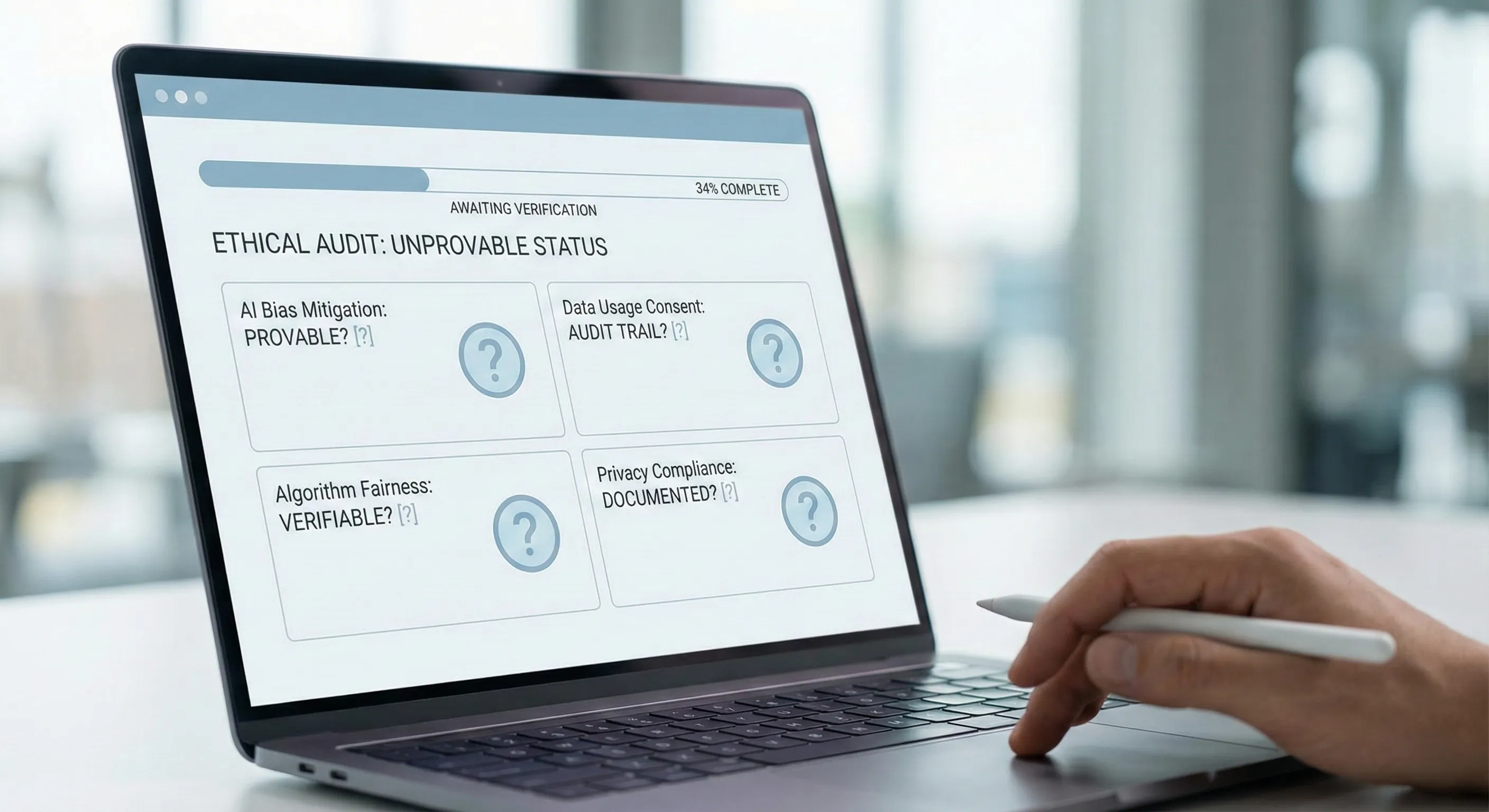

When ethics turns into a liability

Over time, teams grow cautious about their own ethical claims.

Not because they care less, but because they are no longer sure they can demonstrate them. Quiet questions start surfacing internally. Can we prove consent for this subset? Can we remove a contributor’s data reliably if requested? Can we explain how this dataset was built months from now?

When systems cannot answer these questions clearly, ethics stops feeling like a principle and starts feeling like a risk.

Ethics only feels expensive when it is undocumented.

At this stage, teams are not afraid of doing the wrong thing. They are afraid of being unable to prove the right one. That fear signals that ethical responsibility has not been operationalized deeply enough to survive growth.

Designing processes that do not break under scale

Avoiding this outcome requires a shift in how ethics is operationalized.

At scale, ethical AI depends on processes that behave predictably under pressure. Consent cannot be interpreted differently per project. It must be captured and applied consistently across contributor types and regions. Metadata cannot be “best effort.” It must be mandatory, with clear rules for how missing or inconsistent information is handled.
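As a minimal sketch of what "mandatory, with clear rules" could look like in code, the example below validates each sample's metadata at ingestion and routes anything incomplete to quarantine instead of letting it pass silently. The `SampleMetadata` fields, the set of known consent versions, and the accept/quarantine policy are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Hypothetical consent versions that the project currently recognizes.
KNOWN_CONSENT_VERSIONS = {"v1", "v2"}


@dataclass(frozen=True)
class SampleMetadata:
    sample_id: str
    contributor_id: Optional[str]
    consent_version: Optional[str]
    consent_timestamp: Optional[str]  # ISO 8601 string, e.g. "2024-03-01T10:00:00"
    region: Optional[str]


def validate(meta):
    """Return a list of problems; an empty list means the record is complete."""
    problems = []
    if not meta.contributor_id:
        problems.append("missing contributor_id")
    if meta.consent_version not in KNOWN_CONSENT_VERSIONS:
        problems.append("unknown consent_version")
    if not meta.consent_timestamp:
        problems.append("missing consent_timestamp")
    else:
        try:
            datetime.fromisoformat(meta.consent_timestamp)
        except ValueError:
            problems.append("unparseable consent_timestamp")
    if not meta.region:
        problems.append("missing region")
    return problems


def route(meta):
    """Apply the same rule to every project and region: accept or quarantine."""
    return "accept" if not validate(meta) else "quarantine"


good = SampleMetadata("s1", "c1", "v2", "2024-03-01T10:00:00", "IN")
bad = SampleMetadata("s2", "c1", None, "yesterday", None)
```

With these inputs, `route(good)` returns `"accept"` and `route(bad)` returns `"quarantine"`. The point of the design is that the rule for missing information is written down once and applied identically everywhere, rather than being decided case by case under deadline pressure.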

Versioning becomes essential. Teams need to know not just what data exists, but which version is being used, under which assumptions, and with what constraints. Deletion and revocation paths must be defined before they are needed, not improvised when requests arrive.
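The sketch below illustrates one possible shape for a revocation path that is defined ahead of time: an in-memory registry that records which contributor owns each sample, keeps every dataset version immutable, and handles a revocation by publishing a new version without that contributor's samples. The `DatasetRegistry` class and its method names are invented for this example; a production system would back this with durable storage and also propagate removals to downstream copies.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetRegistry:
    # sample_id -> contributor_id, so revocations can be resolved by lookup.
    sample_owner: dict = field(default_factory=dict)
    # Ordered list of (note, frozenset of sample ids); earlier entries never change.
    versions: list = field(default_factory=list)

    def _current(self):
        return self.versions[-1][1] if self.versions else frozenset()

    def add_version(self, samples, note):
        """Publish a new version that adds `samples` ({sample_id: contributor_id})."""
        self.sample_owner.update(samples)
        self.versions.append((note, self._current() | frozenset(samples)))
        return len(self.versions) - 1

    def revoke(self, contributor_id):
        """Publish a new version without the contributor's samples; return what was removed."""
        current = self._current()
        removed = frozenset(
            s for s in current if self.sample_owner.get(s) == contributor_id
        )
        self.versions.append((f"revocation:{contributor_id}", current - removed))
        return removed

    def samples(self, version=-1):
        """Return the sample ids in a given version (latest by default)."""
        return self.versions[version][1]


registry = DatasetRegistry()
pilot = registry.add_version({"a1": "alice", "b1": "bob"}, "pilot")
registry.add_version({"a2": "alice"}, "expansion")
removed = registry.revoke("alice")
```

After the revocation, `removed` holds `a1` and `a2`, the latest version contains only `b1`, and the pilot version is still retrievable for audit. Because each version is recorded with a note, the question "which version is in use, and under which constraints?" has an answer that does not depend on anyone's memory.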

These processes often feel heavy early on. They introduce friction when teams are small and speed feels critical. But they prevent ethical erosion later, when scale makes ad-hoc fixes impossible.

The goal is not perfection; it is repeatability. Processes that survive scale remove discretion where discretion turns into risk.

Processes alone are not enough if the tools supporting them cannot carry the load.

At smaller scale, teams rely on spreadsheets, scripts, and manual tracking. These approaches work until complexity overwhelms them. As datasets expand across languages and regions, informal tooling collapses under volume.

Ethical tooling does not create ethical intent. But it determines whether that intent can be enforced consistently.

In practice, this means traceability that is preserved by default, not reconstructed later. Auditability that reflects the system’s actual state, not a best-effort snapshot. Consistency in how metadata is captured and interpreted across multilingual datasets, where assumptions cannot be shared implicitly. (See the IEEE Ethics Guidelines for AI Systems.)

Modern contributor and metadata workflows are increasingly handled through structured platforms such as Yugo, FutureBeeAI’s AI data platform. When tools are introduced too late, they accelerate failure rather than prevent it. Tooling is not an optimization layer. It is part of the ethical infrastructure that decides whether systems can remember what people no longer can.

The reframe and the real question

Ethical AI does not fail because teams stop caring.

It fails because caring is not a scalable architecture.

People change roles. Teams grow. Systems expand. Belief fades. Infrastructure persists. If ethics lives only in human judgment, it disappears under pressure. If it lives in systems, it survives.

That is the reframe required when moving from pilot to production.

The question is no longer whether a team has ethical intent.

It is whether their systems can still demonstrate that intent when scale, complexity, and pressure increase.

If you are building or buying AI at scale, contact FutureBeeAI.

The most important question is not “Are we ethical?”

It is “Can our systems still prove it when pressure increases?”