How can LLMs be trained on specialized domains like medicine or law?

Large Language Models (LLMs) can be adapted to specialized fields such as medicine and law by combining careful dataset design, domain adaptation techniques, and expert input. The key approaches below help an LLM handle a field's terminology, conventions, and accuracy requirements.

Leveraging Domain-Specific Data

The foundation of domain adaptation lies in the use of relevant textual data:

- Domain-specific datasets: Curate and incorporate corpora that reflect the target domain, such as peer-reviewed medical journals, legal contracts, clinical records, or statutory documents. FutureBeeAI offers healthcare and BFSI (banking, financial services, and insurance) speech datasets that can supply domain conversations once transcribed, including Healthcare Call Center Speech Data and BFSI Call Center Speech Data.

- Domain-specific vocabulary: Make sure the training data covers the field's specialized terminology and phrasing, for example by using curated data gathered through speech data collection services, so the model learns to use field-specific language correctly.
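
One way to check which specialized terms a curated corpus actually covers is to compare how often each word appears in the domain data versus a general-purpose corpus. The sketch below is a minimal illustration under stated assumptions: the function names `tokenize` and `domain_terms` are hypothetical, the add-one-smoothed log-ratio score is just one simple choice among many, and the two tiny corpora are made-up examples rather than real datasets.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase and keep runs of letters, digits, and hyphens (so "beta-blocker" stays one token).
    return re.findall(r"[a-z0-9-]+", text.lower())


def domain_terms(domain_docs, general_docs, top_k=10, min_count=2):
    """Rank terms that are far more frequent in the domain corpus than in a general one.
    Hypothetical helper: the scoring rule is an illustrative assumption."""
    dom = Counter(t for doc in domain_docs for t in tokenize(doc))
    gen = Counter(t for doc in general_docs for t in tokenize(doc))
    dom_total, gen_total = sum(dom.values()), sum(gen.values())
    vocab_size = len(set(dom) | set(gen))
    scores = {}
    for term, count in dom.items():
        if count < min_count:  # skip rare terms whose ratio would be noisy
            continue
        # Add-one smoothing gives terms absent from the general corpus a finite score.
        p_dom = (count + 1) / (dom_total + vocab_size)
        p_gen = (gen[term] + 1) / (gen_total + vocab_size)
        scores[term] = math.log(p_dom / p_gen)
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


# Made-up toy corpora for illustration only.
medical = [
    "Patient presents with tachycardia and hypertension.",
    "Tachycardia resolved after beta-blocker therapy; hypertension persists.",
]
general = [
    "The patient dog waited by the door.",
    "The weather persists and the door stays shut.",
]
terms = domain_terms(medical, general, top_k=3)
print(terms)  # → ['tachycardia', 'hypertension']
```

Terms that score high but are missing from the planned training data point to gaps worth filling with additional curated material.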

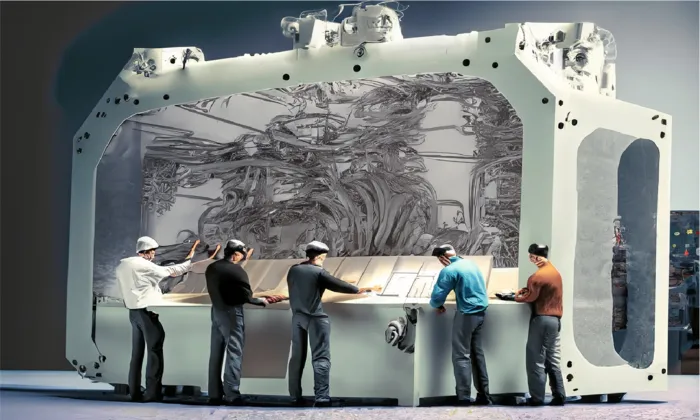

Fine-Tuning for Precision

Pre-trained LLMs can be refined using techniques designed to adjust model behavior toward a target domain:

- Fine-tuning: Continue training a pre-trained model on domain-aligned datasets so it internalizes the context, syntax, and semantics unique to the field. This works best when backed by structured AI/ML data collection that supplies consistent, representative examples.

- Domain-specific pre-training: Before fine-tuning on a specific task, continue pre-training the model on large-scale, domain-relevant corpora (for example, clinical literature or case law) to strengthen its foundational understanding of the field.
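
Both stages need the data shaped for them: continued pre-training typically consumes a long token stream cut into fixed-length blocks, while supervised fine-tuning consumes instruction and answer pairs. The following is a minimal sketch, assuming whitespace splitting as a stand-in for a real subword tokenizer and a chat-style `messages` record layout; the exact schema depends on the training framework, and the helper names `pack_for_pretraining` and `to_sft_jsonl` are hypothetical.

```python
import json


def pack_for_pretraining(docs, block_size, eos="</s>"):
    """Concatenate documents into one token stream, marking each document's end,
    then cut the stream into fixed-length blocks for continued pre-training."""
    stream = []
    for doc in docs:
        stream.extend(doc.split())  # whitespace split stands in for a subword tokenizer
        stream.append(eos)
    n_full = len(stream) // block_size  # the short trailing remainder is dropped
    return [stream[i * block_size:(i + 1) * block_size] for i in range(n_full)]


def to_sft_jsonl(pairs, system_prompt):
    """Convert (instruction, expert answer) pairs into chat-style JSONL for supervised
    fine-tuning. The "messages"/"role"/"content" field names are an assumed layout."""
    records = []
    for instruction, answer in pairs:
        records.append(json.dumps({
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": instruction},
                {"role": "assistant", "content": answer},
            ]
        }))
    return "\n".join(records)


corpus = ["The lessee shall pay rent monthly.", "Notice must be given in writing."]
blocks = pack_for_pretraining(corpus, block_size=4)
print(blocks[1])  # → ['rent', 'monthly.', '</s>', 'Notice']

sft = to_sft_jsonl(
    [("Summarize: The lessee shall pay rent monthly.", "The tenant must pay rent every month.")],
    system_prompt="You are a careful legal assistant.",
)
print(sft)
```

Dropping the final partial block keeps every training sequence the same length; padding it instead is an equally valid choice.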

Enhancing Relevance Through Adaptation Techniques

Several learning strategies help increase adaptability across complex domains:

- Transfer learning: Apply learned representations from general tasks to improve performance in specialized use cases with limited labeled data.

- Multi-task learning: Train on related tasks such as classification and question answering simultaneously to reinforce contextual understanding.

- Prompt engineering: Design prompts, for example task instructions paired with a few worked domain examples, that steer the model toward domain-specific intent and output structure without changing its weights.
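
A common prompt-engineering pattern is few-shot prompting: state the task and the allowed outputs, show a handful of worked domain examples, then present the new input with an empty answer slot. The sketch below assembles such a prompt for contract-clause classification; the helper name `build_few_shot_prompt`, the clause texts, the label set, and the template wording are all illustrative assumptions, not a prescribed format.

```python
def build_few_shot_prompt(task, examples, query, label_set):
    """Assemble a few-shot classification prompt: instructions, allowed labels,
    worked examples, then the new input followed by an empty "Label:" slot."""
    lines = [f"Task: {task}", f"Allowed labels: {', '.join(label_set)}", ""]
    for text, label in examples:
        # Reject examples that would teach the model a label it is not allowed to output.
        if label not in label_set:
            raise ValueError(f"example label {label!r} is not in the allowed label set")
        lines += [f"Clause: {text}", f"Label: {label}", ""]
    lines += [f"Clause: {query}", "Label:"]
    return "\n".join(lines)


# Hypothetical clauses and labels for illustration only.
prompt = build_few_shot_prompt(
    task="Classify each contract clause by type.",
    examples=[
        ("Either party may terminate this Agreement with 30 days' written notice.", "termination"),
        ("Recipient shall not disclose Confidential Information to third parties.", "confidentiality"),
    ],
    query="This Agreement is governed by the laws of the State of New York.",
    label_set=["termination", "confidentiality", "governing_law"],
)
print(prompt)
```

Listing the allowed labels up front and ending on an open "Label:" line nudges the model to answer with one of the expected categories, which makes its output easier to parse and audit.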

Quality Through Expert-Led Annotation

In regulated or high-stakes sectors, expert-driven supervision helps ensure dataset accuracy:

- Expert annotations: Engage qualified professionals to label content with context-sensitive precision. FutureBeeAI supports this through its audio annotation services, ideal for capturing the nuances of clinical, legal, and technical discourse.
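
One way to verify that expert annotations are consistent is to have two experts label the same items and measure how often they agree beyond chance. The sketch below computes Cohen's kappa, a standard chance-corrected agreement statistic, defined as kappa = (p_o - p_e) / (1 - p_e). Using it as the quality check here, the function name, and the example labels are illustrative assumptions rather than a description of any particular annotation workflow.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labelling the same items.
    p_o is the observed agreement; p_e is the agreement expected by chance
    from each annotator's own label frequencies."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        return 1.0  # convention: both used the same single label, so agreement is trivial
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels from two clinicians tagging the same ten transcript snippets.
clinician_1 = ["symptom", "symptom", "medication", "diagnosis", "symptom",
               "medication", "diagnosis", "symptom", "medication", "symptom"]
clinician_2 = ["symptom", "medication", "medication", "diagnosis", "symptom",
               "medication", "symptom", "symptom", "medication", "symptom"]
kappa = cohens_kappa(clinician_1, clinician_2)
print(round(kappa, 3))  # → 0.672
```

Here the annotators agree on 8 of 10 items (p_o = 0.8), but chance alone would produce p_e = 0.39, so kappa is noticeably lower than raw agreement. A low kappa may indicate that the labelling guidelines or category definitions need revisiting before the data is used for training.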

Outcome

By combining domain-specific datasets, expert annotations, and adaptable learning strategies, LLMs can be trained to interpret and generate accurate, context-aware responses within specialized fields. These approaches are critical for applications such as clinical decision support, contract summarization, or compliance monitoring.

With structured pipeline support from FutureBeeAI, including speech data resources and the YUGO data platform, enterprises can build models that meet the precision, trust, and compliance standards demanded in domain-specific deployments.
