How do annotation algorithms reinforce or reduce bias?

Annotation algorithms sit at the heart of AI development and can either amplify or reduce bias in datasets. Understanding both effects is essential for AI engineers and product managers who want fair, effective models. This article covers the mechanisms by which annotation influences bias and actionable strategies for mitigation.

The Importance of Addressing Bias in AI

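The societal impact of biased AI models is profound. The MIT Media Lab's Gender Shades study (Buolamwini and Gebru, 2018) found that commercial facial analysis systems were markedly less accurate for women and for people with darker skin, with the highest error rates for darker-skinned women. The result underscores the tangible consequences of bias.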

For AI practitioners, ensuring the integrity of annotation processes is not just a technical challenge. It is a responsibility with real-world implications.

Mechanisms of Bias Reinforcement

Labeler Bias: Human annotators bring their own biases into the annotation process. For example, if annotators associate certain ethnic groups with specific stereotypes, that association enters the labels and skews the training data.

Training Set Imbalance: Algorithms trained on skewed datasets replicate those biases. A speech recognition system primarily trained on male voices may fail to accurately transcribe female voices or diverse accents, leading to biased outcomes.

Ambiguous Guidelines: Vague annotation instructions result in inconsistencies. When annotators interpret unclear guidelines differently, the same kind of item receives conflicting labels, and that noise confuses AI models during training.
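Training set imbalance is the easiest of these mechanisms to check directly. The following is a minimal sketch, assuming each record carries a metadata field for the attribute of interest; the field name `speaker_gender`, the sample data, and the 30% threshold are all hypothetical choices for illustration, not values from any particular dataset or tool.

```python
from collections import Counter


def group_shares(records, key):
    """Return each group's share of the dataset for the given metadata key."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares, min_share):
    """List groups whose share falls below a chosen threshold."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical speech samples with a self-reported speaker attribute.
samples = (
    [{"speaker_gender": "male"}] * 8
    + [{"speaker_gender": "female"}] * 2
)

shares = group_shares(samples, "speaker_gender")
flagged = underrepresented(shares, min_share=0.3)  # threshold is an assumption
print(shares, flagged)
```

A share below the threshold does not prove the model will be biased, but it marks where to collect or annotate more data before training.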

Strategies to Reduce Bias through Annotation

Diverse Annotation Teams: Employing annotators from varied backgrounds brings multiple perspectives to the labels and reduces the risk of a single narrative dominating the dataset.

Clear Guidelines and Comprehensive Training: Providing explicit instructions and conducting bias-awareness training empowers annotators to identify and mitigate their own biases.

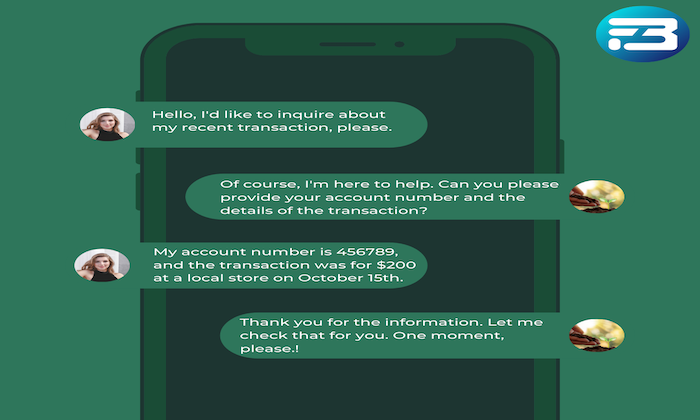

Algorithmic Monitoring and Feedback: Implement quality control systems to detect and correct bias. Platforms like FutureBeeAI’s Yugo track annotation consistency, offering insights into individual annotator approaches and enabling necessary adjustments.
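One common way to quantify annotation consistency is Cohen's kappa, which measures how often two annotators agree beyond what chance would produce. The sketch below is an illustration of that general statistic, not a description of how any specific platform computes consistency; the function name and the sample labels are assumptions.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random
    # according to their own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same four items.
annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(kappa)
```

Persistently low kappa for one pair of annotators, or for one category of items, usually points to an unclear guideline or a systematic difference in interpretation that is worth reviewing.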

Practical Takeaways for AI Practitioners

To effectively mitigate bias, AI teams should:

Invest in thorough training to heighten annotators' awareness of bias.

Develop structured annotation guidelines that emphasize clarity and inclusivity.

Regularly audit datasets to identify and rectify bias, ensuring continuous improvement.
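A dataset audit can be as simple as comparing annotation error rates across groups on a reviewed sample. The following is a rough sketch, assuming a subset of items has been re-checked by experts to produce a trusted `gold` label; the field names `group`, `label`, and `gold` and the sample rows are hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(rows):
    """Fraction of items per group whose label disagrees with the gold label."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for row in rows:
        totals[row["group"]] += 1
        if row["label"] != row["gold"]:
            errors[row["group"]] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: transcription quality labels for two accents.
audit_sample = [
    {"group": "accent_a", "label": "ok", "gold": "ok"},
    {"group": "accent_a", "label": "ok", "gold": "ok"},
    {"group": "accent_a", "label": "ok", "gold": "ok"},
    {"group": "accent_a", "label": "unclear", "gold": "ok"},
    {"group": "accent_b", "label": "unclear", "gold": "ok"},
    {"group": "accent_b", "label": "unclear", "gold": "ok"},
    {"group": "accent_b", "label": "ok", "gold": "ok"},
    {"group": "accent_b", "label": "ok", "gold": "ok"},
]

rates = error_rate_by_group(audit_sample)
gap = max_gap(rates)
print(rates, gap)
```

Tracking the gap across successive audits gives a team a concrete number to watch, though what counts as an acceptable gap depends on the task and has to be decided case by case.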

By embracing these strategies, AI practitioners can create datasets that better reflect diverse human experiences, ultimately leading to more equitable AI systems.

Conclusion

Annotation algorithms are pivotal in shaping the fairness of AI models. Through proactive bias mitigation strategies, AI teams can ensure that their models are not only efficient but also just.

Continuous vigilance and commitment to diversity and bias reduction are crucial for developing AI that serves all communities equitably.
