Why does TTS evaluation need to be repeated over time?


In AI systems, stability is temporary. Adaptation is continuous.

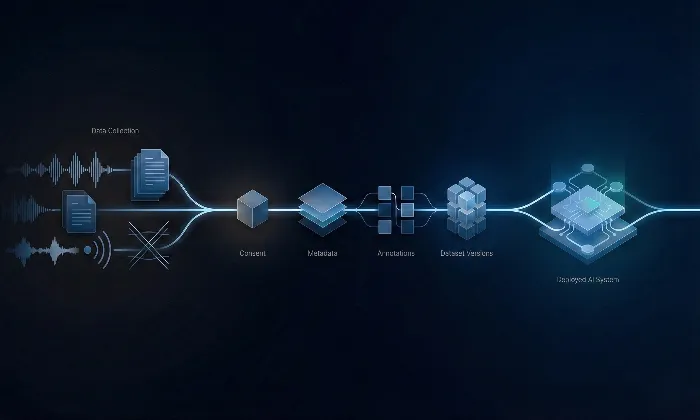

Text-to-Speech (TTS) systems operate in dynamic environments where user expectations, linguistic patterns, and competitive benchmarks evolve continuously. Continuous evaluation ensures that performance does not stagnate while the external landscape advances.

Rising Standards of Naturalness

User perception of what sounds natural is not static. As new models improve expressiveness, pacing, and emotional realism, baseline expectations shift upward.

Continuous evaluation tracks whether a TTS system remains aligned with contemporary standards rather than legacy benchmarks.

Silent Regression Detection

Performance degradation is often gradual rather than abrupt.

Subtle shifts such as unnatural pauses, tonal flattening, or pronunciation inconsistencies may not trigger automated alerts. Regular structured evaluations surface these perceptual drifts before they compound into user dissatisfaction.
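A minimal sketch of why per-round alerts can miss this kind of drift. It assumes each evaluation round produces a single mean opinion score (MOS) on a 1 to 5 scale. The scores, thresholds, and the helper names `step_alerts` and `drift_from_baseline` are hypothetical and exist only for illustration.

```python
from statistics import mean


def step_alerts(scores, max_step_drop=0.2):
    """Return indices of rounds whose MOS fell by more than max_step_drop
    compared with the immediately preceding round (a naive per-round alert)."""
    return [i for i in range(1, len(scores)) if scores[i - 1] - scores[i] > max_step_drop]


def drift_from_baseline(scores, baseline_rounds=3, window=3, max_total_drop=0.2):
    """Compare the mean of the most recent `window` rounds with the mean of the
    first `baseline_rounds` rounds, so small declines accumulate into one signal."""
    baseline = mean(scores[:baseline_rounds])
    recent = mean(scores[-window:])
    drop = baseline - recent
    return drop > max_total_drop, round(drop, 3)


# Hypothetical MOS history: every round is only slightly worse than the last.
mos = [4.30, 4.28, 4.26, 4.21, 4.16, 4.11, 4.06, 4.01]
print(step_alerts(mos))
print(drift_from_baseline(mos))
```

With these illustrative numbers, no single round drops by more than 0.2, so `step_alerts` stays silent. The cumulative comparison against the early baseline reports a drop of about 0.22 and flags the drift.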

Linguistic Expansion and Adaptation

As systems encounter new dialects, accents, jargon, or domain-specific terminology, evaluation frameworks must validate adaptability.

Continuous testing signals when retraining or fine-tuning is necessary to maintain pronunciation precision, prosodic consistency, and contextual accuracy.
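One way to make that signal concrete is sketched below. It assumes that listeners mark each synthesized test item as correctly or incorrectly pronounced and that each item is tagged with a domain. The domain labels, the 0.95 accuracy threshold, and the function names are hypothetical.

```python
from collections import defaultdict


def domain_accuracy(judgments):
    """judgments: iterable of (domain, is_correct) pairs from listener checks.
    Returns the share of correctly pronounced items per domain."""
    totals = defaultdict(lambda: [0, 0])  # domain -> [correct, total]
    for domain, is_correct in judgments:
        totals[domain][0] += int(is_correct)
        totals[domain][1] += 1
    return {d: correct / total for d, (correct, total) in totals.items()}


def domains_needing_update(judgments, min_accuracy=0.95):
    """List domains whose pronunciation accuracy falls below the threshold,
    i.e. candidates for retraining or fine-tuning."""
    accuracy = domain_accuracy(judgments)
    return sorted(d for d, a in accuracy.items() if a < min_accuracy)


# Hypothetical round: general vocabulary is fine, new medical terms are not.
judgments = [("general", True)] * 20 + [("medical", True)] * 16 + [("medical", False)] * 4
print(domain_accuracy(judgments))
print(domains_needing_update(judgments))
```

Keeping results per domain, rather than as one pooled score, stops a large volume of familiar text from masking weak performance on newly added terminology.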

Human Perception as a Stability Anchor

Automated metrics provide numerical snapshots. They do not fully capture emotional tone, contextual appropriateness, or cultural resonance.

Periodic human evaluation, especially involving native speakers, helps ensure that perceptual quality keeps pace with technical performance.
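A minimal sketch of summarizing one round of human ratings per attribute. It assumes native-speaker listeners score each attribute on a 1 to 5 scale. The attribute names and ratings are hypothetical. The interval uses a simple normal approximation (z = 1.96), which is only a rough guide for small listener panels.

```python
from math import sqrt
from statistics import mean, stdev


def mos_with_ci(ratings, z=1.96):
    """Mean opinion score with an approximate 95% confidence interval
    (normal approximation; needs at least two ratings)."""
    m = mean(ratings)
    half_width = z * stdev(ratings) / sqrt(len(ratings))
    return round(m, 2), round(m - half_width, 2), round(m + half_width, 2)


def summarize_round(ratings_by_attribute):
    """Map each perceptual attribute to (MOS, lower bound, upper bound)."""
    return {attr: mos_with_ci(r) for attr, r in ratings_by_attribute.items()}


# Hypothetical ratings from eight listeners in one evaluation round.
round_ratings = {
    "naturalness": [4, 5, 4, 4, 5, 3, 4, 5],
    "prosody": [3, 4, 3, 3, 4, 3, 2, 4],
}
print(summarize_round(round_ratings))
```

Tracking these intervals across rounds gives a rough sense of whether an apparent change in an attribute is larger than the normal spread of listener ratings.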

Competitive Benchmarking Pressure

The TTS ecosystem evolves rapidly. New architectures and training approaches redefine quality thresholds.

Ongoing evaluation enables benchmarking against emerging standards, preventing stagnation and maintaining competitive relevance.

Practical Takeaway

TTS evaluation is not a milestone event. It is a lifecycle process.

Continuous validation protects against regression, adapts to linguistic evolution, and preserves the credibility of the listening experience.

At FutureBeeAI, structured continuous evaluation frameworks combine perceptual diagnostics, attribute-level monitoring, and longitudinal performance tracking to ensure TTS systems remain stable, relevant, and aligned with real-world user expectations.
