How does task design affect contributor stress and accuracy?

In the realm of AI, task design is a critical factor that can make or break the integrity of your data collection process. At its core, task design is about structuring assignments so they are clear, realistic, and cognitively manageable for contributors. This directly affects not only data accuracy but also the mental well-being of the people doing the work.

Understanding this relationship is essential for building a sustainable, high-quality data pipeline.

The Role of Task Design in Influencing Stress and Accuracy

Effective task design goes beyond writing instructions. It requires careful consideration of contributor cognitive load, attention span, and engagement. Poorly designed tasks, those that are vague, overly complex, or time-pressured, often lead to confusion and frustration. Elevated stress levels, in turn, reduce accuracy and consistency.

When contributors struggle to interpret expectations, error rates rise, rework increases, and long-term participation declines. Over time, this disrupts project continuity and undermines dataset reliability.

The Stakes of Poor Task Design

Inadequate task design has clear and measurable consequences:

Increased error rates: Stress encourages rushing and misinterpretation, directly harming data accuracy.

Contributor burnout: Repeated exposure to stressful tasks leads to fatigue, disengagement, and higher attrition.

Lower-quality insights: Data produced under pressure is often inconsistent, weakening the AI models trained on it.

These outcomes are costly not only in terms of data quality, but also in contributor trust and operational efficiency.

Key Strategies for Effective Task Design

Prioritize clarity over complexity: Instructions should be simple, explicit, and broken into smaller steps. Visual examples, edge-case explanations, and clear success criteria help contributors work confidently and accurately.

Introduce task variation: Monotony increases cognitive fatigue. Rotating task types or offering limited choice between tasks improves engagement and reduces stress, leading to more consistent performance.

Implement feedback mechanisms: Regular, constructive feedback helps contributors improve and feel supported. Knowing how their work is evaluated reduces anxiety and increases accuracy over time.

Conduct stress-response testing: Pilot tasks before full rollout. Track completion times, error patterns, and contributor questions to identify stress points early and refine task design accordingly.

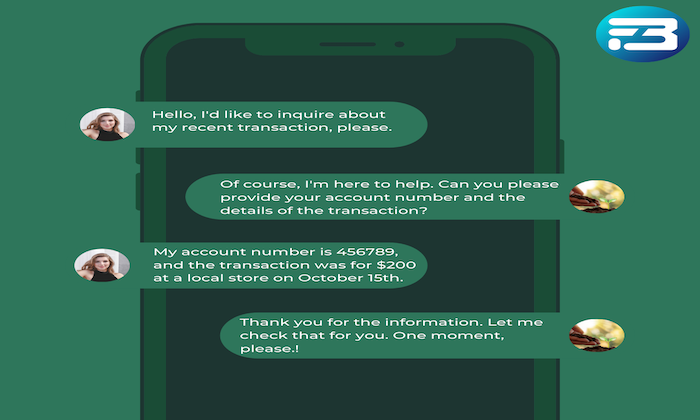

Create support systems: Accessible help channels, such as chat support or community forums, allow contributors to seek clarification quickly, preventing errors caused by uncertainty.
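The pilot-testing strategy above (tracking completion times, error patterns, and contributor questions) can be sketched in a few lines of Python. This is only an illustrative sketch, not a description of any particular platform: the `PilotRecord` fields, the function names, and the threshold values are all assumptions made for the example, and real thresholds would need to be calibrated per project.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class PilotRecord:
    """One pilot submission. All field names are hypothetical."""
    task_id: str
    seconds: float      # time the contributor spent on the submission
    had_error: bool     # whether review found an error
    questions: int      # clarification questions raised for this submission


def summarize_pilot(records):
    """Group pilot submissions by task and compute simple per-task metrics."""
    by_task = defaultdict(list)
    for record in records:
        by_task[record.task_id].append(record)

    summary = {}
    for task_id, rows in by_task.items():
        n = len(rows)
        summary[task_id] = {
            "submissions": n,
            "median_seconds": median(r.seconds for r in rows),
            "error_rate": sum(r.had_error for r in rows) / n,
            "questions_per_submission": sum(r.questions for r in rows) / n,
        }
    return summary


def flag_stress_points(summary, max_error_rate=0.15, max_questions=0.5):
    """Return task ids whose metrics exceed the (assumed) thresholds."""
    flagged = [
        task_id
        for task_id, stats in summary.items()
        if stats["error_rate"] > max_error_rate
        or stats["questions_per_submission"] > max_questions
    ]
    return sorted(flagged)
```

Running `flag_stress_points(summarize_pilot(records))` on pilot data gives a short list of tasks to revisit before full rollout. High error or question rates are only proxies for stress, so flagged tasks are best treated as prompts for a closer look at the instructions rather than as a verdict.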

Practical Takeaway

Contributor stress is not an abstract concern; it directly impacts data accuracy. Designing tasks that are clear, balanced, and well-supported improves both contributor well-being and dataset quality. Thoughtful task design creates conditions where contributors can focus, perform consistently, and stay engaged.

At FutureBeeAI, we treat task design as an ethical and operational priority. By reducing stress at the task level, we protect contributor dignity while ensuring the high-quality data required for responsible AI development.
