How do humans evaluate speaker similarity reliably?


Assessing speaker similarity goes beyond matching individual vocal traits. It means checking that a voice keeps the same recognizable identity across every interaction. In text-to-speech (TTS) applications, this consistency is crucial. Imagine a news anchor who sounds slightly different every day: the inconsistency undermines trust and engagement, and an inconsistent TTS voice disrupts the user experience in the same way.

The Importance of Speaker Consistency in TTS

In TTS systems, speaker identity consistency is pivotal. Users expect a seamless and familiar interaction, similar to conversing with someone they recognize. Inconsistent voice delivery can feel unstable and erode confidence in the system.

Speaker similarity is not merely a technical benchmark. It is a perceptual contract. Once users associate a voice with a brand, assistant, or persona, any deviation becomes noticeable. Evaluation frameworks must therefore protect that continuity across prompts, sessions, and updates.

Core Attributes for Evaluating Speaker Similarity

Speaker similarity is multi-dimensional. Listeners subconsciously evaluate several attributes when judging whether two samples represent the same identity.

Vocal timbre: The distinctive tonal texture of the voice. Even if pitch and words match, timbre differentiates identities.

Prosodic patterning: Rhythm, stress placement, and intonation contours. Identity is partly encoded in how speech flows, not just what is said.

Pronunciation stability: Consistent articulation patterns reinforce speaker identity. Variation in pronunciation can weaken perceived similarity.

Expressive range: Emotional delivery and energy levels should remain aligned with the intended persona. Sudden shifts can break identity continuity.

Long-form consistency: Voice characteristics must remain stable across extended passages, not just short clips.

Collapsing these attributes into a single similarity score can hide identity drift, so attribute-level inspection is essential.
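As a minimal sketch of attribute-level inspection, the Python below averages listener ratings per attribute instead of pooling them into one number, then flags the weakest dimensions. The attribute names, the 1-5 rating scale, and the 3.5 flagging threshold are illustrative assumptions, not a standard rubric.

```python
from statistics import mean

# Hypothetical attribute keys mirroring the attributes listed above.
ATTRIBUTES = ("timbre", "prosody", "pronunciation", "expressiveness", "long_form")


def attribute_profile(ratings):
    """Average each attribute separately.

    ratings: list of dicts, one per listener judgment, mapping every
    attribute name to a similarity rating (assumed 1-5 scale).
    """
    return {attr: mean(r[attr] for r in ratings) for attr in ATTRIBUTES}


def weakest_attributes(profile, threshold=3.5):
    """Return attributes whose mean falls below `threshold`, worst first."""
    flagged = [(score, attr) for attr, score in profile.items() if score < threshold]
    return [attr for _, attr in sorted(flagged)]


if __name__ == "__main__":
    # Two hypothetical listener judgments (illustrative data, not real results).
    ratings = [
        {"timbre": 5, "prosody": 4, "pronunciation": 5, "expressiveness": 4, "long_form": 2},
        {"timbre": 4, "prosody": 4, "pronunciation": 5, "expressiveness": 5, "long_form": 3},
    ]
    profile = attribute_profile(ratings)
    print(round(mean(profile.values()), 2))  # 4.1: a pooled score that looks healthy
    print(weakest_attributes(profile))       # ['long_form']
```

The pooled mean of 4.1 would pass most single-score checks, while the per-attribute view shows long-form consistency averaging only 2.5.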

Effective Methodologies for Speaker Evaluation

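Paired A/B Comparison: Evaluators hear a reference recording and two candidate samples, then select which candidate better matches the target identity. Forced-choice comparison avoids the scale bias of absolute ratings, where listeners interpret a rating scale differently, and yields direct similarity judgments.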

Attribute-Wise Structured Tasks: Listeners evaluate timbre alignment, prosodic consistency, and expressive stability separately. This diagnostic approach identifies which identity dimension may be drifting.

Native Evaluator Involvement: Native speakers detect subtle pronunciation and prosodic cues that define speaker authenticity within a language, grounding the evaluation in how real listeners of that language perceive the voice.

Reference-Based Calibration: Evaluators should regularly hear the original reference samples to prevent perceptual adaptation and internal drift.
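A minimal sketch of how paired A/B votes might be summarised, assuming each trial presents the reference plus two candidate samples (A and B) and the listener picks the closer match or declares a tie. It reports the preference rate and an exact two-sided binomial (sign) test against a 50/50 null; dropping ties is one common convention, assumed here rather than prescribed by the article.

```python
from math import comb


def ab_preference(votes):
    """Summarise paired A/B trials.

    votes: list of "A", "B", or "tie", one per trial in which a listener heard
    the reference and chose the candidate that better matched it.
    Returns (share of decisive votes for A, two-sided exact binomial p-value).
    """
    a = votes.count("A")
    b = votes.count("B")
    n = a + b  # ties are excluded (an assumed convention)
    if n == 0:
        raise ValueError("no decisive votes to test")
    # Sign test: probability of a split at least this lopsided under a 50/50 null,
    # doubled for a two-sided test and capped at 1.
    k = min(a, b)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    p_value = min(1.0, 2 * tail)
    return a / n, p_value


if __name__ == "__main__":
    votes = ["A"] * 15 + ["B"] * 5 + ["tie"] * 2  # hypothetical trial outcomes
    rate, p = ab_preference(votes)
    print(rate, round(p, 3))  # 0.75 0.041
```

The test treats trials as independent. Repeated votes from the same listener are not fully independent, so larger studies may call for a model that accounts for listener and item effects.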

Avoiding Common Evaluation Missteps

Over-reliance on aggregated metrics such as Mean Opinion Score (MOS), which typically rates overall naturalness or quality, obscures identity-specific nuances. A high MOS does not guarantee speaker similarity. A voice can sound pleasant yet fail to match the intended persona.

Evaluator fatigue is another risk. Identity differences are often subtle. Long evaluation sessions reduce perceptual sensitivity. Rotating evaluators, embedding calibration samples, and scheduling breaks preserve reliability.
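One way to operationalise embedded calibration samples is to track each rater's accuracy on hidden items with a known answer (for example, the reference paired with itself, or with a clearly different speaker), split by position in the session so that late-session drops point to fatigue. The tuple layout, the 20-trial block size, and the 0.8 accuracy floor below are hypothetical choices for illustration.

```python
from collections import defaultdict


def calibration_accuracy(trials, block_size=20):
    """Accuracy on embedded calibration trials, per rater and per session block.

    trials: list of (rater_id, trial_index, is_calibration, correct) tuples,
    where trial_index counts trials within a session and `correct` is only
    meaningful for calibration items with a known answer.
    """
    hits = defaultdict(lambda: [0, 0])  # (rater, block) -> [correct, total]
    for rater, idx, is_cal, correct in trials:
        if not is_cal:
            continue  # ordinary trials have no known answer
        key = (rater, idx // block_size)
        hits[key][0] += int(correct)
        hits[key][1] += 1
    return {key: c / t for key, (c, t) in hits.items()}


def flag_fatigue(accuracy, min_accuracy=0.8):
    """Return (rater, block) pairs whose calibration accuracy fell below the floor."""
    return sorted(key for key, acc in accuracy.items() if acc < min_accuracy)


if __name__ == "__main__":
    # Hypothetical session for one rater: (rater, trial_index, is_calibration, correct)
    trials = [
        ("r1", 3, True, True),
        ("r1", 5, False, False),  # ordinary trial, ignored here
        ("r1", 10, True, True),
        ("r1", 25, True, True),
        ("r1", 33, True, False),
        ("r1", 38, True, False),
    ]
    acc = calibration_accuracy(trials)
    print(flag_fatigue(acc))  # [('r1', 1)]
```

Here the rater is perfect on early calibration items but misses two of three in trials 20 to 39, so block 1 is flagged, suggesting a break or rotation before that rater's later judgments are trusted.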

Ignoring long-form samples is also problematic. Identity drift frequently appears across extended passages rather than isolated sentences.
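To make long-form drift measurable, one option (an assumption, not a method the article prescribes) is to split a long passage into consecutive segments, have listeners rate each segment against the same reference, and fit a least-squares slope of rating versus segment position. A clearly negative slope indicates the voice moves away from the reference as the passage continues, even when the opening segments score well. The `max_drop_per_segment` tolerance is a hypothetical placeholder.

```python
from statistics import mean


def drift_slope(segment_scores):
    """Least-squares slope of similarity rating against segment position.

    segment_scores[i] is the mean listener rating (assumed 1-5 scale) for the
    i-th consecutive segment of one long-form passage, each judged against
    the same reference recording.
    """
    n = len(segment_scores)
    if n < 2:
        raise ValueError("need at least two segments")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(segment_scores)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, segment_scores))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def drifts(segment_scores, max_drop_per_segment=0.1):
    """True if ratings fall faster than the tolerated rate per segment."""
    return drift_slope(segment_scores) < -max_drop_per_segment


if __name__ == "__main__":
    scores = [4.6, 4.5, 4.3, 4.0, 3.6]  # hypothetical per-segment ratings
    print(round(drift_slope(scores), 2))  # -0.25
    print(drifts(scores))                 # True
```

A listener who heard only the first segment would have rated this voice 4.6; the slope exposes the decline that a single short clip hides.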

Practical Takeaway

Speaker similarity evaluation requires structured perceptual testing, not surface-level approval. Attribute-wise diagnostics, comparative listening tasks, and native evaluator insights help ensure identity continuity remains intact across iterations.

At FutureBeeAI, structured evaluation methodologies and supporting tooling help maintain stable speaker identity across TTS deployments, preserving voice consistency and user trust at scale.

FAQs

Q. What are the best tools for evaluating speaker similarity?

A. Effective tools support paired A/B comparisons, attribute-wise structured evaluation, and reference-based calibration. Platforms that enable structured perceptual diagnostics deliver more reliable identity assessments than single-score systems.

Q. How frequently should speaker similarity be assessed?

A. Speaker similarity should be evaluated before major releases and after model updates, data refreshes, or fine-tuning cycles. Periodic re-evaluation also helps detect gradual identity drift over time.
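Periodic re-evaluation catches gradual drift only if each new score is compared with the original baseline as well as the previous release, because a series of small, individually acceptable drops can add up. The sketch below gates a release on both checks. It assumes all scores come from the same fixed test set and a comparable listener pool; the thresholds are placeholders to tune.

```python
def check_release(history, new_score, max_step_drop=0.1, max_total_drop=0.2):
    """Gate a release on speaker-similarity scores.

    history: similarity scores (e.g. mean ratings on a fixed test set) of
    previously accepted versions, oldest first; history[0] is the baseline.
    Returns a list of problems; an empty list means the release passes.
    """
    if not history:
        raise ValueError("need at least a baseline score")
    step_drop = history[-1] - new_score  # change since the previous accepted version
    total_drop = history[0] - new_score  # change since the original baseline
    problems = []
    if step_drop > max_step_drop:
        problems.append("regression vs previous release")
    if total_drop > max_total_drop:
        problems.append("cumulative drift vs baseline")
    return problems


if __name__ == "__main__":
    history = [4.5, 4.42, 4.35, 4.28]  # hypothetical accepted-version scores
    print(check_release(history, 4.22))  # ['cumulative drift vs baseline']
```

Each step in this hypothetical history drops by less than 0.1, so a previous-release check alone would never fire, while the 0.28 drop from the baseline is reported as cumulative drift.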

What Else Do People Ask?

Related AI Articles

Browse Matching Datasets

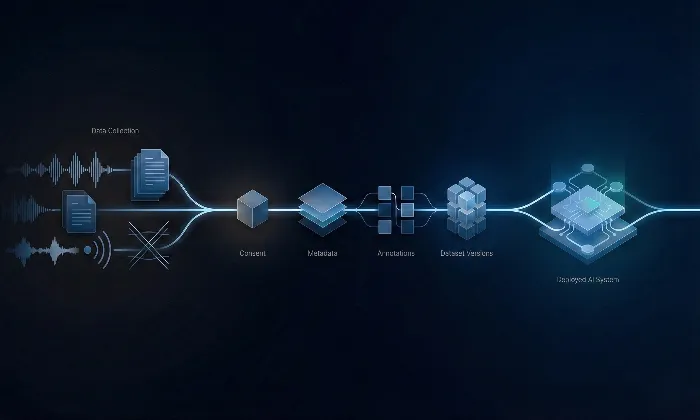

Acquiring high-quality AI datasets has never been easier!!!

Get in touch with our AI data expert now!