What Are the Challenges in Labeling Noisy Call Center Audio?

Accurate annotation of call center audio is essential for training speech-based AI systems, especially in regulated or high-volume domains like finance, telecom, and healthcare. However, background interference in recordings often compromises annotation quality. At FutureBeeAI, we approach this challenge through a structured, quality-first strategy to ensure reliable, production-grade datasets.

Noise Types in Call Center Recordings

Background Noise

Ambient disruptions such as keyboard clicks, room chatter, or traffic interfere with the clarity of spoken interactions.
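
The level of background noise is commonly quantified as a signal-to-noise ratio, SNR_dB = 10 * log10(P_signal / P_noise). Below is a minimal sketch. It assumes float PCM samples and assumes that a speech-free pause from the same call can serve as the noise reference. The function names and the 10 dB review threshold are hypothetical illustrations, not FutureBeeAI tooling.

```python
import math

def power(samples):
    """Mean squared amplitude of a sequence of float samples."""
    if not samples:
        raise ValueError("empty sample sequence")
    return sum(x * x for x in samples) / len(samples)

def snr_db(speech_segment, noise_segment):
    """Estimate SNR in dB from a speech segment and a noise-only segment.

    speech_segment contains speech plus background noise, so the noise power
    is subtracted to approximate the power of the speech alone.
    """
    p_mix = power(speech_segment)
    p_noise = power(noise_segment)
    if p_noise == 0:
        return math.inf
    p_signal = max(p_mix - p_noise, 0.0)
    if p_signal == 0:
        return -math.inf
    return 10 * math.log10(p_signal / p_noise)

def is_low_snr(value_db, threshold_db=10.0):
    """Hypothetical rule: flag clips below threshold_db for extra review."""
    return value_db < threshold_db
```

For example, `snr_db([1.0, -1.0, 1.0, -1.0], [0.1, -0.1])` returns about 20 dB, so `is_low_snr` would not flag that clip.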

Overlapping Speech

Speakers talking over each other, which is common in escalation scenarios, make it harder to separate individual turns and label each one accurately.
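
To route overlapping speech for closer review, it first has to be located. The sketch below is a standard two-pointer interval intersection. It assumes that each speaker's talk time is already available as sorted, non-overlapping (start, end) segments in seconds, for example from diarization or per-channel VAD. The function name is hypothetical.

```python
from typing import List, Tuple

Segment = Tuple[float, float]  # (start_seconds, end_seconds)

def overlapping_regions(speaker_a: List[Segment], speaker_b: List[Segment]) -> List[Segment]:
    """Return the time intervals where both speakers are talking at once."""
    a, b = sorted(speaker_a), sorted(speaker_b)
    overlaps: List[Segment] = []
    i = j = 0
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            overlaps.append((start, end))
        # Advance whichever segment ends first; it cannot overlap anything later.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return overlaps
```

For an agent speaking at (0, 5) and (8, 12) and a customer speaking at (4, 9) and (11, 15), the overlaps are (4, 5), (8, 9), and (11, 12).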

Audio Artifacts

Issues like microphone distortion, echo, or packet loss introduce irregularities that obscure phonetic and semantic cues.
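
Some of these artifacts can be detected cheaply before annotation. The sketch below shows two simple heuristics. Microphone distortion often appears as clipping, which shows up as samples pinned at full scale. Packet loss, in some pipelines, appears as runs of exact-zero samples. Both checks assume float samples in [-1.0, 1.0]. Concealment schemes can mask dropouts, so these checks are rough screens rather than reliable detectors, and the function names and thresholds are hypothetical.

```python
def clipping_ratio(samples, full_scale=1.0, tolerance=1e-3):
    """Fraction of samples at or within `tolerance` of full scale."""
    if not samples:
        return 0.0
    limit = full_scale - tolerance
    clipped = sum(1 for x in samples if abs(x) >= limit)
    return clipped / len(samples)

def zero_runs(samples, min_run):
    """Return (start_index, length) for each run of exact zeros of at least min_run samples."""
    runs = []
    start = None
    for i, x in enumerate(samples):
        if x == 0:
            if start is None:
                start = i  # a new run of zeros begins
        else:
            if start is not None and i - start >= min_run:
                runs.append((start, i - start))
            start = None
    # Handle a run that extends to the end of the clip.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs
```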

Labeling Challenges in Noisy Environments

Reduced Transcription Accuracy

Noise increases the likelihood of transcription errors, which can degrade dataset quality and hinder downstream model performance.
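
Transcription accuracy is usually measured as word error rate, WER = (S + D + I) / N. Here S, D, and I are the word substitutions, deletions, and insertions needed to turn the hypothesis into the reference, and N is the number of reference words. The sketch below is a minimal word-level edit-distance implementation. It assumes that a verified reference transcript exists, for example one adjudicated during QA, and it applies only lowercasing and whitespace splitting as normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER between a reference transcript and a hypothesis transcript."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[len(hyp)] / len(ref)
```

For instance, a noisy line heard as "i want to cancel the card" instead of "I want to cancel my card" contains one substitution in six words, giving a WER of about 0.17.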

Ambiguity in Intent Annotation

Unclear speech makes it difficult to label user intent accurately, particularly when distinguishing between related categories like complaints and inquiries.

Inconsistent Speaker Identification

Noise interferes with speaker diarization, making it harder to attribute speech to the correct participant. This is especially true in calls with multiple agents, or in dual-channel recordings where one party's voice bleeds into the other party's channel.
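
In a dual-channel recording, a simple attribution heuristic is to compare the per-frame level of the agent and customer channels. A frame is assigned to the louder channel only when it is clearly louder, and close calls are marked as ambiguous for a human to resolve. The sketch below assumes time-aligned float channels. The 6 dB margin, the silence floor, and the function names are hypothetical choices, not a documented FutureBeeAI method.

```python
import math

def frame_rms(samples, start, length):
    """RMS level of samples[start:start + length]."""
    chunk = samples[start:start + length]
    return math.sqrt(sum(x * x for x in chunk) / len(chunk)) if chunk else 0.0

def attribute_frames(agent, customer, frame_len=160, min_ratio_db=6.0, silence_rms=1e-3):
    """Label each full frame as 'agent', 'customer', 'silence', or 'ambiguous'."""
    labels = []
    n = min(len(agent), len(customer))
    for start in range(0, n - frame_len + 1, frame_len):
        ea = frame_rms(agent, start, frame_len)
        ec = frame_rms(customer, start, frame_len)
        if max(ea, ec) < silence_rms:
            labels.append("silence")
            continue
        # RMS is an amplitude, so the level difference in dB uses 20 * log10.
        diff_db = 20 * math.log10(max(ea, 1e-12) / max(ec, 1e-12))
        if diff_db >= min_ratio_db:
            labels.append("agent")
        elif diff_db <= -min_ratio_db:
            labels.append("customer")
        else:
            labels.append("ambiguous")  # likely crosstalk or bleed: send to review
    return labels
```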

Strategies for Ensuring Annotation Quality

Preprocessing Before Annotation

We apply denoising filters, automatic gain control, and voice activity detection to enhance audio clarity before it reaches annotators.
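
Two of these steps can be sketched with the standard library alone. In the sketch below, a static gain normalization stands in for automatic gain control: it scales the whole clip toward a target level, whereas real AGC adapts over time. An energy-based VAD then marks frames that are well above the clip's quietest frame. Spectral denoising is omitted because it needs a signal-processing library. The target level, frame size, threshold ratio, and function names are all hypothetical.

```python
import math
from typing import List

def rms(samples: List[float]) -> float:
    """Root-mean-square level of a non-empty sample list."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def normalize_gain(samples: List[float], target_rms=0.1, max_gain=10.0) -> List[float]:
    """Scale the clip toward target_rms, capping the gain so noise is not over-amplified."""
    if not samples:
        return []
    current = rms(samples)
    if current == 0:
        return list(samples)
    gain = min(target_rms / current, max_gain)
    return [x * gain for x in samples]

def energy_vad(samples: List[float], frame_len=160, threshold_ratio=3.0) -> List[bool]:
    """Mark each full frame as speech if its RMS exceeds threshold_ratio x the noise floor.

    The noise floor is approximated by the quietest frame in the clip, which is
    a simplification; a percentile or running estimate is more robust.
    """
    frames = [samples[i:i + frame_len]
              for i in range(0, len(samples) - frame_len + 1, frame_len)]
    if not frames:
        return []
    energies = [rms(f) for f in frames]
    noise_floor = max(min(energies), 1e-6)
    return [e > threshold_ratio * noise_floor for e in energies]
```

Because gain normalization scales every frame by the same factor, it does not change the VAD decisions. It only makes levels more consistent for the annotators who listen to the clip.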

Dual Annotation with Integrated QA

Each sample is reviewed by multiple annotators. Disagreements are resolved using YUGO’s layered quality assurance workflow, ensuring consistent and verified outputs.
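
The internals of YUGO's workflow are not described here. As a generic illustration of how dual annotation can feed QA, the sketch below measures chance-corrected agreement between two annotators with Cohen's kappa. It also lists the samples whose labels differ so that they can be sent for adjudication. The intent labels echo the complaint and inquiry example above, and the function names are hypothetical.

```python
from collections import Counter
from typing import List, Sequence

def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa: (observed agreement - chance agreement) / (1 - chance agreement)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability that both pick the same label independently.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

def disagreements(sample_ids: Sequence[str], labels_a: Sequence[str],
                  labels_b: Sequence[str]) -> List[str]:
    """IDs of samples where the two annotators chose different labels."""
    return [sid for sid, a, b in zip(sample_ids, labels_a, labels_b) if a != b]
```

With annotator A labeling four calls as complaint, inquiry, complaint, inquiry and annotator B labeling them as complaint, inquiry, inquiry, inquiry, observed agreement is 0.75 and chance agreement is 0.5, so kappa is 0.5. Only the third call would be routed to review.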

Contextual Guidelines for Annotators

Instructions are customized based on domain-specific goals, such as financial disclosures or telecom troubleshooting, so annotators can label with precision even in the presence of noise.

Support for Custom Data Collection

FutureBeeAI assists in capturing higher-quality audio where feasible, offering controlled environment recording and hardware calibration for custom projects.

Conclusion

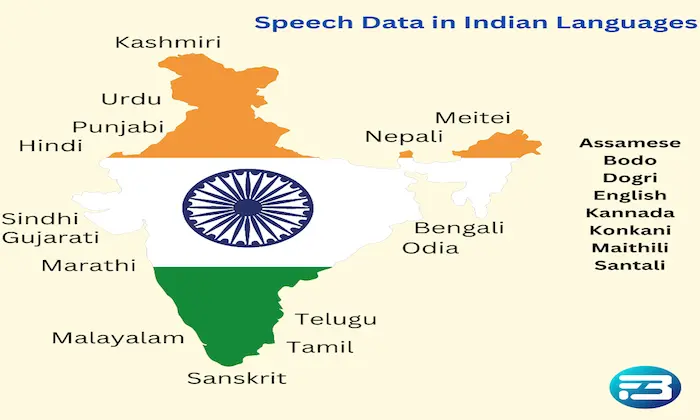

Labeling noisy call center audio datasets is a complex task that directly affects model learning and operational accuracy. At FutureBeeAI, we combine preprocessing, structured workflows, and platform-led QA to turn acoustically compromised data into valuable training assets. With multilingual capabilities, domain-aware labeling, and rigorous quality layers, we ensure that your voice AI models are trained on data that mirrors real-world complexity without compromising on precision.