How do you align model evaluation strategy with long-term goals?


Model evaluation is not a procedural checkpoint. It is a strategic control system.

Without alignment between evaluation design and long-term business objectives, AI models may optimize for technical benchmarks while delivering little real-world impact. Evaluation must function as a compass that guides deployment, iteration, and investment decisions.

Why Strategic Alignment Is Critical

Evaluation frameworks determine what success means. If success metrics do not reflect user outcomes or operational risk tolerance, models may pass validation while failing in practice.

For example, a text-to-speech (TTS) model may achieve strong Mean Opinion Scores (MOS) yet still sound unnatural when used in context. Alignment ensures that naturalness, trust, and domain fit are treated as primary outcomes rather than secondary considerations.
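To make the MOS example concrete, the sketch below computes a Mean Opinion Score (MOS) both pooled across all ratings and per usage context. The ratings, the context labels (`isolated_sentence`, `customer_dialogue`) and the 3.5 floor are hypothetical, chosen only to show how a single pooled average can hide a weak context.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Illustrative listener ratings on a 1-5 scale, tagged by usage context.
# These values are made up for the example; they are not measured results.
ratings: List[Tuple[str, int]] = (
    [("isolated_sentence", s) for s in [5, 5, 4, 5, 4, 5, 5, 4, 5, 4]]
    + [("customer_dialogue", s) for s in [3, 2, 3]]
)


def mos(scores: List[int]) -> float:
    """Mean Opinion Score: the arithmetic mean of 1-5 ratings."""
    return mean(scores)


def mos_by_context(rows: List[Tuple[str, int]]) -> Dict[str, float]:
    """Group ratings by usage context and compute a MOS for each group."""
    groups: Dict[str, List[int]] = {}
    for context, score in rows:
        groups.setdefault(context, []).append(score)
    return {ctx: mos(scores) for ctx, scores in groups.items()}


def weak_contexts(rows: List[Tuple[str, int]], floor: float = 3.5) -> List[str]:
    """Return contexts whose MOS falls below a (hypothetical) floor."""
    return sorted(ctx for ctx, m in mos_by_context(rows).items() if m < floor)


if __name__ == "__main__":
    print(f"pooled MOS: {mos([s for _, s in ratings]):.2f}")
    for ctx, m in sorted(mos_by_context(ratings).items()):
        print(f"{ctx}: {m:.2f}")
    print("below floor:", weak_contexts(ratings))
```

With these illustrative ratings the pooled MOS is about 4.15, while the dialogue context averages about 2.67: the kind of gap a single headline score conceals.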

Core Alignment Drivers

1. Outcome Relevance: Evaluation metrics must map directly to user-facing value. Technical accuracy alone is insufficient if perceptual authenticity, clarity, or contextual tone suffer.

2. Decision-Driven Evaluation: Evaluation results should trigger defined actions such as ship, refine, retrain, or rollback. If metrics do not influence decisions, they lack strategic weight.

3. Risk Visibility: The most dangerous model is not one that fails visibly, but one that creates false confidence. Continuous evaluation reduces the probability of unnoticed performance gaps entering production.
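One way to give evaluation results decision weight is to encode the allowed actions and their triggers before any results come in. The following is a minimal sketch assuming a single normalized quality score in [0, 1] and a production baseline to compare against; the thresholds `SHIP_BAR` and `RETRAIN_BAR` and the order of the rules are hypothetical and would need to come from product and risk requirements.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SHIP = "ship"
    REFINE = "refine"
    RETRAIN = "retrain"
    ROLLBACK = "rollback"


@dataclass
class EvalResult:
    candidate_score: float  # normalized quality score in [0, 1] (assumed scale)
    baseline_score: float   # score of the model currently in production
    is_deployed: bool       # True if the candidate is already serving traffic


# Hypothetical thresholds; real values must come from product requirements.
SHIP_BAR = 0.85
RETRAIN_BAR = 0.60


def decide(r: EvalResult) -> Action:
    """Map an evaluation result to exactly one pre-agreed action."""
    regressed = r.candidate_score < r.baseline_score
    if r.is_deployed and regressed:
        return Action.ROLLBACK  # live model fell below the previous version
    if r.candidate_score >= SHIP_BAR and not regressed:
        return Action.SHIP
    if r.candidate_score < RETRAIN_BAR:
        return Action.RETRAIN  # too far below the bar for incremental fixes
    return Action.REFINE  # near the bar, or below baseline: keep iterating
```

Keeping the rules explicit makes it easy to check whether each metric can actually change the outcome; one that never does carries little strategic weight.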

Strategic Practices for Long-Term Alignment

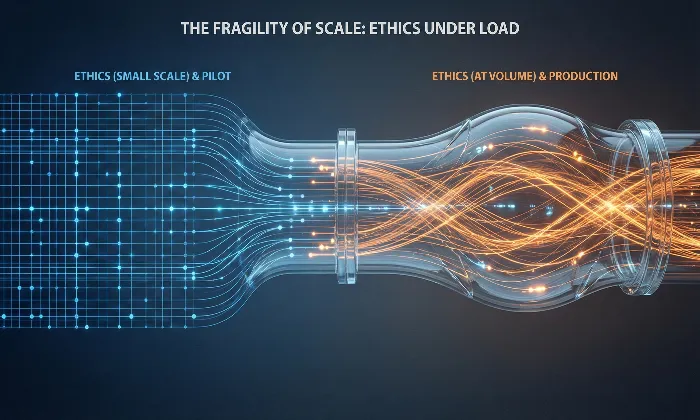

Stage-Based Evaluation Design: Early prototypes may rely on coarse screening metrics for rapid iteration. Pre-production models require attribute-level diagnostics. Production-ready systems demand calibrated thresholds and regression safeguards.
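Stage-based gates can be expressed as configuration that tightens as a model matures. This sketch assumes 1-5 perceptual scores for hypothetical attributes `naturalness` and `clarity`; the stage names, floors, and the 0.1 regression tolerance are illustrative assumptions, not recommended values.

```python
from typing import Dict, List, Optional

# Hypothetical per-stage gates. The prototype stage uses one coarse metric;
# later stages add attribute-level floors.
STAGE_GATES: Dict[str, Dict[str, float]] = {
    "prototype": {"overall": 3.0},
    "pre_production": {"overall": 3.8, "naturalness": 3.5, "clarity": 3.5},
    "production": {"overall": 4.2, "naturalness": 4.0, "clarity": 4.0},
}
# Production only: maximum allowed drop per attribute vs. baseline (hypothetical).
MAX_REGRESSION = 0.1


def gate_failures(
    stage: str,
    scores: Dict[str, float],
    baseline: Optional[Dict[str, float]] = None,
) -> List[str]:
    """Return human-readable reasons the model fails its stage gate (empty = pass)."""
    failures: List[str] = []
    for metric, floor in STAGE_GATES[stage].items():
        value = scores.get(metric)
        if value is None:
            failures.append(f"{metric}: missing")
        elif value < floor:
            failures.append(f"{metric}: {value} < {floor}")
    # Regression safeguard: compare against the currently deployed baseline.
    if stage == "production" and baseline:
        for metric, base in baseline.items():
            if metric in scores and base - scores[metric] > MAX_REGRESSION:
                failures.append(f"{metric}: regressed from {base} to {scores[metric]}")
    return failures
```

Returning reasons rather than a bare pass/fail keeps the gate diagnostic, which matters most at the pre-production stage.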

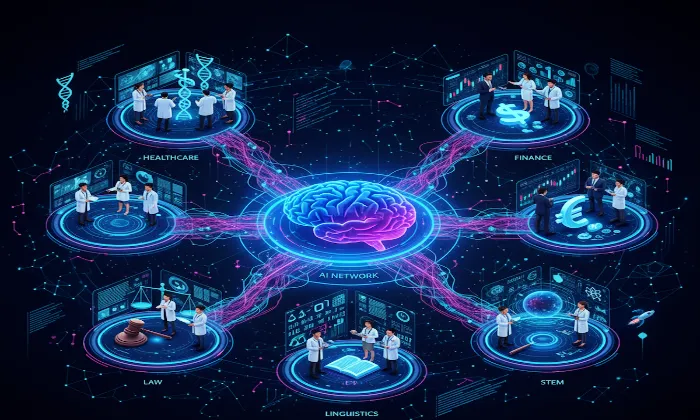

Domain and Native Expert Inclusion: Native-speaker evaluators and subject matter experts identify contextual misalignments that automated metrics overlook. This is particularly critical for culturally sensitive or high-stakes deployments.

Disagreement Analysis: Evaluator divergence should be examined, not dismissed. Disagreement often signals ambiguous task design, subgroup performance gaps, or perceptual instability.
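A simple starting point for disagreement analysis is to measure rating spread per item and, where evaluator groups are known, the gap between group means. This sketch uses population standard deviation from Python's `statistics` module; the 1.0 spread threshold, the item IDs, and the group labels are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List


def flag_disagreement(item_ratings: Dict[str, List[int]], max_spread: float = 1.0) -> List[str]:
    """Return IDs of items whose rating spread (population std. dev.) exceeds max_spread."""
    flagged = []
    for item_id, scores in item_ratings.items():
        # A single rating cannot disagree with anything, so skip those items.
        if len(scores) >= 2 and pstdev(scores) > max_spread:
            flagged.append(item_id)
    return flagged


def group_gap(ratings_by_group: Dict[str, List[int]]) -> float:
    """Largest difference between evaluator-group mean ratings for one item."""
    means = [mean(scores) for scores in ratings_by_group.values() if scores]
    if len(means) < 2:
        return 0.0  # need at least two non-empty groups to compare
    return max(means) - min(means)


if __name__ == "__main__":
    # Hypothetical ratings for three utterances from four evaluators each.
    items = {
        "utt_001": [4, 4, 5, 4],
        "utt_002": [1, 5, 2, 5],
        "utt_003": [3, 3, 3, 3],
    }
    print("high disagreement:", flag_disagreement(items))
    # Split utt_002 by (hypothetical) evaluator group to look for a pattern.
    print("group gap:", group_gap({"native": [1, 2], "non_native": [5, 5]}))
```

Flagged items are candidates for review: high spread with no group pattern may point to ambiguous task design, while a large gap between, say, native and non-native evaluators may point to a subgroup performance gap.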

Continuous Monitoring Architecture: Integrate evaluation into the model lifecycle rather than treating it as a one-time milestone. Longitudinal tracking protects against drift and against gaps that open as user expectations evolve.
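Longitudinal tracking can be as simple as comparing a rolling mean of periodic evaluation scores against a fixed baseline. The `DriftMonitor` class below is a hypothetical sketch; the window size, the tolerance, and the choice of a rolling mean (rather than a formal statistical test) are assumptions to adjust per deployment.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Track a rolling mean of a quality score and flag drift when it falls
    more than `tolerance` below a fixed baseline."""

    def __init__(self, baseline: float, window: int = 5, tolerance: float = 0.2):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores: deque = deque(maxlen=window)  # oldest score drops out automatically

    def add(self, score: float) -> bool:
        """Record one periodic evaluation score; return True if drift is detected."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough history to judge yet
        return self.baseline - mean(self.scores) > self.tolerance


if __name__ == "__main__":
    # Hypothetical weekly MOS-style scores for a deployed model.
    monitor = DriftMonitor(baseline=4.3, window=3, tolerance=0.2)
    for week, s in enumerate([4.3, 4.2, 4.3, 4.0, 3.9, 3.8], start=1):
        print(week, s, "DRIFT" if monitor.add(s) else "ok")
```

Averaging over a window smooths out single noisy evaluation rounds, at the cost of reacting a few periods later than a per-round check would.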

Practical Takeaway

Strategic evaluation alignment ensures that AI systems optimize for business impact, not just technical performance.

Define success in terms of user outcomes.

Link evaluation outputs to operational decisions.

Monitor continuously to prevent false confidence.

At FutureBeeAI, evaluation frameworks are designed to connect attribute-level diagnostics with deployment governance, ensuring models remain aligned with both user expectations and long-term strategic objectives.
