How Does AI Extract Sentiment from Call Center Conversations?

In today’s customer service landscape, understanding not just what customers say but how they say it is critical. Sentiment analysis helps organizations detect frustration, urgency, satisfaction, or confusion, so they can gauge customer emotions, improve service quality, and act in real time.

AI-powered sentiment analysis in call center conversations depends heavily on domain-specific speech datasets that combine audio recordings, transcriptions, and acoustic annotations. At FutureBeeAI, we design high-quality, sentiment-labeled call center datasets that empower AI models to read between the lines, literally and emotionally.

Sentiment analysis in the context of speech involves identifying and classifying the emotional tone behind spoken content. It enables systems to understand:

- Customer mood and intent

- Agent empathy and response patterns

- Escalation triggers or dissatisfaction signals

- Overall emotional trajectory of a conversation

Unlike text-only sentiment analysis, speech sentiment extraction incorporates both linguistic and acoustic dimensions, requiring datasets that support both perspectives.

A sentiment-rich call center dataset includes several layers of annotation:

- Transcript-Level Sentiment Labels: Assigns positive, negative, or neutral sentiment to entire call turns or utterances.

- Segment-Level Emotion Tags: Captures shifts in emotion mid-conversation (for example, from confused to angry to satisfied).

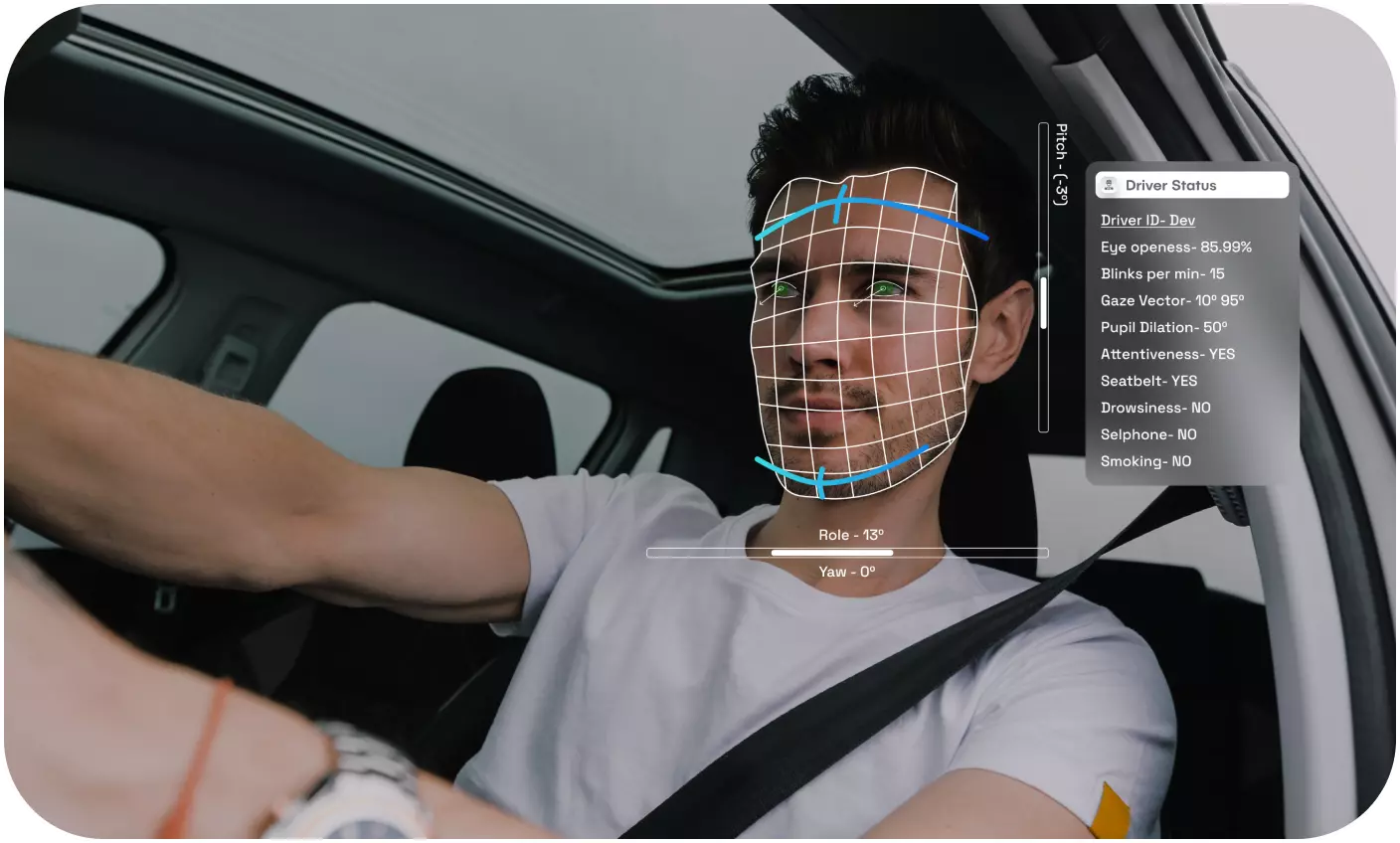

- Acoustic Feature Annotation: Records speech rate, pitch, tone, volume, and pauses, which carry essential non-verbal emotional cues.

- Speaker Diarization and Role Tagging: Separates customer sentiment from agent sentiment, enabling role-based analysis.

- Contextual Metadata: Call topic, duration, resolution status, and agent profile help models learn sentiment patterns in specific business scenarios.
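To make these layers concrete, here is a minimal Python sketch of how one annotated call could be represented in code. The class and field names (`Segment`, `CallRecord`, `emotion_shifts`) are hypothetical illustrations, not FutureBeeAI's actual delivery format; the helper shows how segment-level emotion tags combined with role tags let you trace a customer's emotional shifts.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Literal

# Hypothetical schema for illustration only.
Role = Literal["customer", "agent"]
Sentiment = Literal["positive", "negative", "neutral"]


@dataclass
class Segment:
    speaker_role: Role        # from speaker diarization and role tagging
    start_s: float            # segment start time in seconds
    end_s: float              # segment end time in seconds
    transcript: str
    sentiment: Sentiment      # transcript-level polarity label
    emotion: str              # segment-level emotion tag, e.g. "confused"
    acoustic: dict[str, float] = field(default_factory=dict)  # e.g. {"pitch_hz": 210.0}


@dataclass
class CallRecord:
    call_id: str
    topic: str                # contextual metadata
    duration_s: float
    resolved: bool
    agent_id: str
    segments: list[Segment] = field(default_factory=list)

    def emotion_shifts(self, role: Role) -> list[tuple[str, str]]:
        """Return consecutive emotion changes for one speaker role, in time order."""
        ordered = sorted(self.segments, key=lambda s: s.start_s)
        tags = [s.emotion for s in ordered if s.speaker_role == role]
        return [(a, b) for a, b in zip(tags, tags[1:]) if a != b]
```

For a billing call where the customer's segments are tagged confused, angry, then satisfied, `emotion_shifts("customer")` would return the two transitions `("confused", "angry")` and `("angry", "satisfied")`, which is the kind of mid-conversation shift that segment-level tags are meant to capture.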

At FutureBeeAI, we provide fully anonymized, multilingual, and domain-specific sentiment datasets tailored to use cases in telecom, banking, healthcare, and more.

How AI Models Interpret Sentiment in Speech

AI models extract sentiment using a combination of natural language processing and speech signal processing:

Linguistic Sentiment Modeling

- Analyzes the semantic content of spoken words

- Identifies sentiment-rich phrases like “this is frustrating,” “thank you so much,” or “you’re not helping”
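As a rough illustration of the linguistic side, the sketch below scores an utterance by matching sentiment-bearing phrases like the ones above and averaging their weights. The phrase list, the weights, and the `linguistic_score` name are hypothetical; production systems typically rely on trained text classifiers, and a fixed lexicon like this cannot handle negation or sarcasm on its own.

```python
import re

# Hypothetical phrase weights in [-1, 1]; a real system would learn these from labeled data.
PHRASE_WEIGHTS = {
    "this is frustrating": -0.8,
    "you're not helping": -0.9,
    "thank you so much": 0.9,
    "that fixed it": 0.7,
}


def normalize(text: str) -> str:
    """Lowercase, unify curly apostrophes, and strip punctuation except apostrophes."""
    text = text.lower().replace("\u2019", "'")
    text = re.sub(r"[^a-z' ]+", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def linguistic_score(utterance: str) -> float:
    """Average weight of all matched phrases; 0.0 (neutral) when nothing matches."""
    text = normalize(utterance)
    hits = []
    for phrase, weight in PHRASE_WEIGHTS.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        hits.extend([weight] * len(re.findall(pattern, text)))
    return sum(hits) / len(hits) if hits else 0.0
```

For example, "Honestly, this is frustrating. You’re not helping!" matches two negative phrases and scores strongly negative, while an utterance with no listed phrase falls back to neutral.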

Acoustic Sentiment Modeling

- Examines prosodic features like pitch, tempo, intensity, and inflection

- Detects emotional states that the words alone can miss, such as annoyance delivered in polite language or a calm but passive-aggressive tone
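The acoustic side can be sketched with plain NumPy. The example below computes three of the prosodic cues mentioned above: intensity (per-frame RMS energy), pauses (the share of frames below an energy threshold), and pitch (a rough autocorrelation-based estimate on voiced frames). The function names, frame sizes, the 75 to 400 Hz pitch range, and the silence threshold are all assumptions for illustration. Real pipelines usually use dedicated speech toolkits with more robust voicing detection and pitch tracking.

```python
import numpy as np


def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames (zero-padding very short input)."""
    if len(x) < frame_len:
        x = np.pad(x, (0, frame_len - len(x)))
    n_frames = 1 + (len(x) - frame_len) // hop
    return np.stack([x[i * hop : i * hop + frame_len] for i in range(n_frames)])


def estimate_pitch(frame, sr, fmin=75.0, fmax=400.0):
    """Rough fundamental frequency (Hz) from the strongest autocorrelation peak."""
    frame = frame - frame.mean()
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1 :]  # lags 0..N-1
    lo = int(sr / fmax)                    # shortest period considered, in samples
    hi = min(int(sr / fmin), len(ac) - 1)  # longest period considered, in samples
    if ac[0] <= 0 or hi <= lo:
        return 0.0
    lag = lo + int(np.argmax(ac[lo : hi + 1]))
    return sr / lag


def acoustic_features(x, sr, frame_ms=40, hop_ms=10, silence_rms=0.01):
    """Summarize intensity, pausing, and pitch for one utterance (hypothetical thresholds)."""
    frame_len = int(sr * frame_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    frames = frame_signal(np.asarray(x, dtype=float), frame_len, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))  # per-frame intensity
    voiced = rms >= silence_rms                   # crude speech vs. pause split
    pitches = [estimate_pitch(f, sr) for f in frames[voiced]]
    return {
        "mean_rms": float(rms.mean()),
        "pause_ratio": float(1.0 - voiced.mean()),
        "mean_pitch_hz": float(np.mean(pitches)) if pitches else 0.0,
    }
```

Fed half a second of a 200 Hz tone followed by half a second of silence at 16 kHz, this returns a mean pitch near 200 Hz and a pause ratio a little under one half. Changes in these numbers over a call (rising pitch, louder speech, shorter pauses) are the kind of signal an acoustic model learns to associate with emotional states.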

Multimodal Fusion

- Combines text and audio features for more accurate sentiment classification

- Useful in edge cases like sarcasm, where text and tone conflict
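One simple way to combine the two modalities is late (score-level) fusion, sketched below. It assumes a text model and an acoustic model each already output a sentiment score in [-1, 1]; the weight, neutral band, conflict threshold, and the `late_fusion` name are hypothetical. Many production systems instead fuse text and audio features earlier, inside a single model, so treat this as an illustration of the idea rather than the method any particular system uses.

```python
from dataclasses import dataclass


@dataclass
class FusedSentiment:
    score: float               # combined score in [-1, 1]
    label: str                 # "positive", "negative", or "neutral"
    modalities_conflict: bool  # text and voice disagree strongly (possible sarcasm)


def late_fusion(text_score: float, acoustic_score: float, text_weight: float = 0.6,
                neutral_band: float = 0.15, conflict_gap: float = 1.0) -> FusedSentiment:
    """Weighted average of per-modality scores, each assumed to lie in [-1, 1]."""
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0 and 1")
    score = text_weight * text_score + (1.0 - text_weight) * acoustic_score
    if score > neutral_band:
        label = "positive"
    elif score < -neutral_band:
        label = "negative"
    else:
        label = "neutral"
    # A large gap between modalities is exactly the text/tone mismatch seen in sarcasm.
    conflict = abs(text_score - acoustic_score) >= conflict_gap
    return FusedSentiment(score, label, conflict)
```

A phrase like "great, that's just perfect" might score positive on text but strongly negative on voice. The averaged label alone could hide that mismatch, which is why the sketch surfaces `modalities_conflict` separately so a downstream rule can treat such turns with caution.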

These models require training on large volumes of annotated speech data, which is precisely what FutureBeeAI delivers at scale and with domain alignment.

Applications of Sentiment Analysis in Call Centers

- Real-Time Escalation: Trigger supervisor intervention when a conversation trends negative

- Agent Coaching: Analyze empathy and tone to improve performance

- Customer Satisfaction Prediction: Forecast Net Promoter Scores based on sentiment trajectory

- Product Feedback Extraction: Understand customer opinions from unsolicited feedback in calls
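The real-time escalation idea can be sketched as a rolling average over the customer's most recent utterance scores, assuming each score is already a sentiment value in [-1, 1] from a model like those described earlier. The class name `EscalationMonitor`, the window of three utterances, and the -0.4 threshold are hypothetical and would need tuning against real call outcomes.

```python
from collections import deque


class EscalationMonitor:
    """Flag a call when recent customer sentiment trends negative (hypothetical rule)."""

    def __init__(self, window: int = 3, threshold: float = -0.4):
        self.scores = deque(maxlen=window)  # keeps only the most recent scores
        self.threshold = threshold

    def update(self, score: float) -> bool:
        """Add one utterance score; return True if a supervisor should be alerted."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough context yet
        return sum(self.scores) / len(self.scores) <= self.threshold
```

Feeding the scores 0.2, -0.3, -0.6, -0.7 in order raises the flag only on the fourth utterance, once the last three scores average below the threshold. Averaging over a window rather than reacting to a single negative turn is a design choice meant to avoid alerting on one isolated complaint.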

Conclusion

Speech sentiment analysis is no longer optional; it is a strategic asset. When AI understands emotions, it enables proactive service, strengthens customer loyalty, and gives human agents emotional intelligence insights. FutureBeeAI’s sentiment-annotated call center datasets offer the foundation you need to train, deploy, and optimize sentiment-aware AI systems that understand more than just words.
