What makes a model evaluation framework reusable?

A reusable model evaluation framework is not just a checklist of metrics. It is a structured system that remains stable across projects while adapting to changing models, datasets, and deployment contexts.

Reusability lets evaluation processes evolve without forcing teams to rebuild the foundations each time a new model is introduced.

Why Reusability Is Operationally Critical

Reusable frameworks improve efficiency, consistency, and strategic alignment across teams and product cycles.

They reduce redundancy, enable cross-model comparison, and support scalable evaluation as portfolios expand. When team members transition, structured frameworks also preserve institutional knowledge.

Core Design Principles for Reusable Frameworks

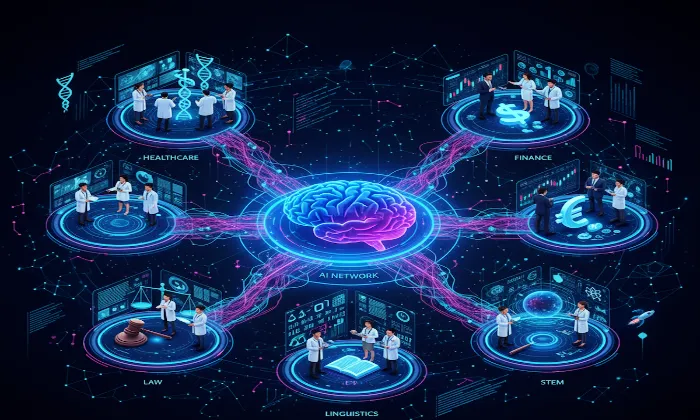

1. Modular Architecture: Evaluation components should function as independent modules. Metrics, task types, evaluator pools, and reporting layers must be replaceable without disrupting the rest of the system. For example, a core evaluation layer for TTS models can remain constant while domain-specific attributes are adjusted.

2. Context-Aware Metric Mapping: Metrics must be clearly defined and mapped to use cases. A customer support TTS deployment may prioritize intelligibility and tonal clarity, while audiobook narration may emphasize expressiveness and rhythm stability.

3. Embedded Feedback Loops: Frameworks should incorporate structured mechanisms for refinement. Evaluation outcomes must inform metric calibration, task design adjustments, and rubric evolution over time.

4. Evaluator Calibration and Training: Reusability depends on evaluator consistency. Ongoing calibration sessions and structured onboarding ensure interpretive alignment across teams and iterations.

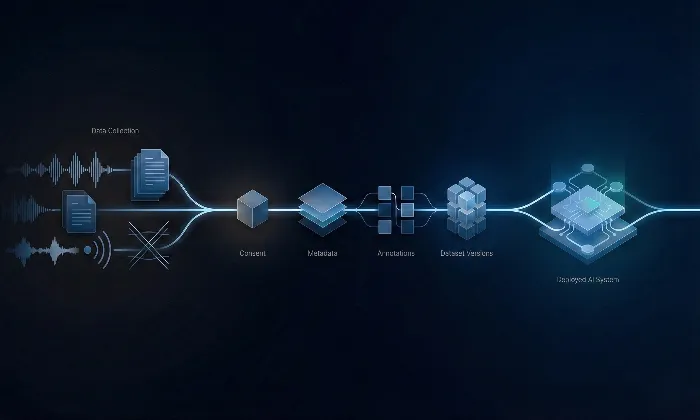

5. Auditability and Traceability: Every evaluation cycle should log evaluator identity, task configuration, model version, timestamp, and contextual metadata. This ensures reproducibility and supports root cause analysis when discrepancies arise.
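
As an illustration of how the modular, context-aware, and auditable principles above might fit together, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `USE_CASE_ATTRIBUTES` mapping, the `EvaluationRecord` schema, the `evaluate` function, and the 1-to-5 rating scale are hypothetical names and choices, not a description of any particular production system. The aggregation function is passed in as a parameter so it can be swapped without touching the rest of the code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from statistics import mean
from typing import Callable, Dict, List, Optional

# Context-aware metric mapping: each use case lists the attributes it prioritizes.
# Keys and attribute names are illustrative, based on the TTS examples in the text.
USE_CASE_ATTRIBUTES: Dict[str, List[str]] = {
    "customer_support": ["intelligibility", "tonal_clarity"],
    "audiobook": ["expressiveness", "rhythm_stability"],
}


@dataclass
class EvaluationRecord:
    """Audit record for one evaluation cycle (hypothetical schema)."""
    evaluator_id: str
    model_version: str
    use_case: str
    scores: Dict[str, float]
    timestamp: str
    metadata: Dict[str, str] = field(default_factory=dict)


def evaluate(
    ratings: Dict[str, List[float]],
    use_case: str,
    model_version: str,
    evaluator_id: str,
    aggregate: Callable[[List[float]], float] = mean,
    metadata: Optional[Dict[str, str]] = None,
) -> EvaluationRecord:
    """Score only the attributes mapped to the use case and return an audit record."""
    attributes = USE_CASE_ATTRIBUTES[use_case]
    # The aggregation step is a replaceable module: any callable over a list works.
    scores = {attr: aggregate(ratings[attr]) for attr in attributes}
    return EvaluationRecord(
        evaluator_id=evaluator_id,
        model_version=model_version,
        use_case=use_case,
        scores=scores,
        timestamp=datetime.now(timezone.utc).isoformat(),
        metadata=dict(metadata or {}),
    )


# Example ratings on an assumed 1-to-5 scale, one list per attribute.
ratings = {
    "intelligibility": [4, 5],
    "tonal_clarity": [3, 5],
    "expressiveness": [2, 2],
    "rhythm_stability": [4, 4],
}
record = evaluate(ratings, "customer_support", "tts-v1", "evaluator-01")
print(record.use_case, record.scores)
```

Calling `evaluate(ratings, "audiobook", "tts-v1", "evaluator-01", aggregate=min)` would reuse the same core while switching both the attribute set and the aggregation rule, which is the kind of substitution a modular design is meant to allow. The returned record carries the evaluator identity, model version, timestamp, and metadata needed to trace a result back to its configuration.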

Structural Benefits of Reusable Frameworks

Cross-Project Consistency: Comparable outputs across multiple models and product lines.

Scalable Governance: Evaluation processes adapt more easily as evaluation volume increases.

Reduced Onboarding Friction: Clear documentation accelerates team transitions.

Longitudinal Analysis Capability: Consistent structure enables performance tracking over time.

Practical Takeaway

A reusable evaluation framework is a governance mechanism, not just an operational tool.

It standardizes decision-making, preserves methodological integrity, and enables continuous improvement without structural resets.

At FutureBeeAI, evaluation systems are designed to balance modular flexibility with methodological rigor, ensuring AI models remain comparable, auditable, and aligned with real-world performance demands.
