How does multimodal dataset creation amplify privacy complexity?

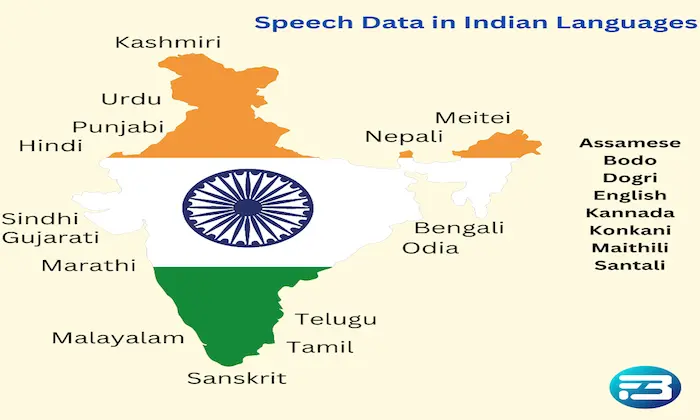

Multimodal datasets hold the promise of richer insights and superior model performance. Yet they introduce significant privacy complexity because of the variety of data types involved (audio, text, images, and more), each with its own privacy implications and regulatory requirements. At FutureBeeAI, we understand that combining these modalities multiplies the challenge of ensuring compliance and safeguarding user privacy.

Why Privacy Complexity Matters

In today’s data-driven world, where breaches and privacy violations can have serious consequences, understanding privacy intricacies in multimodal dataset creation is critical for AI teams. These datasets often involve sensitive information from diverse sources, making strong privacy practices essential.

Diverse Data Types: Each modality requires different handling methods, consent structures, and legal considerations, which complicates compliance.

Cross-Modal Risks: Combining data types increases the risk of re-identification, where anonymized data can be linked back to individuals when correlated across modalities.

Regulatory Landscape: A global patchwork of privacy laws such as GDPR and CCPA creates added complexity when managing multimodal datasets at scale.
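To make the cross-modal re-identification risk concrete, the sketch below measures k-anonymity (the size of the smallest group of records sharing the same quasi-identifier values) on a hypothetical toy dataset. The field names and records are invented for illustration: each modality contributes one coarse attribute that, on its own, keeps every person hidden among at least two records, while the combination singles individuals out.

```python
from collections import Counter

# Hypothetical toy records. No names or IDs: each modality contributes one
# coarse attribute that looks harmless on its own.
records = [
    {"audio_accent": "en-IN", "image_age_band": "20-29", "text_city": "Pune"},
    {"audio_accent": "en-IN", "image_age_band": "30-39", "text_city": "Pune"},
    {"audio_accent": "en-IN", "image_age_band": "20-29", "text_city": "Delhi"},
    {"audio_accent": "en-US", "image_age_band": "20-29", "text_city": "Pune"},
    {"audio_accent": "en-US", "image_age_band": "30-39", "text_city": "Delhi"},
    {"audio_accent": "en-IN", "image_age_band": "20-29", "text_city": "Pune"},
]


def k_anonymity(rows, keys):
    """Size of the smallest group of rows sharing identical values for `keys`."""
    groups = Counter(tuple(row[k] for k in keys) for row in rows)
    return min(groups.values())


# Compare each modality in isolation with all modalities combined.
for keys in (["audio_accent"], ["image_age_band"], ["text_city"],
             ["audio_accent", "image_age_band", "text_city"]):
    print(" + ".join(keys), "-> k =", k_anonymity(records, keys))
```

Running it reports k = 2 for each modality alone and k = 1 once they are combined, meaning at least one contributor becomes unique. Real audits involve far more records and attributes; this only illustrates the mechanism.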

Key Challenges in Multimodal Privacy

Consent Complexity: Contributors must clearly understand how their data will be used across multiple formats. For example, someone may consent to audio data collection without fully realizing how that data could be analyzed alongside visual inputs.

Data Minimization: Multimodal projects often encourage over-collection. Enforcing strict data minimization is essential to reduce privacy risks and remain compliant.

Metadata Management: Each modality generates its own metadata. Without consistent tracking, data provenance and processing histories become unclear, complicating audits and compliance verification.

Behavioral Drift: Contributor behavior and preferences can change over time (for example, a contributor may later withdraw consent for one modality), introducing drift that affects both privacy guarantees and dataset reliability. Continuous monitoring of contributor session logs is required to manage this risk.

Interoperability Issues: Different modalities may rely on different encryption and access standards. Ensuring uniform privacy controls across all data types is essential to avoid weak points.
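One common way to enforce data minimization is a per-modality allowlist applied before anything is stored. The sketch below uses hypothetical modality names and field lists; a real project would derive them from its documented purpose. Unknown modalities are rejected rather than passed through, which also keeps controls uniform when a new data type appears.

```python
# Hypothetical per-modality allowlists: the only fields this project needs.
ALLOWED_FIELDS = {
    "audio": {"sample_id", "duration_s", "language", "transcript"},
    "image": {"sample_id", "width", "height", "label"},
    "text": {"sample_id", "language", "body"},
}


def minimize(modality: str, record: dict) -> dict:
    """Drop every field not allowlisted for this modality; fail closed on unknown modalities."""
    allowed = ALLOWED_FIELDS.get(modality)
    if allowed is None:
        raise ValueError(f"no allowlist defined for modality {modality!r}")
    return {k: v for k, v in record.items() if k in allowed}


raw_audio = {
    "sample_id": "a-001",
    "duration_s": 4.2,
    "language": "hi",
    "transcript": "namaste",
    "gps": (18.52, 73.85),       # not needed for this task, so never stored
    "device_serial": "XYZ123",   # not needed either
}
print(minimize("audio", raw_audio))
```

Only the four allowlisted audio fields survive; location and device identifiers are discarded at the point of collection instead of being cleaned up later.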

FutureBeeAI’s Approach to Privacy in Multimodal Datasets

At FutureBeeAI, we manage these complexities through structured, ethics-first practices:

Standardized Consent Processes: Contributors receive clear, modality-specific explanations with explicit opt-in mechanisms for each data type.

Strong Data Governance: Our systems enforce metadata consistency and lineage tracking across multimodal datasets, supporting transparency and auditability.

Regular Privacy Audits: We continuously review datasets against global regulations and internal ethical standards.

Cross-Functional Collaboration: Data engineers, legal experts, and product teams work together to design cohesive privacy strategies across modalities.
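As a minimal sketch of modality-specific, explicit opt-in, the example below assumes a hypothetical `ConsentRecord` structure and a hypothetical `link:` key naming scheme (not FutureBeeAI's actual system). Consent defaults to "no", each modality is granted separately, and cross-modal analysis such as linking audio with images requires its own opt-in, covering the case where someone agrees to audio collection without expecting it to be analyzed alongside visual inputs.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical consent record: one explicit opt-in entry per modality or purpose."""
    contributor_id: str
    # Modalities (and linkage purposes) the contributor explicitly agreed to.
    opted_in: set = field(default_factory=set)

    def grant(self, key: str) -> None:
        self.opted_in.add(key)

    def withdraw(self, key: str) -> None:
        self.opted_in.discard(key)


def link_key(a: str, b: str) -> str:
    """Hypothetical key for a cross-modal linkage opt-in, e.g. 'link:audio+image'."""
    return "link:" + "+".join(sorted((a, b)))


def usable_modalities(consent: ConsentRecord, requested: set) -> set:
    """Only modalities with an explicit opt-in; a missing entry means no consent."""
    return requested & consent.opted_in


def may_link(consent: ConsentRecord, a: str, b: str) -> bool:
    """Cross-modal analysis needs both modalities plus a separate linkage opt-in."""
    return {a, b, link_key(a, b)} <= consent.opted_in


c = ConsentRecord("contrib-42")
c.grant("audio")
c.grant("image")
print(usable_modalities(c, {"audio", "image", "text"}))  # audio and image only
print(may_link(c, "audio", "image"))  # False: no linkage opt-in yet
c.grant(link_key("audio", "image"))
print(may_link(c, "audio", "image"))  # True
```

Treating linkage as a separate consent key means withdrawing either modality, or the linkage itself, immediately blocks cross-modal use without touching the other grants.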

By addressing multimodal privacy challenges proactively, FutureBeeAI enables innovation without compromising trust.

Practical Takeaway

FutureBeeAI’s commitment to ethical AI data collection means every multimodal dataset is built for ethics, fairness, and accountability, not only for accuracy and performance. We collect only what is necessary, treat contributors as collaborators, and respect their dignity and diversity across all data types. This approach supports compliance with global regulations while building AI systems that people can trust.