Why do models fail in unseen environments?

Machine Learning

AI Challenges

Predictive Models

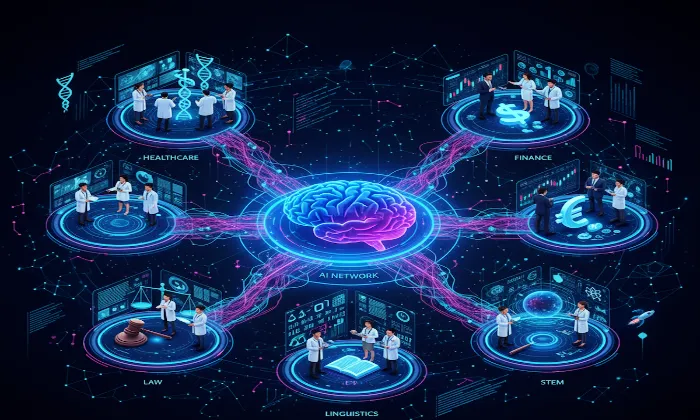

Why do AI models often stumble when deployed in environments they were never trained on? This challenge surfaces repeatedly across high-stakes domains such as finance, healthcare, and security. In most cases, the root cause is poor generalization, driven by limited data diversity and insufficient exposure to real-world variability during training.

Understanding the Core Challenge

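AI models typically fail in unfamiliar environments because of overfitting and narrow training distributions. Both leave a model unprepared for distribution shift: the gap between the data it was trained on and the data it meets after deployment. Overfitting occurs when a model learns patterns that are overly specific to its training data rather than robust, transferable representations.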

For example, a facial recognition model trained exclusively on well-lit, frontal images may perform impressively during validation yet struggle in low light or at non-standard camera angles. Without exposure to a wide range of conditions, models lack the context needed to make reliable decisions outside controlled environments.
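To make this failure mode concrete, here is a deliberately simplified Python sketch using synthetic numbers, not a real face model. It assumes a hypothetical single feature (the mean brightness of an image patch) and a threshold rule fitted only on well-lit captures. The rule scores perfectly on validation data drawn from the same conditions, then drops to chance once every capture is darkened to simulate low light.

```python
import numpy as np

# Hypothetical 1-D feature: mean brightness of an image patch (0 = black, 1 = white).
# Label 1 = "face present", label 0 = "background only". All values are synthetic.
def make_split(n, low, high, label):
    feats = np.linspace(low, high, n)
    return feats, np.full(n, label)

def fit_threshold(x, y):
    # A deliberately brittle rule: split halfway between the two class means.
    return (x[y == 1].mean() + x[y == 0].mean()) / 2

def accuracy(threshold, x, y):
    return float(np.mean((x > threshold).astype(int) == y))

# Training data: well-lit captures only.
x_pos, y_pos = make_split(200, 0.70, 0.90, 1)
x_neg, y_neg = make_split(200, 0.20, 0.40, 0)
x_train = np.concatenate([x_pos, x_neg])
y_train = np.concatenate([y_pos, y_neg])
t = fit_threshold(x_train, y_train)

# Validation drawn from the same well-lit conditions (different sample points).
x_val = np.concatenate([np.linspace(0.72, 0.88, 50), np.linspace(0.22, 0.38, 50)])
y_val = np.concatenate([np.ones(50, dtype=int), np.zeros(50, dtype=int)])

# Simulated low-light deployment: every patch is half as bright.
x_dark = x_val * 0.5

val_acc = accuracy(t, x_val, y_val)
dark_acc = accuracy(t, x_dark, y_val)
print(f"threshold={t:.2f}  validation={val_acc:.2f}  low-light={dark_acc:.2f}")
# -> threshold=0.55  validation=1.00  low-light=0.50
```

The threshold is not wrong for the data it saw. The problem is that brightness never varied independently of the label during training, so nothing pushed the model toward a feature that survives a lighting change.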

Why Dataset Diversity Improves Model Robustness

Diverse datasets are essential for building resilient AI systems. Exposure to variation during training allows models to learn invariant features rather than brittle correlations.

In facial recognition systems, this includes variation across:

Lighting conditions

Camera angles and distances

Occlusions such as glasses, masks, or headwear

Background and environmental context

A model trained only on bright, studio-quality images is far more likely to fail when deployed in real-world settings like parking lots, hospitals, or outdoor checkpoints. Diverse datasets help close this gap by preparing models for the conditions they will actually encounter.
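One way to act on this before training is to audit capture metadata for coverage. The Python sketch below assumes each sample carries hypothetical `lighting`, `angle`, and `occlusion` tags (the field names and values are illustrative, not a FutureBeeAI schema). It counts samples for every combination of conditions and flags combinations that fall below a chosen minimum.

```python
import math
from collections import Counter
from itertools import product

# Hypothetical condition vocabulary; a real project would derive it from
# the deployment environments it actually expects to face.
CONDITIONS = {
    "lighting": ["bright", "dim", "backlit"],
    "angle": ["frontal", "profile"],
    "occlusion": ["none", "glasses", "mask"],
}

def coverage_report(records, conditions, min_count):
    """Count samples per combination of conditions and return the thin or empty ones."""
    keys = list(conditions)
    counts = Counter(tuple(r[k] for k in keys) for r in records)
    gaps = {}
    for combo in product(*(conditions[k] for k in keys)):
        n = counts.get(combo, 0)
        if n < min_count:
            gaps[combo] = n
    return gaps

# Toy metadata for a studio-style dataset: almost everything is bright, frontal, unoccluded.
records = [{"lighting": "bright", "angle": "frontal", "occlusion": "none"}] * 500
records += [{"lighting": "dim", "angle": "frontal", "occlusion": "none"}] * 3

total = math.prod(len(v) for v in CONDITIONS.values())
gaps = coverage_report(records, CONDITIONS, min_count=50)
print(f"{len(gaps)} of {total} condition combinations have fewer than 50 samples")
# -> 17 of 18 condition combinations have fewer than 50 samples
```

The minimum of 50 samples is an arbitrary example; a sensible floor depends on the model and the task. The useful output is the list of empty or thin combinations, which points directly at what to collect next.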

Common Pitfalls in Model Training

Several recurring mistakes contribute to poor real-world performance:

Ignoring deployment environments: Models are often trained without considering where and how they will be used. A system optimized for indoor environments may degrade rapidly outdoors due to lighting shifts and background noise.

Static training datasets: Training once and freezing the dataset assumes the world does not change. In reality, environments, devices, and user behavior evolve continuously.

Narrow evaluation practices: Testing models only on data that resembles the training set creates false confidence. Robustness can only be confirmed when models are evaluated under unfamiliar conditions.
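A simple guard against narrow evaluation is to report accuracy per deployment condition instead of only in aggregate. This Python sketch assumes an evaluation log of hypothetical `(condition, label, prediction)` tuples; the condition names and counts are invented for illustration.

```python
from collections import defaultdict

def sliced_accuracy(examples):
    """Return overall accuracy plus accuracy for each deployment condition."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for condition, label, prediction in examples:
        totals[condition] += 1
        correct[condition] += int(label == prediction)
    overall = sum(correct.values()) / sum(totals.values())
    per_condition = {c: correct[c] / totals[c] for c in totals}
    return overall, per_condition

# Toy evaluation log: mostly indoor captures, plus a handful from outdoors at night.
examples = (
    [("indoor", 1, 1)] * 90
    + [("indoor", 0, 0)] * 5
    + [("outdoor_night", 1, 0)] * 4
    + [("outdoor_night", 1, 1)] * 1
)

overall, per_condition = sliced_accuracy(examples)
print(f"overall: {overall:.2f}")
for condition, acc in sorted(per_condition.items()):
    print(f"  {condition}: {acc:.2f}")
# Prints:
# overall: 0.96
#   indoor: 1.00
#   outdoor_night: 0.20
```

Here a 96% headline figure hides a condition where the model is right only one time in five. The aggregate looks healthy simply because the failing condition is rare in the test set.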

Strategies for Improving Model Resilience

To reduce failure in unseen environments, teams should adopt the following practices:

Multi-condition data collection: Proactively collect data across different lighting, environments, and usage contexts. FutureBeeAI’s facial datasets are structured to include such variability, supporting real-world robustness.

Behavioral and environmental drift monitoring: Track how model performance changes as new data enters production. Tools like FutureBeeAI’s contributor session logs help identify shifts in capture behavior and environment that may impact accuracy.

Iterative retraining and validation: Periodically retrain models with updated data that reflects current conditions. Continuous learning is essential for systems operating in dynamic environments.
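Drift monitoring can start with something as simple as comparing a feature's distribution in production against its distribution in training. The sketch below uses the Population Stability Index (PSI), a common drift statistic computed as the sum over bins of (actual − expected) × ln(actual / expected), on a hypothetical per-capture brightness feature. The synthetic samples, the bin edges, and the 0.25 review threshold (a frequently cited rule of thumb, not a standard) are all assumptions that a real system would need to tune.

```python
import numpy as np

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples of one feature."""
    e_counts, _ = np.histogram(expected, bins=edges)
    a_counts, _ = np.histogram(actual, bins=edges)
    # Convert counts to proportions; eps avoids log(0) when a bin is empty.
    e = e_counts / e_counts.sum() + eps
    a = a_counts / a_counts.sum() + eps
    return float(np.sum((a - e) * np.log(a / e)))

edges = np.linspace(0.0, 1.0, 11)  # ten equal-width brightness bins (assumed)

# Hypothetical feature: mean brightness of each capture (synthetic values).
train_brightness = np.linspace(0.55, 0.95, 1000)  # well-lit training captures
prod_same = np.linspace(0.56, 0.94, 500)          # production resembling training
prod_dim = np.linspace(0.15, 0.55, 500)           # production after a move to dim sites

REVIEW_THRESHOLD = 0.25  # rule-of-thumb cutoff; tune per feature and use case

for name, sample in [("stable", prod_same), ("dim", prod_dim)]:
    score = psi(train_brightness, sample, edges)
    action = "flag for retraining review" if score > REVIEW_THRESHOLD else "ok"
    print(f"{name}: PSI={score:.3f} -> {action}")
```

A high PSI only says that the inputs have moved. Whether accuracy has actually dropped still needs labeled samples from the new conditions, which is where the retraining and validation loop described above comes in.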

Practical Takeaway

AI models usually fail in unseen environments not because the algorithms are weak, but because the training data is incomplete. Robust performance depends on datasets that reflect the full range of conditions models will face after deployment.

By investing in diverse, representative data and disciplined AI data collection, teams can significantly reduce real-world failure rates. The guiding question should always be: What conditions could this model realistically encounter, and have we trained for them?

When that question is answered honestly and systematically, model reliability follows.
