How does dataset diversity affect the realism of cloned voices?

Dataset diversity directly affects how realistic and effective cloned voices sound. When training data covers a wide range of voices, accents, and emotional tones, AI systems can produce output that sounds more natural and authentic to users. This article looks at why diversity matters, how it improves voice synthesis, and how FutureBeeAI supports it.

Why Dataset Diversity Matters in Voice Cloning

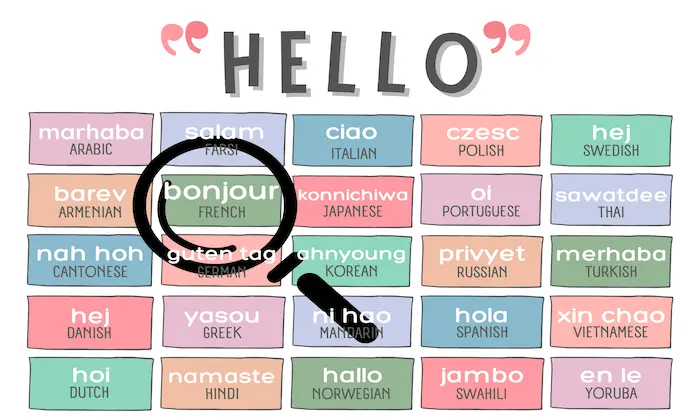

Voice synthesis technology, used in applications such as virtual assistants and game characters, relies heavily on diverse training datasets. These datasets span demographic factors such as age, gender, and regional accent, which helps voice models capture the nuances of human speech.

- Rich Representation of Speech: A diverse dataset captures a spectrum of speech patterns and emotional expressions, which are pivotal for creating voices that sound natural and relatable. For example, without a variety of accents, a voice synthesis model might fail to accurately replicate regional pronunciations, reducing the authenticity of the output.

- Enhanced User Engagement: Realism in synthesized voices is crucial for maintaining user engagement. When voices sound genuine and emotive, users are more likely to interact positively, whether it's through a gaming character or a customer service bot. Diverse speech datasets help achieve this by providing the necessary variation in speech.

How Dataset Diversity Enhances Voice Cloning

Voice cloning systems use deep learning models, typically neural networks, to analyze and replicate the unique characteristics of individual voices. Here's how diverse datasets play a vital role:

- Variety in Speaker Demographics: Including a broad range of speakers exposes models to different pitch, tone, and timing patterns. Depth per speaker matters as well: FutureBeeAI’s datasets typically require 30–40 hours of studio-grade recording per speaker, which supports expressive and stable voice replication.

- Quality Recording Standards: High-quality recordings are essential. FutureBeeAI records in professional studio environments and adheres to strict standards such as the WAV format and a 48 kHz sampling rate. Keeping background noise and other distortions to a minimum is critical for accurate voice cloning.

- Emotional and Prosodic Variability: Incorporating various emotional tones into the dataset allows models to generate expressive speech. This is crucial for applications requiring nuanced interactions, such as virtual assistants or accessibility solutions for people with speech impairments.
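
The recording standards and per-speaker hours listed above can be checked automatically. The following is a minimal Python sketch, not FutureBeeAI's actual tooling. It assumes the recordings are uncompressed PCM WAV files that Python's standard `wave` module can read, and the names `WavInfo`, `read_wav_info`, `meets_sample_rate`, and `hours_per_speaker` are hypothetical helpers introduced only for illustration.

```python
import wave
from dataclasses import dataclass

# Sampling rate quoted in the article (48 kHz).
TARGET_SAMPLE_RATE_HZ = 48_000


@dataclass
class WavInfo:
    """Header fields of one WAV recording (hypothetical helper type)."""
    sample_rate_hz: int
    channels: int
    sample_width_bytes: int
    duration_s: float


def read_wav_info(path):
    """Read header fields from a PCM WAV file using the standard library."""
    with wave.open(str(path), "rb") as wav:
        rate = wav.getframerate()
        return WavInfo(
            sample_rate_hz=rate,
            channels=wav.getnchannels(),
            sample_width_bytes=wav.getsampwidth(),
            duration_s=wav.getnframes() / rate,
        )


def meets_sample_rate(info, target_hz=TARGET_SAMPLE_RATE_HZ):
    """Return True when the recording uses the target sampling rate."""
    return info.sample_rate_hz == target_hz


def hours_per_speaker(recordings):
    """Total recorded hours per speaker.

    `recordings` is an iterable of (speaker_id, wav_path) pairs; this
    layout is an assumption, not a format described in the article.
    """
    totals = {}
    for speaker_id, path in recordings:
        hours = read_wav_info(path).duration_s / 3600
        totals[speaker_id] = totals.get(speaker_id, 0.0) + hours
    return totals
```

A team could run `hours_per_speaker` over its file list and compare each total with its own per-speaker target, such as the 30–40 hour range mentioned above. A header check like this confirms format only; it says nothing about noise or room acoustics, which still need listening tests or signal analysis.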

Real-World Applications and Impact

The implications of dataset diversity extend across numerous applications. In entertainment, for example, voice synthesis technologies benefit from diverse datasets that enable the creation of unique, compelling character voices. Similarly, in accessibility, realistic synthesized voices can significantly improve communication for individuals with speech impairments.

FutureBeeAI plays a pivotal role by providing ethically sourced and structured voice data, acting as a secure bridge between AI teams and verified voice contributors. This ensures that the data used in voice cloning projects is both high-quality and diverse, supporting the development of more effective and engaging voice applications.

Key Considerations for Teams

When building datasets for voice cloning, teams must make strategic decisions regarding:

- Speaker Selection: Choose a balanced mix of demographics. FutureBeeAI typically includes a minimum of two speakers per language and expands based on project needs.

- Recording Environment: Ensure all voice data is collected in professional studios to maintain consistency and quality.

- Inclusion of Emotional Expressions: Consider the need for emotional diversity to enhance the model’s ability to generate expressive speech outputs.
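
The speaker-selection point above can be turned into a quick audit of a speaker manifest. This is a rough Python sketch under stated assumptions: the manifest layout (one dictionary per speaker with `speaker_id`, `language`, `gender`, and `accent` keys), the sample rows, and the function names are all hypothetical and do not reflect FutureBeeAI's metadata schema. Only the two-speakers-per-language minimum comes from the text.

```python
from collections import Counter, defaultdict

# Minimum cited in the article: two speakers per language.
MIN_SPEAKERS_PER_LANGUAGE = 2

# Hypothetical manifest rows, invented purely for illustration.
MANIFEST = [
    {"speaker_id": "s1", "language": "en", "gender": "female", "accent": "US"},
    {"speaker_id": "s2", "language": "en", "gender": "male", "accent": "UK"},
    {"speaker_id": "s3", "language": "hi", "gender": "female", "accent": "Delhi"},
]


def speakers_per_language(manifest):
    """Count unique speakers for each language in the manifest."""
    by_language = defaultdict(set)
    for row in manifest:
        by_language[row["language"]].add(row["speaker_id"])
    return {language: len(ids) for language, ids in by_language.items()}


def languages_below_minimum(manifest, minimum=MIN_SPEAKERS_PER_LANGUAGE):
    """List languages that have fewer unique speakers than `minimum`."""
    counts = speakers_per_language(manifest)
    return sorted(lang for lang, n in counts.items() if n < minimum)


def attribute_shares(manifest, attribute):
    """Fraction of unique speakers holding each value of `attribute`."""
    value_by_speaker = {row["speaker_id"]: row[attribute] for row in manifest}
    counts = Counter(value_by_speaker.values())
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


if __name__ == "__main__":
    print(speakers_per_language(MANIFEST))
    print(languages_below_minimum(MANIFEST))
    print(attribute_shares(MANIFEST, "gender"))
```

With the sample rows, `languages_below_minimum` reports `hi` because it has a single speaker. What counts as a "balanced mix" is a project decision; the shares returned by `attribute_shares` only make any imbalance visible.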

Conclusion

Dataset diversity is integral to the success of voice cloning technologies, influencing the realism and user acceptance of synthesized voices. By prioritizing a wide representation of speakers and speech styles, AI teams can develop voice applications that are not only technically robust but also resonate with users on an emotional level. FutureBeeAI stands ready to support these endeavors with its comprehensive speech data collection and annotation services, ensuring that your voice synthesis projects are built on a foundation of rich, diverse data.

Smart FAQs

Q. How does FutureBeeAI ensure dataset diversity?

FutureBeeAI assembles datasets with a broad range of speakers across different languages, accents, and emotional expressions. This approach helps voice models trained on these datasets produce natural and varied speech output.

Q. What makes studio-grade recordings crucial for voice cloning?

Studio-grade recordings keep background noise to a minimum and preserve clarity, both of which are essential for training precise and realistic voice models. FutureBeeAI's commitment to high-quality recording standards helps achieve this level of detail and accuracy.
