How does camera distance affect face verification?

Camera distance plays a critical yet often underestimated role in the accuracy of face verification systems. The physical distance between the camera and the subject directly affects image quality, feature visibility, and ultimately the reliability of facial recognition algorithms used in real-world applications.

Why Camera Distance Matters

The relationship between camera distance and verification accuracy is non-linear and impacts multiple technical factors simultaneously:

Image Resolution: At shorter distances the face occupies more pixels in the frame, preserving fine-grained details such as facial contours and skin texture. These details are essential for precise face matching and identity verification.

Facial Feature Clarity and Geometry: As distance increases, the face covers fewer pixels and perspective flattens depth cues, so facial features become less distinct. This loss of depth and definition makes it harder for algorithms to distinguish between similar faces, increasing the risk of false acceptances or false rejections.
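
Both effects can be estimated roughly with an ideal pinhole-camera model: a feature's width in pixels scales with 1/distance, and a point closer to the camera (such as the nose tip) appears magnified relative to a reference plane (such as the ears) by distance / (distance - depth offset). The sketch below is illustrative only; the 1920-pixel image width, 70° field of view, ~63 mm interpupillary distance, and 0.10 m nose-to-ear depth are assumed values, not measurements or FutureBeeAI specifications.

```python
import math


def focal_length_px(image_width_px: int, horizontal_fov_deg: float) -> float:
    """Focal length in pixels derived from image width and horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def pixels_across(feature_width_m: float, distance_m: float, f_px: float) -> float:
    """Projected width in pixels of a feature at distance_m (ideal pinhole camera)."""
    return f_px * feature_width_m / distance_m


def relative_magnification(distance_m: float, depth_offset_m: float) -> float:
    """Scale of a point depth_offset_m closer to the camera than the reference plane.

    distance_m is the camera-to-reference-plane distance (e.g. to the ears).
    Values well above 1.0 mean the nearer feature (e.g. the nose) looks exaggerated.
    """
    if distance_m <= depth_offset_m:
        raise ValueError("distance must exceed the depth offset")
    return distance_m / (distance_m - depth_offset_m)


if __name__ == "__main__":
    # Illustrative assumptions, not measured values:
    f_px = focal_length_px(1920, 70.0)  # hypothetical 1920-px-wide camera, 70 deg HFOV
    IPD_M = 0.063                       # roughly typical adult interpupillary distance
    NOSE_DEPTH_M = 0.10                 # assumed nose-tip-to-ear depth
    for d in (0.3, 0.5, 1.0, 1.5, 2.0, 5.0, 10.0):
        print(f"{d:5.1f} m: {pixels_across(IPD_M, d, f_px):6.1f} px between pupils, "
              f"nose magnified x{relative_magnification(d, NOSE_DEPTH_M):.3f}")
```

Under these assumptions, moving from 10 m to 1 m gives ten times as many pixels between the pupils, while the nose exaggeration drops from about 50% at 0.3 m to about 5% at 2 m. Real lenses add distortion and blur that this model ignores.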

Practical Implications of Camera Distance on Verification Performance

1. Optimal Capture Distances: For frontal face images, a distance of approximately 1–2 meters usually offers the best balance between facial detail and minimal perspective distortion. Beyond this range, key features may degrade enough to negatively impact verification accuracy.

2. Framing Variability: Framing significantly influences how much usable facial information is captured. Close-up or shoulder-up captures retain more discriminative features than full-body shots taken from afar. Consistent framing improves model learning, while controlled variability improves robustness.

3. Lighting and Occlusion Sensitivity: Greater distances amplify challenges related to poor lighting and occlusions. Faces captured from afar in low-light conditions often suffer from noise and reduced contrast. Training with datasets such as an Occlusion Image Dataset helps models handle these compounded challenges.

4. Real-World Deployment Scenarios: Use cases like KYC, access control, and surveillance involve variable capture distances. Systems must be trained on distance-diverse data to maintain consistent performance, as verification accuracy often fluctuates significantly with distance changes.
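
When real far-distance captures are scarce, one common way to add distance diversity is to degrade close-range images synthetically. The following is a minimal sketch, assuming grayscale images scaled to [0, 1]: it box-averages k × k pixel blocks (under a pinhole model, k times the distance leaves about 1/k of the pixels along each axis), scales back up, then optionally lowers contrast and adds Gaussian noise to mimic dim, distant captures. The function name and parameters are hypothetical, and this is a rough stand-in for real optics, not any particular vendor's augmentation pipeline.

```python
from typing import Optional

import numpy as np


def simulate_distance(face, factor: int, contrast: float = 1.0,
                      noise_std: float = 0.0,
                      rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Approximate a capture taken `factor` times farther away (hypothetical helper)."""
    img = np.asarray(face, dtype=np.float64)
    if img.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")
    h, w = img.shape
    if not isinstance(factor, int) or factor < 1 or factor > min(h, w):
        raise ValueError("factor must be an integer in [1, min(H, W)]")

    # Box-average factor x factor blocks to throw away resolution.
    hc, wc = (h // factor) * factor, (w // factor) * factor
    small = img[:hc, :wc].reshape(hc // factor, factor, wc // factor, factor).mean(axis=(1, 3))

    # Nearest-neighbour upsampling, then edge padding, so the output keeps the input size.
    out = np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)
    out = np.pad(out, ((0, h - hc), (0, w - wc)), mode="edge")

    # Optional contrast reduction around the mean intensity (low-light approximation).
    if contrast != 1.0:
        m = out.mean()
        out = m + contrast * (out - m)

    # Optional additive Gaussian sensor noise.
    if noise_std > 0:
        rng = rng if rng is not None else np.random.default_rng()
        out = out + rng.normal(0.0, noise_std, size=out.shape)

    return np.clip(out, 0.0, 1.0)
```

For example, `simulate_distance(face, factor=4, contrast=0.6, noise_std=0.05)` produces a rough "four times farther, dimmer" variant of `face`. Box averaging keeps the sketch dependency-free; a production pipeline would more likely use a proper resampling filter and calibrated noise models.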

Optimizing Face Verification Systems

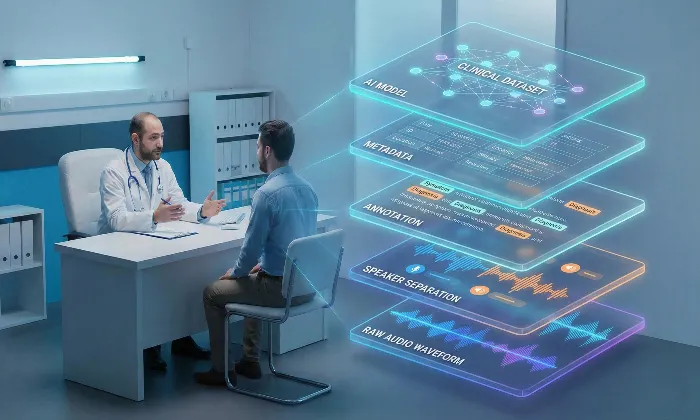

Effective face verification systems account for camera distance at the data collection stage, not as an afterthought. For example, FutureBeeAI’s facial datasets intentionally include captures ranging from close-up facial shots to full-body frames.

By collecting data across multiple distances, angles, and lighting conditions, teams can train models that generalize well across operational environments. The goal is not just higher accuracy in ideal conditions, but reliable performance in realistic, uncontrolled scenarios.
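
A simple way to check that a collection plan really spans these conditions is to count samples in every distance × angle × lighting cell and flag cells that fall short. The bin names and the per-cell minimum below are hypothetical placeholders; substitute whatever categories your capture protocol defines.

```python
from collections import Counter
from itertools import product
from typing import Dict, Iterable, List, Tuple

# Hypothetical category labels; replace with your own capture protocol's bins.
DISTANCE_BINS = ("<1 m", "1-2 m", "2-5 m", ">5 m")
ANGLES = ("frontal", "three-quarter", "profile")
LIGHTING = ("bright", "indoor", "low-light")

Cell = Tuple[str, str, str]


def coverage_gaps(samples: Iterable[Dict[str, str]],
                  min_per_cell: int) -> List[Tuple[Cell, int]]:
    """Return (cell, count) for every condition combination with too few samples."""
    counts = Counter((s["distance"], s["angle"], s["lighting"]) for s in samples)
    return [
        (cell, counts[cell])
        for cell in product(DISTANCE_BINS, ANGLES, LIGHTING)
        if counts[cell] < min_per_cell
    ]
```

Running `coverage_gaps(metadata, min_per_cell=50)` on per-image metadata lists the under-represented conditions, which can then be targeted in the next collection round.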

Camera distance is not a minor operational detail; it is a foundational variable that directly shapes face verification performance. Designing datasets with intentional distance diversity is essential for building reliable, real-world facial recognition systems.

FAQs

Q. How can I determine the best camera distance for my application?

A. The optimal distance depends on the use case. Conduct controlled testing across multiple distances in real-world conditions to identify the balance between facial detail, framing, and environmental context that best suits your system.
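
One way to structure such testing is to score genuine pairs (same person) and impostor pairs (different people) captured at each test distance, then compute the false rejection rate (FRR) and false acceptance rate (FAR) per distance at a fixed decision threshold. The sketch below assumes a verifier that outputs a similarity score where higher means more alike; the `Trial` record, the threshold, and the target rates are hypothetical placeholders, not values from any specific system.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Trial(NamedTuple):
    distance_m: float  # test-station distance at which the probe image was captured
    score: float       # similarity score from the verifier (higher = more alike)
    genuine: bool      # True if both images show the same person


def error_rates_by_distance(trials: Iterable[Trial],
                            threshold: float) -> Dict[float, Tuple[float, float]]:
    """Return {distance: (FRR, FAR)} at the given decision threshold."""
    genuine = defaultdict(lambda: [0, 0])   # [rejected, total]
    impostor = defaultdict(lambda: [0, 0])  # [accepted, total]
    for t in trials:
        accepted = t.score >= threshold
        if t.genuine:
            genuine[t.distance_m][0] += not accepted
            genuine[t.distance_m][1] += 1
        else:
            impostor[t.distance_m][0] += accepted
            impostor[t.distance_m][1] += 1

    rates = {}
    for d in sorted(set(genuine) | set(impostor)):
        g, i = genuine[d], impostor[d]
        frr = g[0] / g[1] if g[1] else float("nan")  # NaN when no genuine trials
        far = i[0] / i[1] if i[1] else float("nan")  # NaN when no impostor trials
        rates[d] = (frr, far)
    return rates


def acceptable_distances(rates: Dict[float, Tuple[float, float]],
                         max_frr: float, max_far: float) -> List[float]:
    """Distances meeting both targets; NaN comparisons are False, so untested bins drop out."""
    return [d for d, (frr, far) in rates.items() if frr <= max_frr and far <= max_far]
```

With enough trials per distance, the output of `acceptable_distances` suggests an operating range for the deployment. Small test sets give noisy rates, so the number of trials behind each estimate matters as much as the estimate itself.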

Q. What should I consider when capturing facial data for training?

A. Ensure diversity in capture distances, angles, and lighting conditions. A dataset that includes this variability will significantly improve generalization and verification accuracy. Using resources like a Multi Year Facial Image Dataset can also help models adapt to long-term facial changes over time.
