Why do generic TTS evaluations fail in enterprise use cases?

Evaluating Text-to-Speech (TTS) systems for enterprise applications requires more than surface-level metrics. A system can perform well in a controlled test environment yet struggle once it is deployed across diverse enterprise contexts. Generic TTS evaluations fall short for three main reasons: they lack contextual alignment, they rely too heavily on generalized metrics, and they give too little weight to user perception.

The Core Challenge: Context Determines Success

Generic evaluations typically prioritize broad metrics such as Mean Opinion Score (MOS) or simple A/B preference tests. These provide a useful baseline signal, but they rarely capture enterprise-specific demands. In enterprise environments, a TTS system must do more than sound clear: it must align with the brand voice, convey complex information accurately, and adapt to varied operational scenarios.
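
A Mean Opinion Score is simply the average of listener ratings on a 1-5 scale, as in ITU-T P.800 absolute category rating tests. The minimal sketch below uses only Python's standard library. Its function name `mos_with_ci` and the ratings are hypothetical, and it uses a normal-approximation 95% confidence interval as a simplification. It shows why the score alone is a thin signal: two systems can share the same MOS while listeners disagree far more about one of them.

```python
import math
import statistics


def mos_with_ci(ratings, z=1.96):
    """Return (MOS, lower, upper) for 1-5 listener ratings.

    Assumption: a normal-approximation 95% interval (z = 1.96); with small
    listener panels a t-distribution critical value would be more appropriate.
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings to estimate an interval")
    mos = statistics.mean(ratings)
    # Standard error of the mean, from the sample standard deviation.
    sem = statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, mos - z * sem, mos + z * sem


# Hypothetical ratings for two systems on the same prompts.
consistent = [4, 4, 4, 4, 4, 4]
polarizing = [5, 5, 5, 3, 3, 3]

for name, ratings in [("consistent", consistent), ("polarizing", polarizing)]:
    mos, lo, hi = mos_with_ci(ratings)
    print(f"{name}: MOS {mos:.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

Both systems report a MOS of 4.00, but the polarizing system's interval spans roughly 3.1 to 4.9. That spread hints that some listeners or prompts are poorly served, which is exactly what an enterprise evaluation should investigate rather than average away.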

For example, a customer service TTS solution must convey empathy and clarity to build trust, whereas an internal analytics narration tool may prioritize speed and precision over emotional tone. An evaluation that ignores these distinctions risks approving systems that perform well in isolation but underdeliver in practice.
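
One way to make the customer service versus analytics narration contrast explicit is to score the same attribute ratings against per-use-case priorities. In this minimal sketch, the attribute names, the integer weights in `USE_CASE_WEIGHTS`, and the `weighted_score` helper are hypothetical placeholders for requirements an enterprise team would define itself. They are not a standard scheme.

```python
# Hypothetical relative weights per use case; real values would come from
# the enterprise's own requirements for each deployment.
USE_CASE_WEIGHTS = {
    "customer_service": {"empathy": 5, "clarity": 4, "precision": 1},
    "analytics_narration": {"empathy": 0, "clarity": 4, "precision": 6},
}


def weighted_score(attribute_scores: dict[str, float], use_case: str) -> float:
    """Combine 1-5 attribute means into one score using the use case's weights."""
    weights = USE_CASE_WEIGHTS[use_case]
    total = sum(weights.values())
    # Weighted average; raises KeyError if a required attribute was not rated.
    return sum(attribute_scores[attr] * w for attr, w in weights.items()) / total


# Hypothetical mean ratings for one candidate voice.
voice = {"empathy": 2.5, "clarity": 4.5, "precision": 4.8}
print(round(weighted_score(voice, "customer_service"), 2))     # 3.53
print(round(weighted_score(voice, "analytics_narration"), 2))  # 4.68
```

The same voice looks strong for analytics narration and weak for customer service. A single context-free score would hide that distinction.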

Key Elements for Effective Enterprise TTS Evaluation

Use-Case Alignment: Evaluation prompts must mirror real operational scenarios, reflecting actual enterprise workflows, domain-specific terminology, and realistic conversational structures.

Attribute-Level Feedback: Evaluations should break down performance into attributes such as naturalness, prosody, pronunciation accuracy, emotional appropriateness, and clarity. Aggregate scores often mask weaknesses in individual dimensions.

Diverse Evaluator Pools: Native speakers and domain experts are essential for identifying pronunciation errors, contextual mismatches, and tone inconsistencies. Their insights help detect issues that generic panels may overlook.

Continuous Monitoring and Adaptation: Enterprise deployments require ongoing reassessment. Silent regressions can appear after model updates, shifts in input data, or expansion into new use cases. Regular, structured evaluations help maintain consistent quality over time.

Human Perception as Ground Truth: Metrics provide guidance, but enterprise success ultimately depends on how users perceive the voice. Human judgment must validate whether the system aligns with business objectives and audience expectations.
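
Attribute-level feedback and continuous monitoring reinforce each other. The sketch below compares a baseline release with a candidate release and flags any attribute whose mean dropped by more than a threshold, even when the overall average is unchanged. It assumes each rating is a dictionary of 1-5 scores per attribute. The helper names, the ratings, and the 0.3-point `max_drop` threshold are all hypothetical. A production check would also need larger samples and a significance test. This sketch only illustrates how aggregates mask regressions.

```python
from statistics import mean


def attribute_means(ratings):
    """Mean score per attribute; each rating maps attribute name -> 1-5 score."""
    return {attr: mean(r[attr] for r in ratings) for attr in ratings[0]}


def overall_mean(ratings):
    """Single aggregate score: the average of the per-attribute means."""
    return mean(attribute_means(ratings).values())


def find_regressions(baseline, candidate, max_drop=0.3):
    """Return {attribute: change} for attributes whose mean fell by more than max_drop."""
    base, cand = attribute_means(baseline), attribute_means(candidate)
    return {
        attr: round(cand[attr] - base[attr], 2)
        for attr in base
        if base[attr] - cand[attr] > max_drop
    }


# Hypothetical ratings for the same prompts before and after a model update.
baseline = [
    {"naturalness": 4, "prosody": 4, "pronunciation": 5, "clarity": 4},
    {"naturalness": 4, "prosody": 3, "pronunciation": 5, "clarity": 5},
]
candidate = [
    {"naturalness": 5, "prosody": 4, "pronunciation": 4, "clarity": 4},
    {"naturalness": 5, "prosody": 4, "pronunciation": 3, "clarity": 5},
]

print(overall_mean(baseline), overall_mean(candidate))  # 4.25 4.25
print(find_regressions(baseline, candidate))            # {'pronunciation': -1.5}
```

The aggregate stays at 4.25 because gains in naturalness and prosody offset a 1.5-point drop in pronunciation. That drop is the kind of silent regression a domain-expert panel would hear immediately.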

Practical Takeaway

Generic TTS evaluations often fail in enterprise environments because they overlook contextual requirements and perceptual subtleties. Enterprise-grade assessment demands use-case alignment, attribute-level diagnostics, structured evaluator selection, and continuous monitoring.

At FutureBeeAI, we design evaluation frameworks tailored to enterprise realities. Our structured methodologies integrate contextual prompts, attribute-wise analysis, and post-deployment oversight to ensure your TTS systems remain reliable and aligned with business goals.

If you are looking to strengthen your enterprise TTS evaluation strategy, connect with our team to explore customized solutions that enhance performance and user experience.

What Else Do People Ask?

Related AI Articles

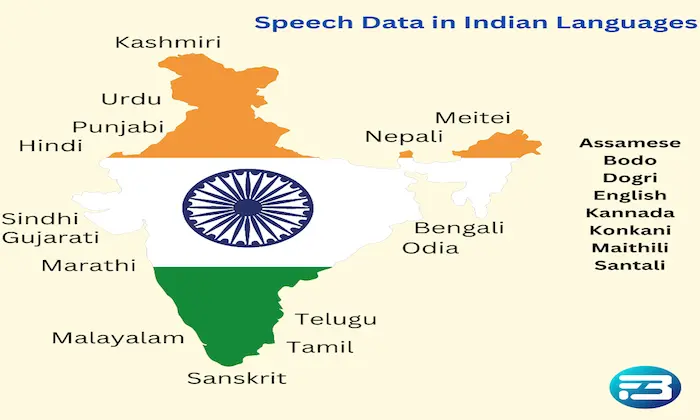

Browse Matching Datasets

Acquiring high-quality AI datasets has never been easier!!!

Get in touch with our AI data expert now!