What ethical risks exist in underrepresented-locale data collection?

Collecting data from underrepresented locales raises significant ethical risks. These communities, often overlooked in mainstream AI data collection, span diverse cultural, social, and economic contexts. Upholding ethical standards in these settings is essential for building AI systems that are fair, transparent, and beneficial to all communities.

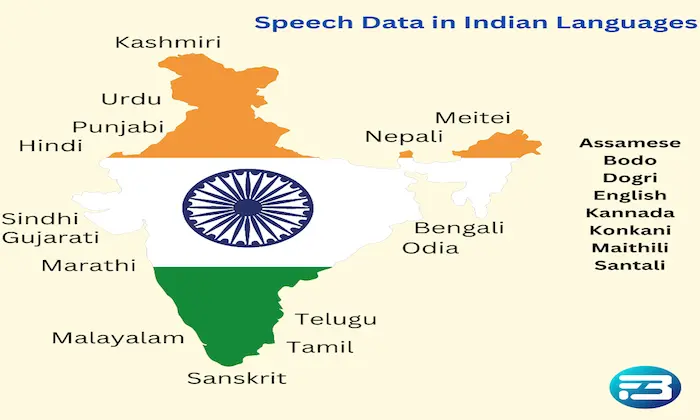

Defining Underrepresented Locales

Underrepresented locales are regions or communities that have historically been marginalized or overlooked in data-driven decision-making processes. This can include remote rural areas, indigenous communities, or urban regions lacking digital infrastructure. The absence of data from these areas can lead to AI models that do not adequately reflect their unique characteristics, perpetuating biases and disparities.

Key Ethical Risks in Underrepresented Locale Data Collection

Informed Consent: A critical ethical concern is obtaining genuine informed consent. People in these communities may not have access to comprehensive information about data usage, raising the risk of exploitation. It is vital to communicate clearly and ensure that participants fully understand how their data will be used and what their rights are.

Representation and Bias: Gaps in data from underrepresented locales can lead to biased AI systems. When data does not capture the full range of a community's languages, customs, and circumstances, the resulting models may reinforce stereotypes and fail to meet that community's needs. Representative data is therefore essential for building equitable AI solutions.

Exploitation and Power Dynamics: Communities risk being treated merely as data sources rather than as partners. This power imbalance can leave them with no benefit from the data they provide, undermining trust and collaboration.

Why Ethical Data Collection Matters

Ethical data collection is not just about compliance; it is about fostering trust and ensuring that AI systems serve all communities fairly. Ignoring ethical risks can produce AI systems that perpetuate social injustices and erode public trust. The long-term consequences include reputational damage for organizations and resistance to future AI initiatives.

Navigating Ethical Dilemmas

To manage these ethical challenges effectively, we recommend the following strategies:

Engage with Local Communities: Build partnerships with local leaders and organizations to understand the cultural and social nuances of data collection. This helps ensure that the process is respectful and aligned with community values.

Implement Transparent Processes: Clearly communicate the purpose, scope, and potential uses of the data to participants. Providing channels for feedback and making sure participants feel valued are crucial for maintaining trust.

Focus on Diversity in Data: Actively seek diverse voices within underrepresented locales through targeted outreach. This helps ensure that the collected data reflects the community's complexity and contributes to more equitable AI systems.

Establish Ethical Guidelines: Develop guidelines that prioritize participants' rights and welfare, including obtaining explicit consent and allowing data withdrawal at any time. Regular audits can help maintain these standards.
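To make the consent and withdrawal guideline concrete, the sketch below shows one way a project could track explicit, purpose-specific consent and honor withdrawal at any time. It is a minimal illustration under stated assumptions, not FutureBeeAI's actual system: the class names `ConsentRecord` and `ConsentRegistry`, the `usable` and `audit` methods, and the purpose label `speech_model_training` are all hypothetical, and a real deployment would also need persistent storage and deletion of already-collected data.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of one participant's explicit consent."""
    participant_id: str
    purposes: frozenset[str]  # the specific uses the participant agreed to
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None

    def permits(self, purpose: str) -> bool:
        # Consent counts only if it is still active and covers this purpose.
        return self.withdrawn_at is None and purpose in self.purposes


class ConsentRegistry:
    """Hypothetical in-memory registry; real systems would persist records."""

    def __init__(self) -> None:
        self._records: dict[str, ConsentRecord] = {}

    def grant(self, participant_id: str, purposes: set[str]) -> None:
        # Explicit consent: the participant must name at least one purpose.
        if not purposes:
            raise ValueError("consent must name at least one explicit purpose")
        self._records[participant_id] = ConsentRecord(
            participant_id, frozenset(purposes), datetime.now(timezone.utc)
        )

    def withdraw(self, participant_id: str) -> None:
        # Withdrawal is allowed at any time and takes effect immediately.
        record = self._records.get(participant_id)
        if record is not None and record.withdrawn_at is None:
            record.withdrawn_at = datetime.now(timezone.utc)

    def usable(self, samples: list[dict], purpose: str) -> list[dict]:
        """Keep only samples whose contributor currently consents to `purpose`."""
        return [
            s for s in samples
            if (r := self._records.get(s["participant_id"])) is not None
            and r.permits(purpose)
        ]

    def audit(self) -> dict[str, int]:
        """Counts a periodic audit might review."""
        withdrawn = sum(r.withdrawn_at is not None for r in self._records.values())
        return {"active": len(self._records) - withdrawn, "withdrawn": withdrawn}


# Illustrative usage with made-up participants.
registry = ConsentRegistry()
registry.grant("p1", {"speech_model_training"})
registry.grant("p2", {"speech_model_training"})
registry.withdraw("p2")
samples = [
    {"participant_id": "p1", "audio": "a.wav"},
    {"participant_id": "p2", "audio": "b.wav"},
]
print([s["participant_id"] for s in registry.usable(samples, "speech_model_training")])  # ['p1']
print(registry.audit())  # {'active': 1, 'withdrawn': 1}
```

Filtering at the point of use, rather than only at collection time, means a withdrawal made after collection still keeps that participant's data out of later training runs.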

Building Trust Through Ethical Data Practices

At FutureBeeAI, we align our practices with ethical pillars such as transparency, fairness, and accountability, so that every data collection effort respects the dignity and diversity of contributors. This approach not only improves the quality of AI systems but also promotes greater equity and trust in technology. Our commitment to ethical data collection means engaging communities as partners, not just data sources, and ensuring that the benefits of AI are shared equitably.

FAQs

Q. How does FutureBeeAI ensure informed consent in underrepresented locales?

A. FutureBeeAI prioritizes clear communication and transparency. We use our Yugo platform to provide participants with detailed information about data usage, ensuring they can make informed decisions. Consent is always explicit, and participants have the right to withdraw at any time.

Q. What measures does FutureBeeAI take to address bias in data collection?

A. We implement inclusive sampling methods and bias-awareness training for our teams. Our processes ensure demographic representation and diversity in data, minimizing bias and enhancing the fairness of AI models.
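One simple way to check demographic representation, in general terms, is to compare each group's share of the collected data against a target share and flag groups that fall short. The sketch below illustrates that idea; it is not a description of FutureBeeAI's internal process. The function name `representation_gaps`, the group labels, the target shares (which a project might derive from sources such as census figures), and the 5% tolerance are all hypothetical.

```python
from collections import Counter


def representation_gaps(
    group_labels: list[str],
    target_shares: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, float]:
    """Hypothetical audit: return each group whose observed share of the
    samples falls more than `tolerance` below its target share, mapped to
    the shortfall (target minus observed, rounded)."""
    if not group_labels:
        raise ValueError("no samples to audit")
    counts = Counter(group_labels)
    total = len(group_labels)
    gaps: dict[str, float] = {}
    for group, target in target_shares.items():
        observed = counts.get(group, 0) / total
        shortfall = target - observed
        if shortfall > tolerance:
            gaps[group] = round(shortfall, 3)
    return gaps


# Illustrative toy data, not real collection figures.
labels = ["urban"] * 70 + ["rural"] * 25 + ["indigenous"] * 5
targets = {"urban": 0.50, "rural": 0.35, "indigenous": 0.15}
print(representation_gaps(labels, targets))  # {'rural': 0.1, 'indigenous': 0.1}
```

Groups flagged by a check like this would be candidates for the targeted outreach described earlier; over-represented groups are deliberately not flagged, since the concern here is missing voices.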