How does the platform support code-mixed TTS evaluation?

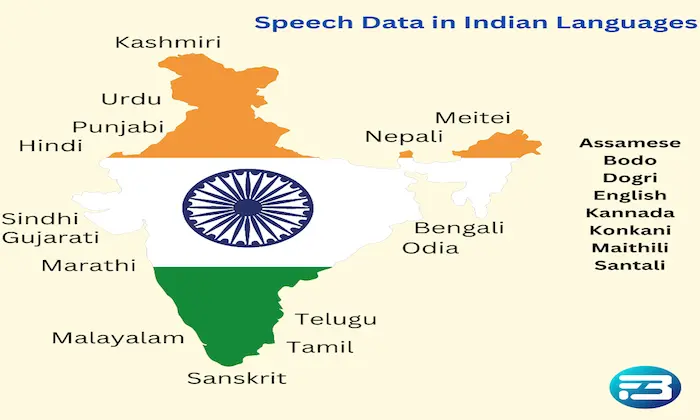

In today's interconnected world, code-mixing, the blending of multiple languages within a single conversation, has become increasingly common. For AI engineers and product managers, ensuring that Text-to-Speech (TTS) systems handle these multilingual inputs with precision is not just a technical challenge but a critical business need. Let's explore practical strategies for evaluating code-mixed TTS, focusing on accuracy, user trust, and the operational realities facing AI practitioners today.

The Importance of Code-Mixed TTS Evaluation

Imagine a customer service hotline where the agent fluidly switches between English and Spanish. A TTS system that stumbles over such code-mixed dialogue can cause misunderstandings and erode user trust. As global communication trends towards multilingualism, TTS systems must be evaluated for their ability to handle these language switches naturally. Mispronunciations or unnatural pauses at a switch point detract from the user experience, which makes robust evaluation paramount.

Key Strategies for Rigorous Code-Mixed TTS Evaluation

Attribute-Level Feedback: Evaluating TTS systems on specific attributes like naturalness, prosody, and pronunciation accuracy is crucial. For example, subtle shifts in tone or stress during language transitions can result in significant misunderstandings. Using attribute-wise structured tasks enables evaluators to identify where models may falter in code-mixed scenarios, ensuring these systems meet user expectations.
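As a rough illustration of attribute-wise scoring, the sketch below averages evaluator ratings per utterance and attribute, then flags the weak combinations. The record layout, the 1-5 scale, the `threshold` value, and the function names are all assumptions made for this example; they do not describe the platform's actual schema or tooling.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rating records: one row per evaluator judgment.
# Scores use an assumed 1-5 scale; attribute names mirror those in the text.
ratings = [
    {"utterance": "u1", "attribute": "naturalness", "score": 4},
    {"utterance": "u1", "attribute": "naturalness", "score": 5},
    {"utterance": "u1", "attribute": "prosody", "score": 2},
    {"utterance": "u1", "attribute": "prosody", "score": 3},
    {"utterance": "u1", "attribute": "pronunciation", "score": 4},
    {"utterance": "u2", "attribute": "naturalness", "score": 3},
    {"utterance": "u2", "attribute": "prosody", "score": 4},
    {"utterance": "u2", "attribute": "pronunciation", "score": 2},
]


def mean_score_by_attribute(rows):
    """Average the scores for each (utterance, attribute) pair."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[(row["utterance"], row["attribute"])].append(row["score"])
    return {key: mean(scores) for key, scores in buckets.items()}


def weak_spots(means, threshold=3.0):
    """Return the (utterance, attribute) pairs whose mean is below threshold."""
    return sorted(key for key, value in means.items() if value < threshold)


means = mean_score_by_attribute(ratings)
flagged = weak_spots(means)
print(flagged)
```

Keeping the attributes separate, rather than collapsing them into one overall score, is what lets a reviewer see that an utterance sounds natural overall yet has weak prosody at the language switch.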

Native Speaker Engagement: Involving native speakers from all relevant languages is essential. They provide deep insights into contextual correctness and emotional nuances that non-native evaluators might miss. Consider a TTS system that perfectly pronounces a word in French but misses the emotional undertone when mixed with English. Native evaluators can pinpoint these discrepancies, guiding refinements.
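One simple way to enforce this is to check, before a task is assigned, that the evaluator panel includes a native speaker of every language in the code-mixed sample. The sketch below assumes a hypothetical panel structure and short language codes; neither comes from the platform itself.

```python
def missing_native_coverage(languages, evaluators):
    """Return the languages in a sample that no panel member speaks natively."""
    covered = set()
    for evaluator in evaluators:
        covered.update(evaluator["native_languages"])
    return sorted(set(languages) - covered)


# Hypothetical panel for a French-English code-mixed sample.
panel = [
    {"name": "evaluator_a", "native_languages": ["en"]},
    {"name": "evaluator_b", "native_languages": ["en", "hi"]},
]

gaps = missing_native_coverage(["en", "fr"], panel)
print(gaps)
```

Here the panel has no native French speaker, so the sample would be held back until one is added.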

Continuous Evaluation Mechanisms: TTS systems evolve and can drift over time, particularly after updates or changes in training data. Our platform employs continuous evaluation processes, including regular benchmarking and feedback loops, to maintain high standards in code-mixed scenarios. This proactive approach helps detect and correct any silent regressions, those subtle quality declines that might otherwise go unnoticed.
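A regression check of this kind can be sketched as a comparison of per-attribute mean scores between a baseline release and a candidate release. The score lists, the `tolerance` value, and the function name below are illustrative assumptions, not the platform's actual benchmarking logic; a production setup would likely also apply a statistical significance test.

```python
from statistics import mean


def find_regressions(baseline, candidate, tolerance=0.2):
    """Return attributes whose mean score dropped by more than `tolerance`."""
    regressions = {}
    for attribute, old_scores in baseline.items():
        new_scores = candidate.get(attribute)
        if not new_scores:
            continue  # attribute was not re-evaluated, so nothing to compare
        drop = mean(old_scores) - mean(new_scores)
        if drop > tolerance:
            regressions[attribute] = round(drop, 2)
    return regressions


# Hypothetical 1-5 ratings on the same code-mixed test set for two releases.
baseline = {
    "naturalness": [4, 4, 5],
    "prosody": [4, 4, 4],
    "pronunciation": [4, 5, 5],
}
candidate = {
    "naturalness": [4, 5, 4],
    "prosody": [3, 4, 3],
    "pronunciation": [4, 5, 5],
}

regressions = find_regressions(baseline, candidate)
print(regressions)
```

In this example only prosody is reported, even though naturalness and pronunciation are unchanged. That is the kind of silent regression an aggregate score could hide.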

Practical Takeaway

Successfully evaluating code-mixed TTS models demands a multi-faceted strategy encompassing rigorous attribute evaluations, native speaker involvement, and ongoing monitoring. These practices not only highlight system weaknesses but also mitigate the risks of silent regressions, which can lead to user dissatisfaction.

For those looking to refine their TTS evaluation processes, FutureBeeAI offers flexible methodologies and robust quality assurance tools tailored to meet the diverse needs of global audiences. By leveraging our platform, your team can ensure that your TTS systems deliver a harmonious multilingual experience, bolstering user trust and satisfaction. Visit FutureBeeAI to discover how we can support your next TTS project with precision and insight.

By focusing on these strategies, AI practitioners can master the challenges of code-mixed TTS evaluation, ensuring that no voice gets lost in translation.
