If Your Model Hasn’t Heard a Hospital, It Won’t Survive in One

A model that aces studio tests can still fail on its first day in a clinic. Imagine a cardiologist dictating a discharge summary while an IV pump alarms, a nurse asks a question, and medication names are corrected mid-sentence, all on a mobile device in a noisy environment. Clinical reality is messy, human, and acoustically variable. Production medical transcription systems must handle exactly this kind of unpredictability, yet studio recordings and synthetic voices rarely prepare a model for it.

This is why authentic doctor dictation datasets matter: they teach models real clinical speech, not just scripted audio. But sourcing authentic audio collides with responsibility. Hospital dictations are packed with protected health information (PHI), tangled with legal constraints, and vary widely in acoustic quality. Most hospitals cannot release large volumes of native dictation due to patient privacy and compliance requirements.

FutureBeeAI meets this challenge differently. We preserve clinical realism by having verified physicians record dictations from medically accurate scenarios using dummy patient details. Every file includes rich metadata, making it auditable and configurable. The goal: provide ML teams with the real-world speech patterns their models need while keeping data fully compliant and adaptable to specific project needs.

In the sections that follow, I’ll unpack what authentic clinical dictation sounds like, why it matters for model performance, and how FutureBeeAI captures that authenticity responsibly. We’ll also explore the operational choices that make this approach practical for production-grade ASR: contributor verification, acoustic mixes, metadata, and PII (personally identifiable information) tagging.

The Authenticity Paradox: Powerful Yet Problematic

What Real Doctor Dictation Actually Sounds Like

Clinical dictation isn't one thing; it's a set of predictable, recurring patterns, layered with variability. Recordings include device handling noise, background sounds from hallway traffic and hospital equipment, and input artifacts from phones and tablets. Telemedicine adds bandwidth compression and signal effects.

Semantic complexity is just as important. Clinicians mix jargon, abbreviations, and narrative reasoning, with mid-sentence corrections ("...no, change that to..."), shorthand orders, and domain-specific emphasis. Accents and non-native pronunciations are common; datasets lacking this diversity risk biasing models toward certain speaker groups. These “noisy” features are not errors; they are essential signals models must learn to interpret.

Why Authentic Audio Trains Superior Speech Models

Models trained on deployment-like data perform more robustly. Exposure to acoustic variability builds resilience: systems can separate clinical terms from environmental noise, tolerate device artifacts, and stay accurate across telephony or mobile inputs. Familiarity with real clinician phrasing enables models to handle corrections and disfluencies, which is key for reliable transcription and structured data extraction.

Why Native Clinical Audio Can't Be Used at Scale

The qualities that make hospital dictations valuable also make them risky. Real recordings embed patient names, dates, medications, and other direct identifiers. HIPAA, GDPR, and other legal frameworks require strict controls on such data. Historical dictations often lack documented consent, so they can't be repurposed for modern datasets. Hospitals usually can’t share large streams of native dictation without overhauling workflow and consent across departments.

This is the paradox: the most helpful audio is usually the least shareable. Solving it demands engineering controls that block PHI while keeping the signal clinically real.

Realism Without Risk

FutureBeeAI resolves this with a process rooted in responsible data collection. Verified physicians from all major specialties record free-form dictations based on medically valid scenarios, always using dummy patient details. Recordings are captured across a planned acoustic mix, typically targeting 30–40% noisy samples unless clients request a different ratio, on a range of mobile devices that maintain realistic signal conditions.
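
To make the planned acoustic mix concrete, here is a minimal sketch of how a team might check that a delivered batch lands inside a 30–40% noisy band. It assumes each recording carries a simple "noisy" or "clean" label; the function names and label values are hypothetical and are not part of any FutureBeeAI or Yugo API.

```python
from typing import Sequence


def noisy_fraction(noise_labels: Sequence[str]) -> float:
    """Share of recordings whose (hypothetical) label is "noisy"."""
    if not noise_labels:
        raise ValueError("no recordings to check")
    return sum(1 for label in noise_labels if label == "noisy") / len(noise_labels)


def meets_acoustic_mix(noise_labels: Sequence[str], low: float = 0.30, high: float = 0.40) -> bool:
    """True if the noisy share falls inside the target band (30-40% by default)."""
    return low <= noisy_fraction(noise_labels) <= high


# Illustrative batch: 35 noisy and 65 clean recordings.
batch = ["noisy"] * 35 + ["clean"] * 65
share = noisy_fraction(batch)
ok = meets_acoustic_mix(batch)
```

A client requesting a different ratio would simply pass other `low`/`high` bounds; the check itself stays the same.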

Every file includes metadata (device type, noise profile, specialty, prompt ID, consent timestamp), allowing ML teams to filter and evaluate precisely. For example, a team can benchmark model performance on telemedicine-style noisy recordings or compare across devices.
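
A metadata-driven evaluation split might look like the following sketch. The `RecordingMeta` fields mirror the metadata listed above (device type, noise profile, specialty, prompt ID, consent timestamp), but the field names, label values, and the `select`/`group_by` helpers are illustrative assumptions rather than a published schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class RecordingMeta:
    # Hypothetical field names mirroring the per-file metadata described above.
    file_id: str
    device_type: str
    noise_profile: str
    specialty: str
    prompt_id: str
    consent_timestamp: datetime


def select(records, **criteria):
    """Keep records whose fields match every criterion, e.g. noise_profile="telemedicine"."""
    return [r for r in records
            if all(getattr(r, field) == value for field, value in criteria.items())]


def group_by(records, field):
    """Split records into evaluation slices keyed by one metadata field."""
    slices = defaultdict(list)
    for r in records:
        slices[getattr(r, field)].append(r)
    return dict(slices)


# Illustrative records; the label values are made up for this example.
ts = datetime(2024, 1, 15, tzinfo=timezone.utc)
records = [
    RecordingMeta("f001", "android_phone", "telemedicine", "cardiology", "p01", ts),
    RecordingMeta("f002", "iphone", "clean", "cardiology", "p02", ts),
    RecordingMeta("f003", "iphone", "telemedicine", "neurology", "p03", ts),
]
telemedicine_noisy = select(records, noise_profile="telemedicine")
per_device = group_by(records, "device_type")
```

Each slice can then be scored separately, so a regression on, say, one device family shows up on its own instead of being averaged away.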

How Authentic-Style Ethical Simulation Works

The pipeline follows clear, auditable steps:

- Medically valid scenarios are generated and labeled by specialty.

- Credentialed clinicians record dictations using client-specified device and environment mixes.

- Metadata is captured automatically and validated manually in Yugo, logging device, environment, and contributor ID.

- Medical reviewers check terminology, case logic, and dummy patient detail adherence.

- Transcription workflows apply PII tagging to flag and remove any accidental identifiers.

- Optional voice anonymization is applied for projects requiring further privacy.
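
The PII tagging step can be approximated with pattern matching. The sketch below is a minimal, hypothetical illustration: the three regex patterns (dates, phone numbers, MRN-style identifiers) and the `[TYPE]` tag format are assumptions, and a real tagger would also need name detection and locale-specific formats.

```python
import re

# Hypothetical patterns for illustration only. A production tagger would also need
# name detection (e.g. NER) and locale-specific date, phone, and ID formats.
PII_PATTERNS = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{5,10}\b", re.IGNORECASE),
}


def tag_pii(transcript: str) -> tuple[str, list[tuple[str, str]]]:
    """Replace each matched span with a [TYPE] tag; return the tagged text and what was found."""
    findings: list[tuple[str, str]] = []
    tagged = transcript
    for label, pattern in PII_PATTERNS.items():
        # Record matches before substituting so reviewers can see what was flagged.
        findings.extend((label, m.group(0)) for m in pattern.finditer(tagged))
        tagged = pattern.sub(f"[{label}]", tagged)
    return tagged, findings


text = "Seen on 03/14/2024, MRN 4482913, callback 555-123-4567."
tagged, found = tag_pii(text)
```

Because the dictations use dummy patient details by design, a tagger like this acts as a safety net for accidental identifiers rather than the primary privacy control.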

This lets us deliver audio that preserves genuine clinical phrasing and acoustic diversity yet stays PHI-free, traceable, and configurable. Specialty coverage, language, accent targets, device mix, and noise ratios are all customizable for ML project requirements.

Acoustic Realism → Better Robustness

Models trained only on clean or synthetic speech are brittle in real deployment, breaking down under hallway noise, mobile artifacts, or telemedicine compression. Authentic-style datasets, spanning real devices in planned acoustic proportions (often 30–40% noisy samples, adjustable to client requirements), prepare systems for the full spectrum of live clinic environments. For product teams, that means fewer edge-case failures and more consistent ASR output.

Semantic Depth → Superior Medical Understanding

Synthetic speech may hit the right words but misses the real-world narrative, corrections, and domain logic clinicians use. Real dictations teach ASR systems how clinical language flows, so models recognize abbreviations and medical jargon even when phrased non-linearly or with corrections.

Diversity → Lower Bias and Generalization Risk

Bias creeps in when models are trained on overly clean or narrow datasets. By sourcing dictations from verified clinicians worldwide, with balanced accent representation and specialty coverage, FutureBeeAI helps models generalize fairly across speaker groups, which is essential for telemedicine and multinational healthcare.

Measurable Gains

Metrics like WER (word error rate) and MC-WER (medical context WER) typically improve when authentic clinical dictation is added to the training mix. For instance, in a pilot, a transcription model built with FutureBeeAI’s dataset saw notable reductions in medical term misinterpretations and editing needs, even in noisy, live-clinic conditions.
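
For reference, WER is the word-level edit distance between a reference transcript and the model output, divided by the number of reference words: WER = (S + D + I) / N, where S, D, and I count substitutions, deletions, and insertions. The sketch below implements that standard definition with dynamic programming; it covers plain WER only and does not attempt to reproduce MC-WER.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Standard WER: (substitutions + deletions + insertions) / reference word count."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev holds the previous DP row: edit distances from ref[:i-1] to every prefix hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (cost 1) or match (cost 0)
            )
        prev = curr
    return prev[-1] / len(ref)


ref = "start metoprolol 25 mg twice daily"
hyp = "start metoprolol 50 mg daily"
wer = word_error_rate(ref, hyp)
```

In this example one dosage is substituted and one word is dropped, so two of six reference words are wrong. Real evaluations usually normalize case, punctuation, and number formats before scoring; this sketch only splits on whitespace.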

Compliance, Trust, and Traceability: The Backbone of Ethical Medical Audio

HIPAA/GDPR Compliance by Design

Compliance starts before recording. Physicians are trained to use dummy patient details and never real PII. Consent is collected through multilingual workflows in the Yugo platform. Data minimization is foundational: only essential data is gathered, backed by three layers of safeguards:

- PHI avoidance at the source, through contributor training.

- Medical reviewer checks for compliance.

- PII tagging during transcription, as a final layer that keeps transcripts PII-free.

Yugo operationalizes contributor onboarding, device monitoring, metadata capture, accent validation, and audit logging. Every session is transparent, with project-level consent logs and credential verification (medical license and role). For example, a client can audit all neurology recordings captured in India by device, accent, and clinician type.

Audio De-Identification and Voice Anonymization

After PHI-avoidance protocols, audio undergoes PII tagging and reviewer checks. Optional voice anonymization is available for clients prioritizing clinician privacy, ensuring realism without identity risk.

Workflow Recap:

- Dummy PII in scenario prompt.

- Clinicians record dictation.

- Medical reviewers check adherence.

- PII tagging during transcription.

- Optional voice anonymization.

Authenticity With Governance

The result: a compliant audio collection that is metadata-rich, globally auditable, and safe for clinical AI. Datasets can scale confidently without PHI exposure or governance risk.

Real-World Impact: Better Data, Tangible Improvements

Medical Transcription AI

Clinicians dictate at speed; editors have limited time. Models built on authentic speech better capture specialty terms, handle corrections, and reduce editing needs. For example, in a real outpatient deployment, authentic-style models reduced post-edit time for clinical staff by cutting context breaks and misinterpretations of domain abbreviations.

Ambient Clinical Documentation

Ambient systems need stability and resilience for multi-speaker, real-time clinical encounters. Authentic-style data with planned acoustic diversity improves reliability in bedside note generation and live patient conversations.

Multilingual and Accent-Rich ASR

With balanced accent quotas and broad specialty coverage, FutureBeeAI’s physician network delivers data that enables models to perform consistently across language boundaries and cross-border telemedicine.
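
One way to operationalize balanced accent quotas is to compare each accent's observed share against a target and flag deviations. In this hypothetical sketch, the accent labels, the equal-share quotas, and the 5-percentage-point tolerance are all assumptions chosen for illustration.

```python
from collections import Counter


def accent_shares(accents):
    """Observed share of recordings per accent label."""
    counts = Counter(accents)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no recordings to check")
    return {accent: n / total for accent, n in counts.items()}


def quota_violations(accents, quotas, tolerance=0.05):
    """Accents whose observed share misses its target quota by more than `tolerance`.

    Returns {accent: observed - target}; accents absent from `quotas` are ignored.
    """
    shares = accent_shares(accents)
    gaps = {accent: shares.get(accent, 0.0) - target for accent, target in quotas.items()}
    return {accent: round(gap, 4) for accent, gap in gaps.items() if abs(gap) > tolerance}


# Hypothetical batch and equal-share quotas across three accent groups.
batch = ["indian_english"] * 50 + ["us_english"] * 30 + ["nigerian_english"] * 20
quotas = {"indian_english": 1 / 3, "us_english": 1 / 3, "nigerian_english": 1 / 3}
violations = quota_violations(batch, quotas)
```

Positive gaps mark over-represented accents and negative gaps under-represented ones, which tells a collection team where to recruit next.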

Telemedicine, Mobile & Edge Devices

Training on mobile and telemedicine recordings, with real environmental noise and device artifacts, ensures models deliver reliable, consistent performance even in variable signal conditions.

FutureBeeAI’s Operational Excellence at Scale

FutureBeeAI goes beyond simulated dictation. Our edge is:

- Global, verified clinicians: Contributors are credentialed medical professionals, providing clinical logic and specialty phrasing.

- Multilingual network: Over 100 languages and controlled accent balance prevent regional bias.

- End-to-end QA: Specialists validate terminology, logic, and PHI exclusion.

- Transparent metadata: Device type, environment, specialty, accent, and consent timestamp for every file, supporting granular analysis and configuration.

- Custom collections: Datasets tailored to specialty, device, noise, and language requirements with only naturally occurring acoustic conditions.

As the industry evolves, it’s crucial to separate what’s genuinely possible from what’s simply assumed. Despite all the progress in medical speech AI, persistent misunderstandings often lead to flawed strategies and unreliable results. Before wrapping up, let’s challenge the most common misconceptions about healthcare speech data and clarify what truly works in practice and in production.

Correcting Industry Misconceptions

Misunderstandings about clinical speech data shape how teams build, and often misbuild, medical ASR systems. Here are the myths we encounter most often, along with the grounded reality.

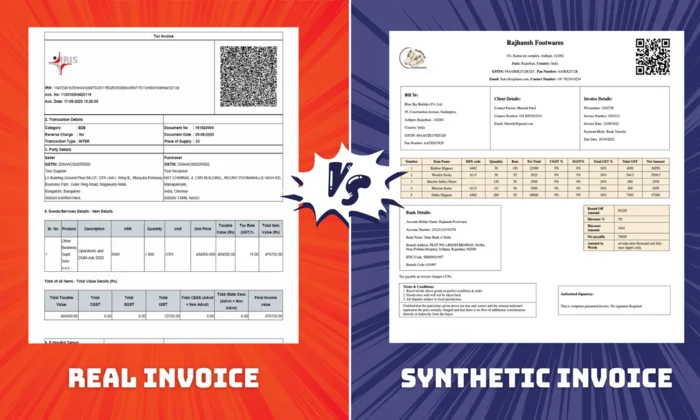

Myth 1: “Synthetic data is enough for training medical ASR.”

Reality: Synthetic voices cannot reproduce real clinician cadence, corrections, shorthand, or acoustic chaos. Models trained on synthetic inputs collapse when exposed to clinical variability.

Myth 2: “Simulated doctor dictation is always realistic.”

Reality: Many simulated datasets rely on scripted readings by non-clinical speakers. Authenticity requires real physicians, medically valid scenarios, dummy PII, and realistic device and noise conditions; otherwise, the audio becomes predictable and unrepresentative.

Myth 3: “HIPAA compliance only means redacting PHI from transcripts.”

Reality: Compliance involves protecting spoken identifiers, minimizing PII at the source, applying PII tags, and offering voice anonymization. Text redaction is only one piece.

Myth 4: “General ASR models can handle medical speech.”

Reality: General-purpose ASR breaks on specialty jargon, drug names, abbreviations, and clinical reasoning patterns. Domain-specific data is non-negotiable.

Myth 5: “More hours automatically mean better models.”

Reality: Quality and diversity consistently outperform raw hours. A smaller set of clinically authentic speech often beats thousands of hours of generic or synthetic audio.

These misconceptions create false confidence and brittle systems, exactly what clinical AI cannot afford.

Authenticity Elevated, Ethics Embedded

Healthcare speech AI performance is determined by the quality and realism of the data it learns from. Clean studio recordings and synthetic voices don’t reflect clinical reality; raw hospital dictations are too sensitive for ethical use.

FutureBeeAI’s clinically authentic, ethically simulated doctor dictation datasets solve this problem by integrating compliance and reviewability into every layer. With verified global clinicians, multilingual coverage, specialty depth, and transparent metadata, we set a new standard for reliable, responsible healthcare speech data.

If you’re building medical transcription AI, telemedicine ASR, or multilingual clinical speech solutions, contact FutureBeeAI to pilot a collection tailored to your specialty, language, and acoustic needs.
